The 2nd Law#

1 NATURE’S DISSYMMETRY#

War and the steam engine joined forces and forged what was to become one of the most delicate of concepts. Sadi Carnot, the son of a minister of war under Napoleon and the uncle of a later president of the Republic, fought in the outskirts of Paris in 1814. In the turmoil that followed, he formed the opinion that one cause of France’s defeat had been her industrial inferiority. The contrast between England’s and France’s use of steam epitomized the difference. He saw that taking away England’s steam engine would remove the heart of her military power. Gone would be her coal, for the mines would no longer be pumped. Gone would be her iron, for, with wood in short supply, coal was essential to ironmaking. Gone, then, would be her armaments.

But Carnot also perceived that whoever possessed efficient steam power would be not only the industrial and military master of the world, but also the leader of a social revolution far more universal than the one France had so recently undergone. Carnot saw steam power as a universal motor. This motor would displace animals because of its greater economy and would supersede wind and water because of its reliability and its controllability. Carnot saw that the universal motor would enlarge humanity’s social and economic horizons, and carry it into a new world of achievement. Many people today can see the early steam engines, those cumbersome hulks of wood and iron, only as ponderous symbols of the squalor and poverty that typified the newly emerging industrial society. In fact, those earthbound leviathans proved to be the wings of humanity’s aspirations.

Carnot was a visionary and a sharp analyst of what was needed to improve the steam engine (as his father had been an acute analyst of mechanical devices), but he could have had no inkling of the intellectual revolution to which his technologically motivated studies would lead. In discovering that there is an intrinsic inefficiency in the conversion of heat into work, he set in motion an intellectual mechanism which a century and a half later embraces all activity. In pinning down the efficiency of the steam engine and circumscribing its limitations, Carnot was unconsciously establishing a new attitude toward all kinds of change, toward the conversion of the energy stored in coal into mechanical effort, and even toward the unfolding of a leaf. Moreover, he was also establishing a science that went beyond the apparently abstract physics of Newton, one that could deal with both the abstractions of single particles and the reality of engines. All this encapsulates the span of topics in this book: we shall travel from the apparently coarse world of the early industrial engine to the delicate and refined world of the enjoyment of beauty, and in doing so we shall discover them to be one.

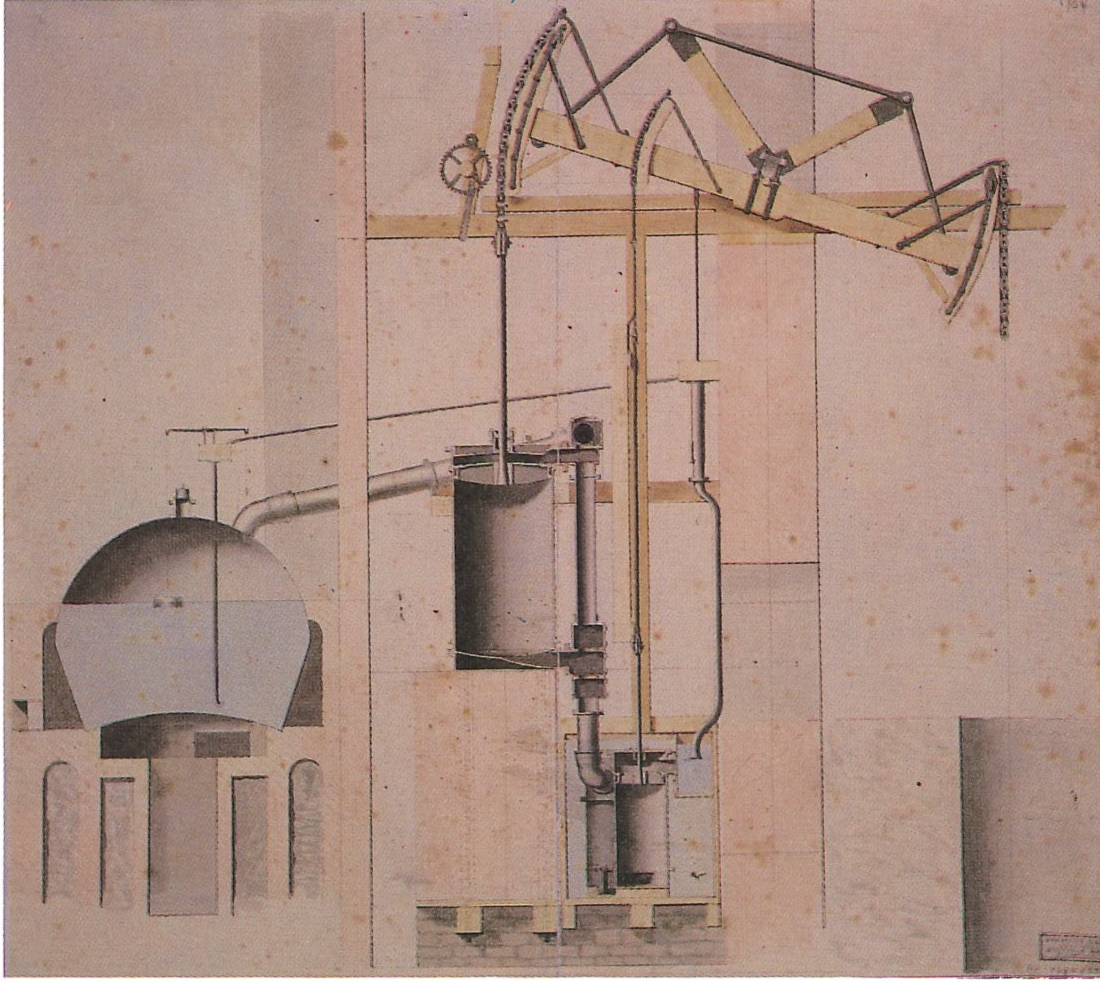

One of the earliest steam engines. Its analysis stimulated the ideas we explore in this book.#

Carnot’s work (which was summarized in his Réflexions sur la puissance motrice du feu, published in 1824) was based on a misconception; yet, even so, it laid the foundations of our subject. Carnot subscribed to the then-conventional theory that heat was some kind of massless fluid, or caloric. He took the view that the operation of a steam engine was akin to the operation of a water mill: caloric ran from the boiler to the condenser, and drove the shafts of industry as it ran, exactly as water runs and drives a mill. Just as the quantity of water remains unchanged as it flows through the mill in the course of doing its work, so (Carnot believed) the quantity of caloric remained unchanged as it did its work. He based his analysis on the assumption that the quantity of heat was conserved, and that work was generated by the engine because the fluid flowed from a hot, thermally “high” source to a cold, thermally “low” sink.

The intellectual effort needed to disentangle the truth from this misconception had to await a new generation of minds. Among the generation born around 1820, there were three people who would take up the challenge and resolve the confusion.

The Identification of Energy#

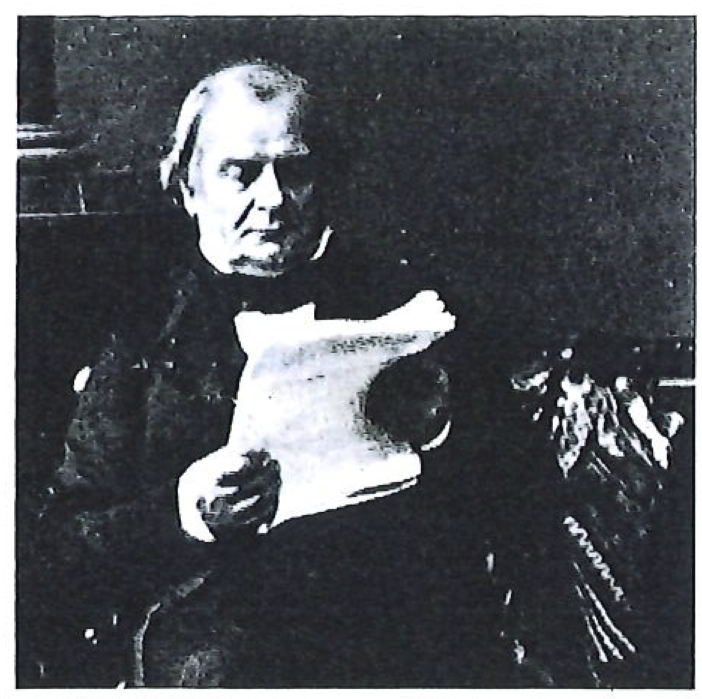

The first of these three was J. P. Joule, born in 1818. Joule was the son of a Manchester brewer. His wealth, and the brewery’s workshops, gave him the opportunity to follow his inclinations. One such inclination was to discover a general, unifying theme that would explain all the phenomena then exciting scientific attention, such as electricity, electrochemistry, and the processes involving heat and mechanics. His careful experiments, done in the 1840s, confirmed that heat was not conserved. Joule showed by increasingly precise measurements that work could be converted quantitatively into heat. This was the birth of the concept of the mechanical equivalence of heat, that work and heat are mutually interconvertible, and that heat is not a substance like water.
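Joule’s conclusion can be illustrated with a little arithmetic. In a falling-weight experiment of the kind he performed, a descending weight does work equal to its mass times the gravitational acceleration times the height fallen; if all of that work ends up as heat in a quantity of water, the water’s temperature rises by that work divided by the water’s mass and specific heat capacity. The sketch below assumes the modern value for the specific heat of water (about 4184 J per kilogram per kelvin); the function names, masses, and heights are hypothetical, not Joule’s own figures.

```python
# Hypothetical illustration of the mechanical equivalent of heat:
# all the work done by a falling weight is assumed to end up as heat in water.

G = 9.81          # gravitational acceleration, m/s^2
C_WATER = 4184.0  # approximate specific heat capacity of water, J/(kg K)


def work_from_falling_weight(mass_kg: float, height_m: float) -> float:
    """Work (J) done by a weight of mass_kg descending through height_m."""
    return mass_kg * G * height_m


def temperature_rise(work_j: float, water_kg: float) -> float:
    """Temperature rise (K) if work_j is converted entirely into heat in water_kg of water."""
    return work_j / (water_kg * C_WATER)


if __name__ == "__main__":
    # Illustrative numbers only: a 10 kg weight falling 2 m, twenty times over,
    # stirring 1 kg of water.
    w = 20 * work_from_falling_weight(10.0, 2.0)
    print(f"work = {w:.0f} J, temperature rise = {temperature_rise(w, 1.0):.3f} K")
```

With these illustrative numbers the water warms by a little under one kelvin, which hints at why such measurements had to be so painstaking: a substantial amount of work produces only a small rise in temperature.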

James Prescott Joule (1818-1889)#

Such was the experimental evidence that upset the basis for the conclusions, but not the conclusions themselves, that Carnot had drawn a generation before. Now it was time for the theoreticians to take up the challenge and to resolve the nature of heat.

William Thomson, Lord Kelvin (1824-1907)#

William Thomson was born in Belfast in 1824, moved to Glasgow in 1832, and entered the university there at age ten, already displaying the intellectual vigor that was to be the hallmark of his life. Although primarily a theoretician, he had great practical ability. Indeed, his wealth sprang from a practical talent, which he polished by a brief sojourn in Paris after he graduated from Cambridge, where he had gone in 1841. His career at Glasgow resumed in 1846, when at 22 he was appointed to the chair of natural philosophy. He divided his time between theoretical analysis of the highest quality and moneymaking of enviable proportions from his work in telegraphy. Great Britain’s preeminence in the field of international communication and submarine telegraphy can be traced to Thomson’s analysis of the problems of transmitting signals over great distances, and to his invention (and patenting) of a receiver that became the standard in all telegraph offices.

William Thomson, as is sometimes the confusing habit of the British, later matured into Lord Kelvin, by which name we shall refer to him from now on. His wealth and his practical attainments have now been largely forgotten. What remains as his lasting memorial, apart from a slab in Westminster Abbey, is his intellectual achievement.

Kelvin and Joule met at the Oxford meeting of the British Association for the Advancement of Science in 1847. From that meeting Kelvin returned with an unsettled mind. He was reported as being astounded by Joule’s refutation of the conservation of heat. Although impressed with what Joule had been able to demonstrate, he believed that Carnot’s work would be overthrown if heat were not conserved, and if there were no such thing as caloric fluid.

Kelvin began by setting forth the conceptual tangle that appeared to be confronting physics. He went on to develop the view (published in his paper On the dynamical theory of heat in 1851) that perhaps two laws were lurking beneath the surface of experience, and that in some sense the work of Carnot could survive without contradicting the work of Joule. Thus emerged the study, and the name, of thermodynamics, the theory of the mechanical action of heat, and the beginnings of the realization that Nature had two pivots of action.

The third significant mind born in the decade of the 1820s was that of Rudolf Gottlieb. Few students of thermodynamics know this name, for Gottlieb adopted a classical name, as was then a popular affectation. We, henceforth, shall refer to him as Clausius, the name by which he is universally known.

Rudolf Clausius (1822-1888)#

Clausius was born in 1822. There should be nothing surprising in the fact that these three shapers of thermodynamics were contemporaries. Thermodynamics was the object of intellectual ferment of the time, and bright minds are attracted to bright possibilities. Clausius’s first contribution cut closer to the bone than had Kelvin’s. In dealing with the theme inspired by Carnot, carried on by Joule, and extended by Kelvin, in a monograph that was titled Über die bewegende Kraft der Wärme when it was published in 1850, Clausius sharply circumscribed the problems then facing thermodynamics, and in doing so made them more open to analysis. His was the focusing mind, the microscope to Kelvin’s cosmic telescope.

Clausius also saw that the case of Carnot vs. Joule could to some extent be resolved if there were two underlying principles of Nature. He refined Carnot’s principle, and rid the world of caloric, but he went further: although he carefully insulated his general conclusions from his speculations, he did go on to speculate on how heat could be explained in terms of the behavior of the particles of which matter is composed. That was the dawn of the modern era of thermodynamics.

Carnot was born in 1796 and died of cholera in 1832; by then he had let slip his belief in the reality of caloric. Joule, Kelvin, and Clausius were born in the period 1818-1824, and their generation thrust thermodynamics onto the intellectual stage. But it needed a third generation to unify this new discipline, and to attach it to the other currents of science which by then were starting to flow.

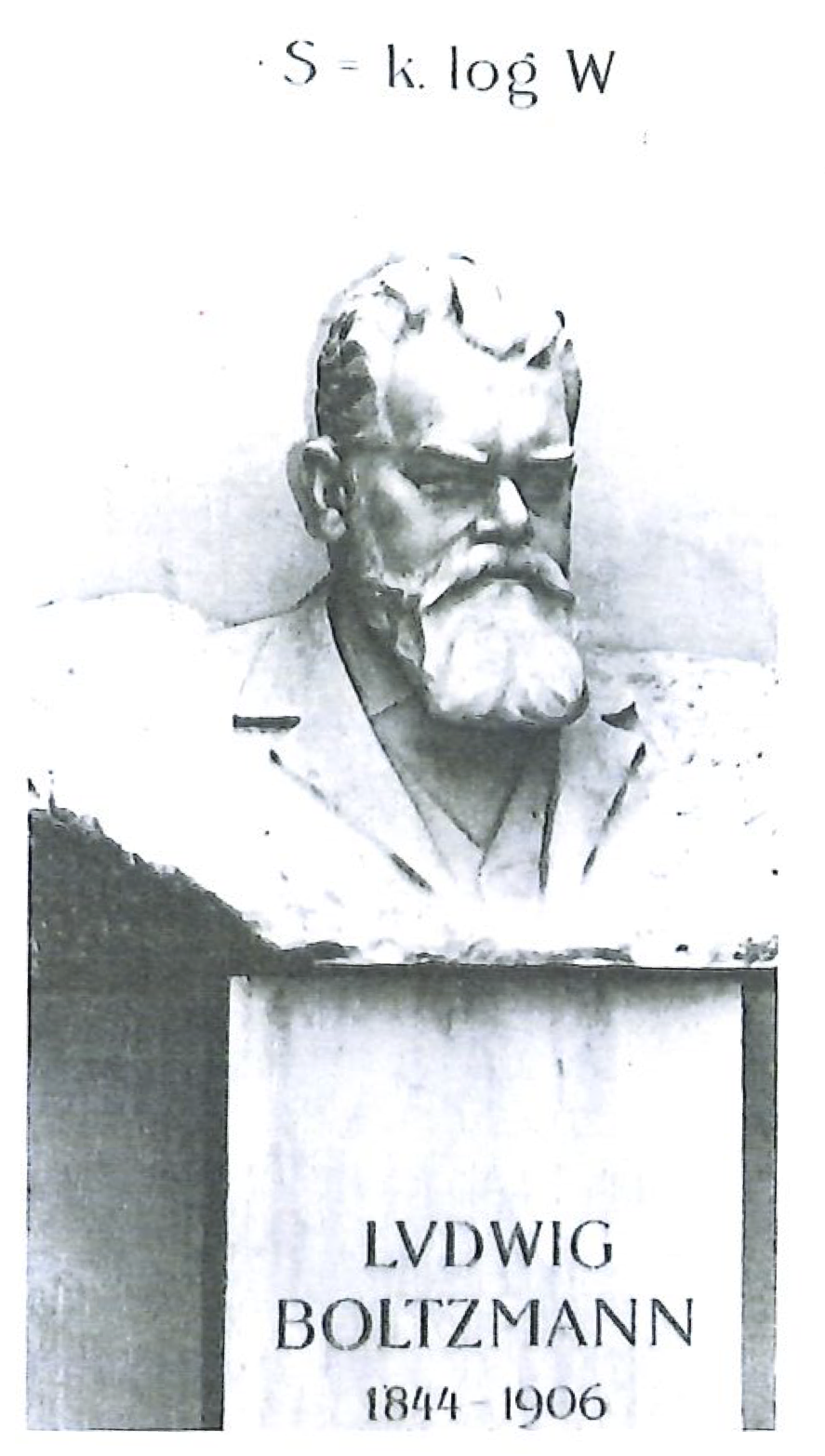

Ludwig Boltzmann was born in 1844. His contribution was to forge the link between the properties of matter in bulk, then being established by the deployment of Kelvin’s and Clausius’s thermodynamics, and the behavior of matter’s individual particles, its atoms. Kelvin, Clausius, and their contemporaries developed the seed planted by Carnot, and were able to establish a great warehouse of relations between observations. However, comprehension of these relations could come only when a mechanistic explanation in terms of particles and their properties had been established.

Boltzmann perceived that identifying the cooperation between atoms which showed itself to the observer as the properties of bulk matter would take him into the innermost workings of Nature. Though short-sighted, he saw further into the workings of the world than most of his contemporaries, and he began to discover the deep structure of change; furthermore, he did all this before the existence of atoms was generally accepted. Many of his contemporaries doubted the credibility of his assumptions and his argument, and feared that his work would dethrone the purposiveness which they presumed to exist within the workings of the deeper world of change, just as Darwin had recently dispossessed its outer manifestations. Beset by such opposition, Boltzmann eventually succumbed to unhappiness and killed himself.

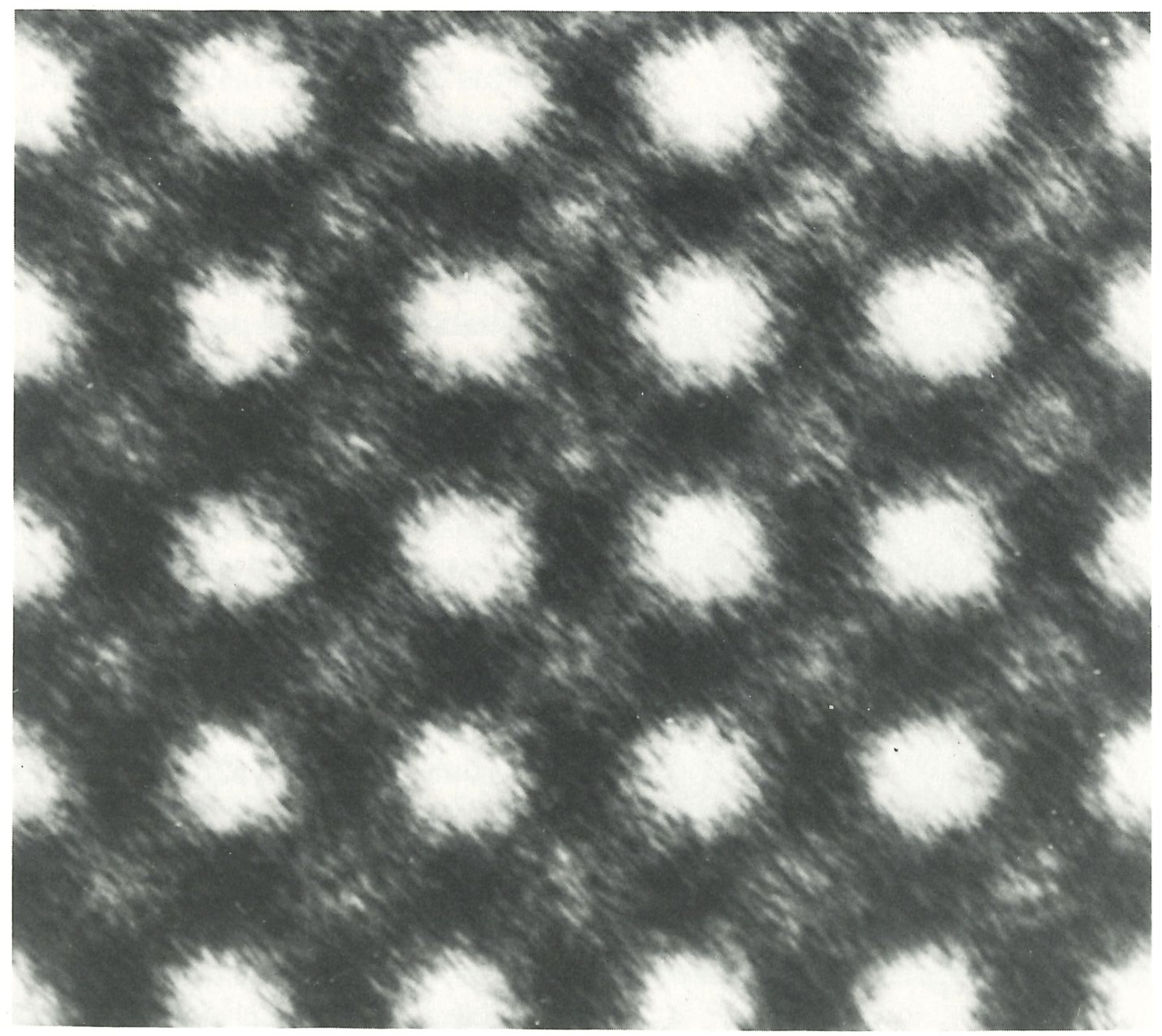

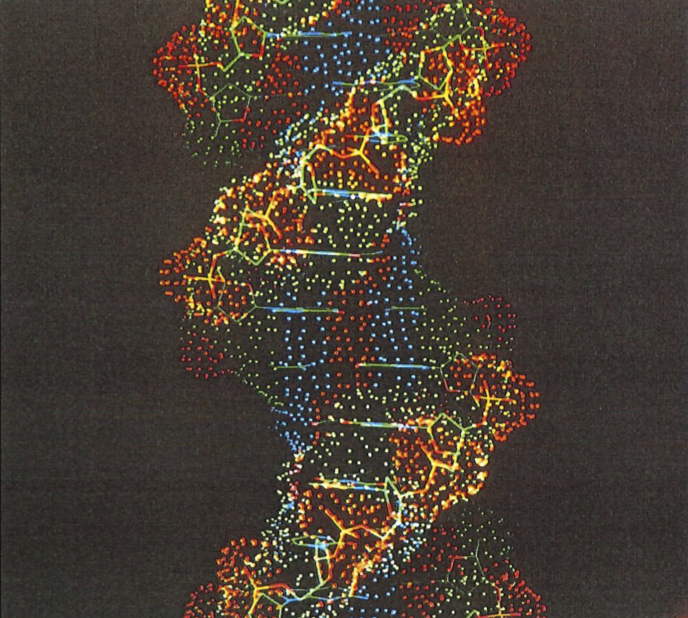

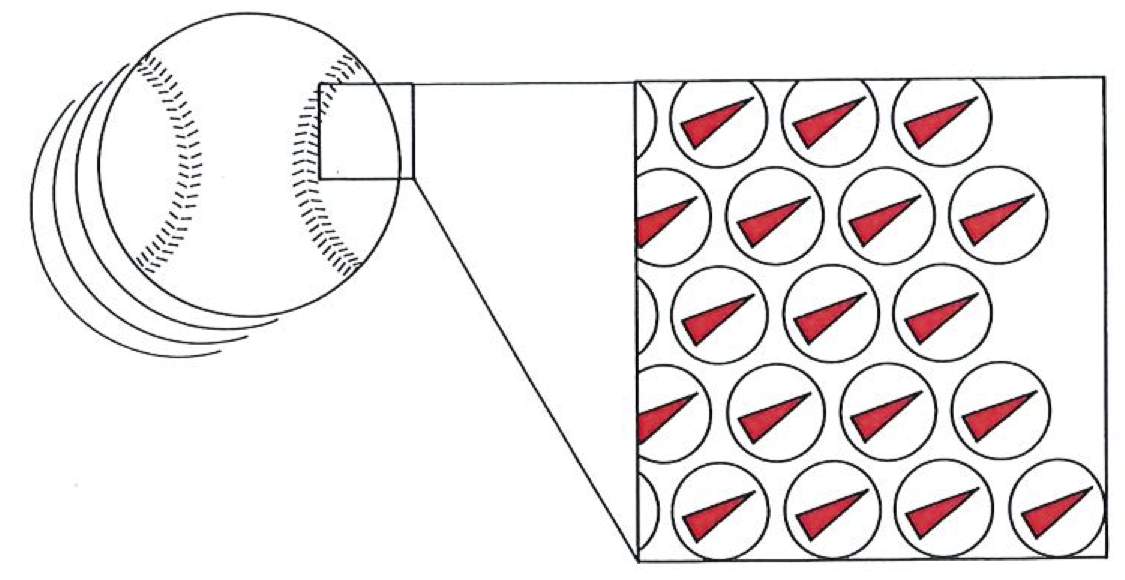

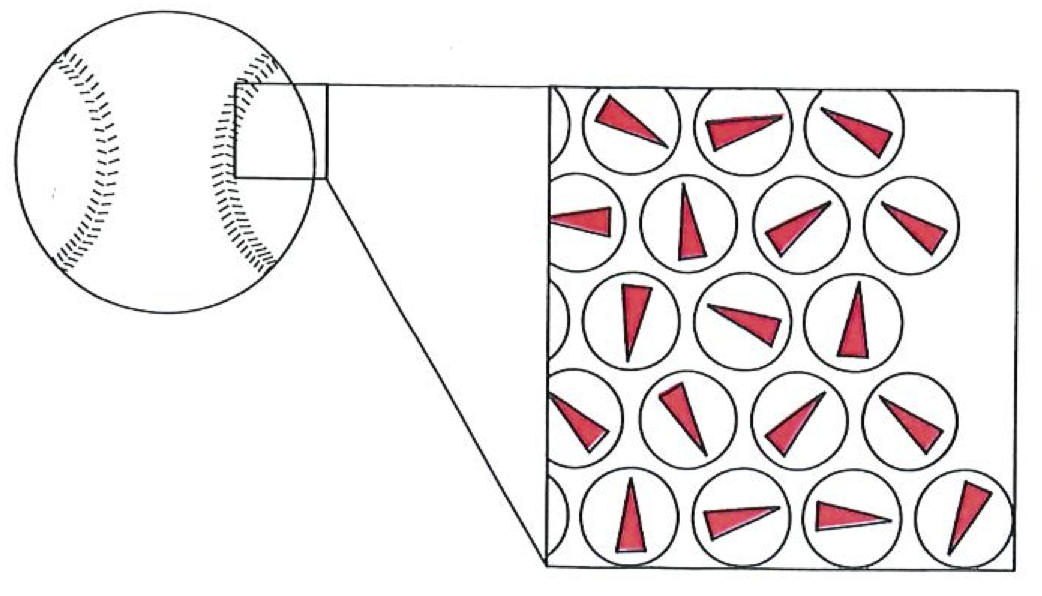

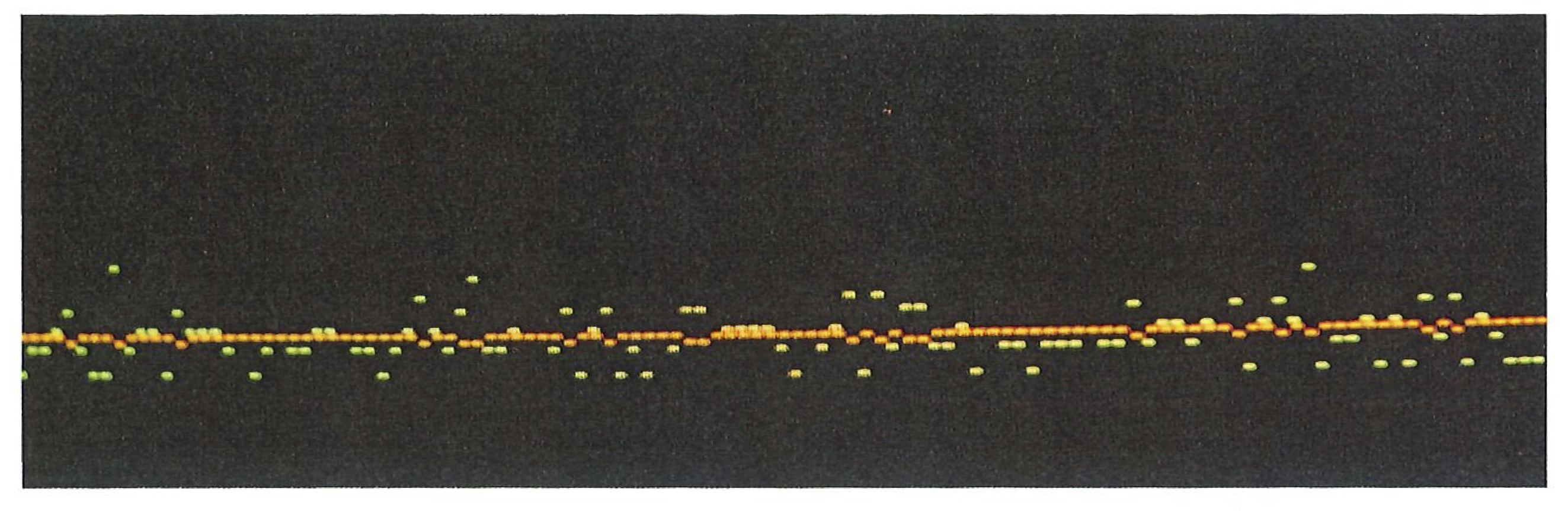

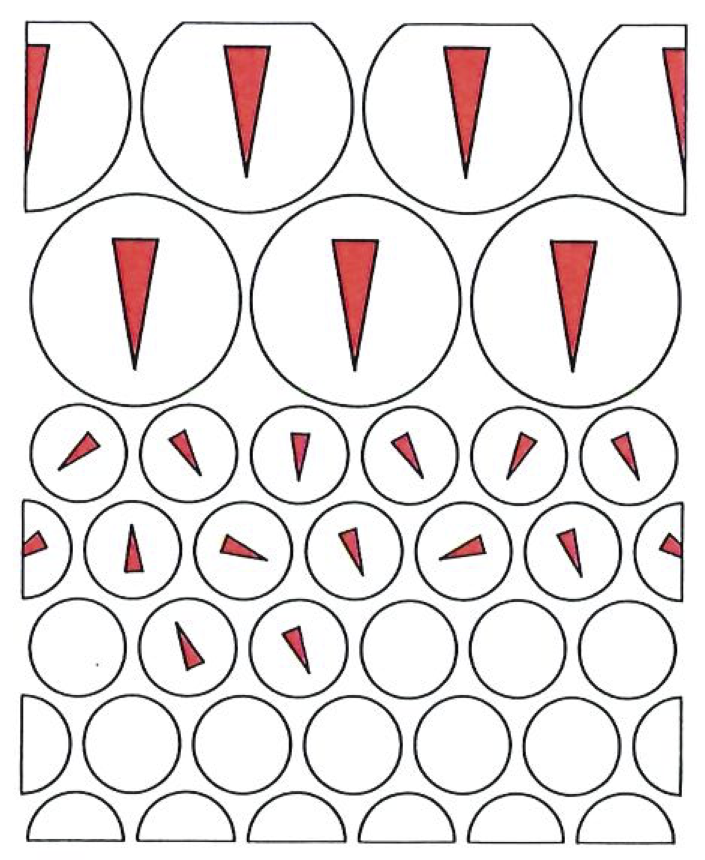

In 1906, when Boltzmann died, ideas were in the air, and techniques were becoming available, that were to win over his critics and to establish his reputation as one of the greatest of theoretical physicists. The emergence of quantum theory, together with the experimental exploration and detailed mapping of the structures of atoms, brought to the microscopic world a reality that, although out of joint with the familiar, was compelling and essentially indisputable. When that had been achieved, no one could seriously deny the existence of atoms, even though they behave in a manner that at first sight seemed (and to some still seems) strange. Now we have techniques that show both individual atoms (below) and atoms strung together into molecules (on right). The fundamental basis of Boltzmann’s viewpoint has been established beyond reasonable doubt, even though that microscopic world is far more peculiar than even he envisaged.

A photograph of atoms (specifically, of zirconium and oxygen in zirconia).#

A computer-generated image of fragments of DNA, the genetic coding molecule in the nuclei of cells.#

The aims adopted and the attitudes struck by Carnot and by Boltzmann epitomize thermodynamics. Carnot traveled toward thermodynamics from the direction of the engine, then the symbol of industrialized society: his aim was to improve its efficiency. Boltzmann traveled to thermodynamics from the atom, the symbol of emerging scientific fundamentalism: his aim was to increase our comprehension of the world at the deepest levels then conceived. Thermodynamics still has both aspects, and reflects complementary aims, attitudes, and applications. It grew out of coarse machinery; yet it has been refined to an instrument of great delicacy. It spans the whole range of human enterprise, covering the organization and deployment of both resources and ideas, particularly ideas about the nature of change in the world around us. Few contributions to human understanding are richer than this child of the steam engine and the atom.

The Laws of Thermodynamics#

The name thermodynamics is a blunderbuss term originally denoting the study of heat, but now extended to include the study of the transformations of energy in all its forms. It is based on a few statements that constitute succinct summaries of people’s experiences with the way that energy behaves in the course of its transformations. These summaries are the Laws of thermodynamics. Although we shall be primarily concerned with just one of these laws, it will be useful to have at least a passing familiarity with them all.

There are four Laws. The third of them, the Second Law, was recognized first; the first, the Zeroth Law, was formulated last; the First Law was second; the Third Law might not even be a law in the same sense as the others. Happily, the content of the laws is simpler than their chronology, which represents the difficulty of establishing properties of intangibles.

The Zeroth Law was a kind of logical afterthought. Formulated by about 1931, it deals with the possibility of defining the temperature of things. Temperature is one of the deepest concepts of thermodynamics, and I hope this book will sharpen your insight into its elusive nature. As time is the central variable in the field of physics called dynamics, so temperature is the central variable in thermodynamics. Indeed, there are several amusing analogies between time and temperature that go deeper than the accident that both begin with, and are represented by, the same letter. For now, however, we shall regard temperature as a refinement and quantitative expression of the everyday notion of “hotness”.

The First Law is popularly stated as “Energy is conserved”. That it is energy which is conserved, not heat, was the key realization of the 1850s, and the one that Kelvin and Clausius presented to the world. Indeed, the emergence of energy as a unifying concept was a major achievement of nineteenth-century science: here was a truly abstract concept coming into a dominant place in physics. Energy displaced from centrality the apparently more tangible concept of “force”, which had been regarded as the unifying concept ever since Newton had shown how to handle it mathematically a century and a half previously.

Energy is a word so familiar to us today that we can hardly grasp either the intellectual Everest it represents or the conceptual difficulty we face in saying exactly what it means. (We face the same difficulty with “charge” and “spin” and other fundamental familiarities of everyday language.) For now, we shall assume the concept of energy is intuitively obvious, and is conveyed adequately by its definition as “the capacity to do work”. The shift in the primacy of energy can be dated fairly accurately. In 1846 Kelvin was arguing that physics was the science of force. In 1847 he met and listened to Joule. In 1851 he adopted the view that, after all, physics was the science of energy. Although forces could come and go, energy was here to stay. This concept appealed deeply to Kelvin’s religious inclinations: God, he could now argue, endowed the world at the creation with a store of energy, and that divine gift would persist for eternity, while the ephemeral forces danced to the music of time and spun the transitory phenomena of the world.*

A mischievous cosmologist might now turn this argument on its head. One version of the Big Bang, the inflationary scenario, can be interpreted as meaning that the total energy of the Universe is indeed constant, but constant at zero! The positive energy of the Universe (largely represented by the energy equivalent of the mass of the particles present, that is, by the relation $E = mc^2$) is cancelled by the negative energy of gravitational attraction.

Kelvin hoped to raise the concept of energy beyond what it was becoming in the hands of the mid-nineteenth-century physicists, a mere constraint on the changes that a collection of particles could undergo without injection of more energy from outside. He hoped to establish a physics based solely on energy, one free of allusions to underlying models. He had a vision that all phenomena could be explained in terms of the transformations of energy, and that atoms and other notions were to be regarded merely as manifestations of energy. To some extent modern physics appears to be confirming his views, but it is doing so in its typical slippery way: without doing away with atoms!

The Second Law recognizes that there is a fundamental dissymmetry in Nature: the rest of this book is focused on that dissymmetry, and so we shall say little of it here. All around us, though, are aspects of the dissymmetry: hot objects cool, but cool objects do not spontaneously become hot; a bouncing ball comes to rest, but a stationary ball does not spontaneously begin to bounce. Here is the feature of Nature that both Kelvin and Clausius disentangled from the conservation of energy: although the total quantity of energy must be conserved in any process (which is their revised version of what Carnot had taken to be the conservation of the quantity of caloric), the distribution of that energy changes in an irreversible manner. The Second Law is concerned with the natural direction of change of the distribution of energy, something that is quite independent of its total amount.

The Third Law of thermodynamics deals with the properties of matter at very low temperatures. It states that we cannot bring matter to a temperature of absolute zero in a finite number of steps. As I said earlier, the Third Law might not be a true Law of thermodynamics, because it seems to assume that matter is atomic, whereas the other Laws are summaries of direct experience and are independent of any such assumption. There is thus a difference of kind between this Law and the others, and even its logical implications seem less securely founded than theirs. We shall touch on it again, but only much later.

These, then, in broad and indistinct outline, are the Laws that stake out our domain: we have identified the territory; we shall proceed to explore its details. The Laws present us, however, with an immediate problem: thermodynamics is an intrinsically mathematical subject. Clausius’s remarkably elegant functional thermodynamics is a collection of mathematical relations between observations; but with the relations gone, so too is the subject. Boltzmann’s beautiful statistical thermodynamics (some of which is carved on his tombstone) also consists of its equations; without them, we are left with nothing. This dependence on mathematics is the principal reason why thermodynamics remains so daunting.

Nevertheless, the subject is so important, and the implications of the Second Law so profound and far-reaching, that it seems worth the effort to discover a loophole in its mathematical defenses. What we shall do in the following pages, therefore, is attempt to explore thermodynamics without the mathematics. Then we shall not have the pain (a pain that many rightly regard as at least half the pleasure) of the mathematics. Although we shall necessarily remain outside the subject itself, we shall be able to share the insights it provides into the workings of the world.

But shall we remain so very much outside? Shall we be merely outsiders, tourists, while the real activities go on inside? A more optimistic attitude (and one applicable to other fields as well) is to take the view that mathematics is only a guide to understanding, a refiner of arguments and a purifier of comprehension, and not the endpoint of explanation. If that is so, then the people within are the unlucky toilers who are merely working to sharpen our wits. Whichever position you adopt, I hope the following pages will add something to your view of the world.

Revolutions of Dissymmetry#

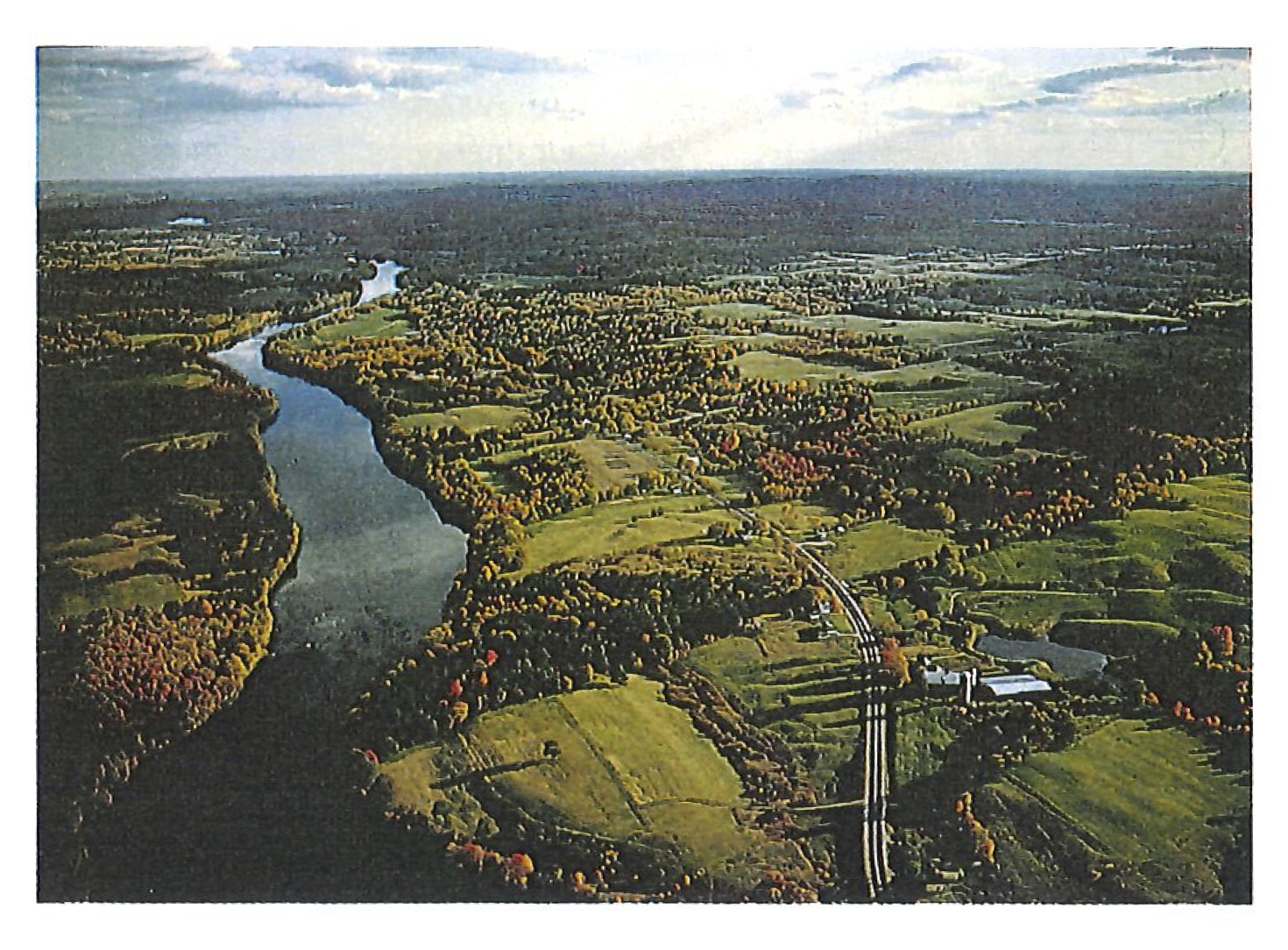

An intrinsic dissymmetry of Nature is reflected in our technological history. The conversion of stored energy and of work into heat has been commonplace for thousands of years. However, the widespread mastery of the reverse, the controlled conversion of heat and stored energy into work, dates principally from the industrial revolution. I say “principally” because work has, of course, been achieved for centuries. The conversion of wind (which is essentially a store of energy supplied by the Sun) into the motion of mills and ships is one example of such a conversion. The use of animals is another, even more indirect procedure with the same overall result. But we can regard the industrial revolution as the surge of activity released by humanity’s sudden discovery of how to exploit energy, how to convert heat into work at will, so that changes in society were no longer limited by using animals to do work and by the one-sided processes of Nature.

Primitive people learned to produce heat at will and in abundance by burning fuels. Then, apart from reliance on such natural sources as winds and oxen, it took people thousands of years to discover the much more sophisticated procedures by which the energy in fuels could be converted into work (other than by feeding the fuels as food to cattle, horses, and slaves). The founders of the industrial revolution mastered the production of work in abundance and at will.

An open hearth is a primitive way of realising the energy stored in fuels.#

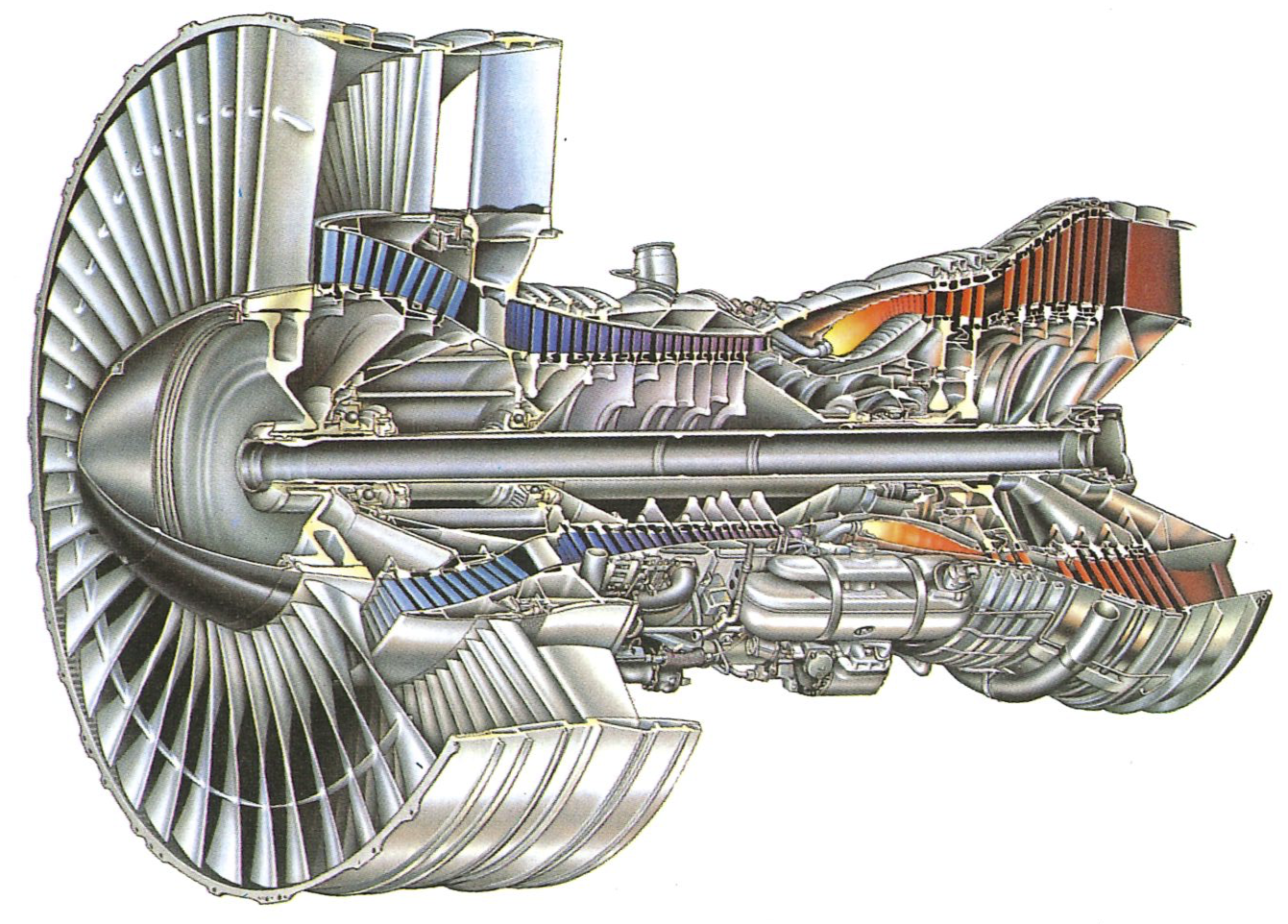

On the other hand, a jet engine, which extracts the energy of fuels as work, is much more complicated.#

The differences in the degrees of sophistication needed to produce heat, on the one hand, and work, on the other, from the same fuel are apparent as soon as we look at the equipment each process requires. In order to produce heat from a fuel, all we need is an open hearth (below), on which the unconstrained combustion of the fuel (wood, coal, or animal and vegetable oils) produces more or less abundant heat. In order to achieve work, we need a much more complicated device (above). Primitive people used the heat of their simple hearths to unlock the elements from the Earth, and from them gradually built the basis of civilization.

Although early minds were unaware of it, what their fires were releasing was the energy trapped from the Sun. (It is fitting, but coincidental, that many should have worshipped the Sun too.) At first the demands on civilization were slight, and could be satisfied by the energy which the Sun had shone down in recent years, and which had been stored in the annual growth of vegetation. But as civilization progressed, trapped solar energy that had been accumulated in former ages was increasingly exploited, and wood gave way to coal as the principal fuel. Nevertheless, this was not a technological revolution, for all that was happening was that people were mining further back in time, and retrieving the energy trapped from the Sun in an earlier epoch.

Modern civilizations continue this quest to mine the past for the harvest laid down in earlier times. Now we exploit the great stores of oil, the partially decayed remnants of marine life (which also drew its initial sustenance from the Sun). But such are our demands that we have been forced to dig beyond that time and collect the harvest of other stars. For example, the atoms of uranium we now burn in the complex hearths of nuclear reactors are the rich ashes of former stars. These atoms were formed in the death throes of early generations of stars, when light atoms were hurled against each other with such energy that they fused into progressively heavier ones. The old stars exploded, sloughing off the atoms and spreading them through space, to go through other roastings, explosions, and dispersals, until in due course they collected in the ball of rock we stand on and mine today.

But the quest for fire from the past goes on even deeper. Now we seek to mine beyond the formation of the Earth, beyond the deaths of generations of stars, and into the ash of the creation itself.

In the earliest moments of the Universe, the Big Bang shook spacetime to its foundations, and conditions of almost inconceivable tumult raged through the swelling cosmos; yet this great cataclysm managed to produce only the simplest atoms of all. The labor of the cosmic elephant resulted in the birth of a cosmic mouse: out from the tumult dropped hydrogen with a dash of helium. These elements, still superabundant, are the ashes of the Big Bang, and our attempts to achieve the controlled fusion of hydrogen into helium are aimed at capturing the energy they still store. Hydrogen is the oldest fossil fuel of all: when we master fusion, we shall be mining at the beginning of time.

The emergence and flourishing of civilizations has thus been characterized by our mining progressively further into the past for convenient, concentrated supplies of energy. Mining deeper in time, however, is merely an elaboration of the primitive discovery that energy can be unleashed as heat. However sophisticated the hearth, the combustion of fossils, whether of vegetation, stars, or the Big Bang, is merely a linear series of refinements of the basic discovery of combustion. Such refinements are not in themselves revolutions: they are sophistications (quantitative extensions) of processes that are almost as old as the hills.

Without the revolution that comes about from exploring the other side of Nature’s dissymmetry, the conversion of heat into work, we would merely be warmer, not wiser. This other side lets us tap the store of energy in fuel and extract from it motive power. Then, with motive power we can make artifacts, we can travel, and we can even communicate without traveling. Why, though, did this dissymmetry take so long to exploit? The task confronting humanity was to find a way to extract ordered motion from disordered motion, for therein lies the difference between heat and work. This is the moment when we must look more closely at the nature of the dissymmetry, and bring ourselves forward from the time before Carnot to the comprehension that came with Clausius and Kelvin.

The Identification of the Dissymmetry#

We shall use a steam engine to identify Nature’s dissymmetry. This is essentially what Carnot did. We shall then step inside the engine, so to speak, and discover the atomic basis of the dissymmetry of events. That is what Clausius identified and Boltzmann developed.

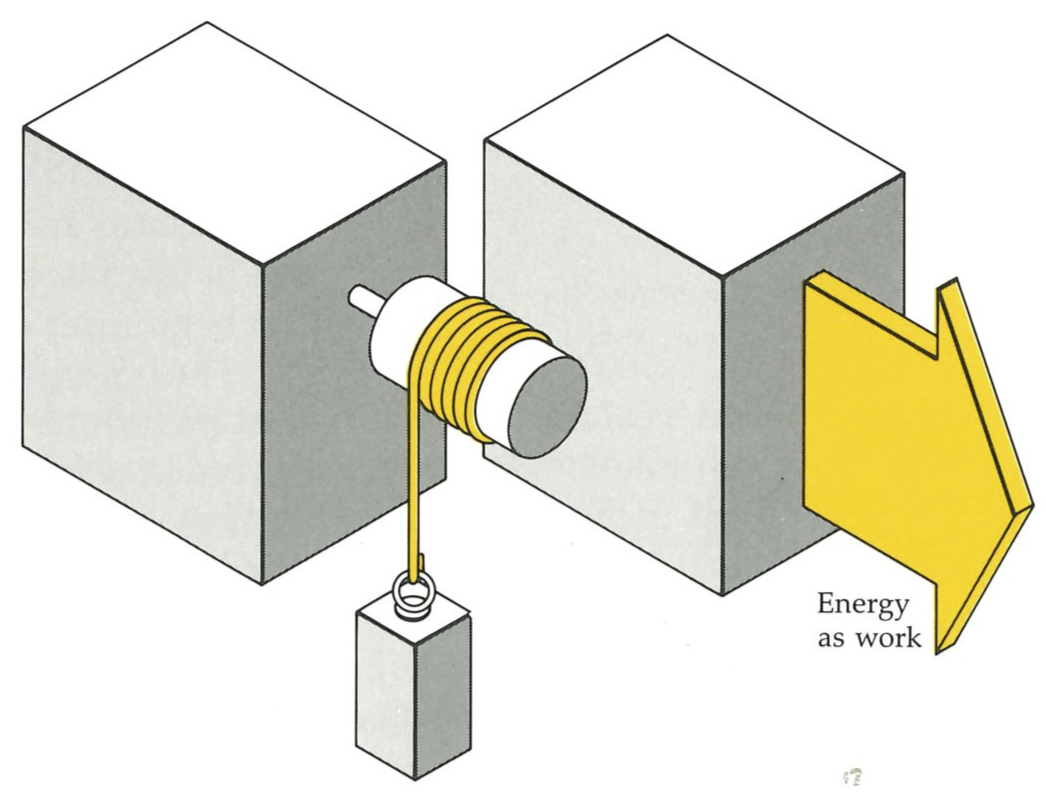

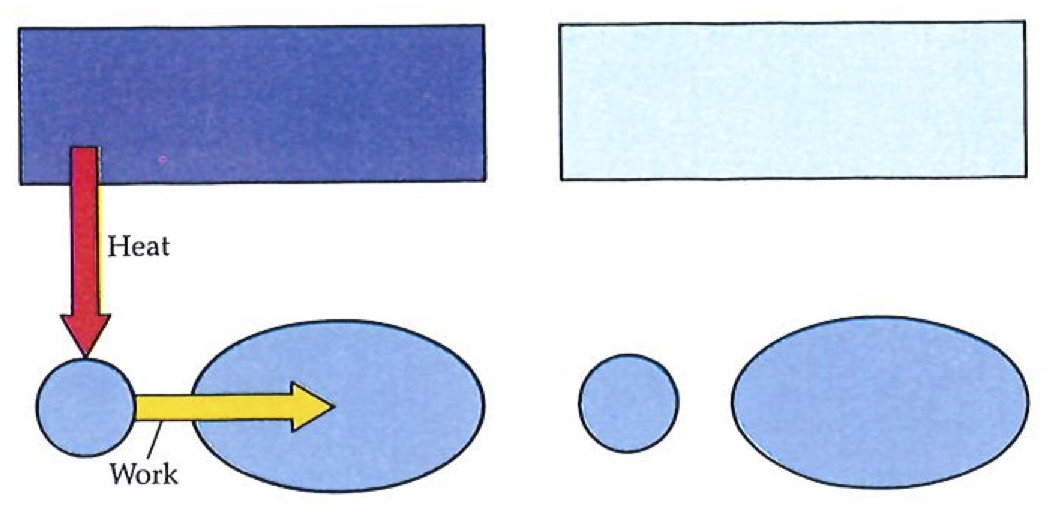

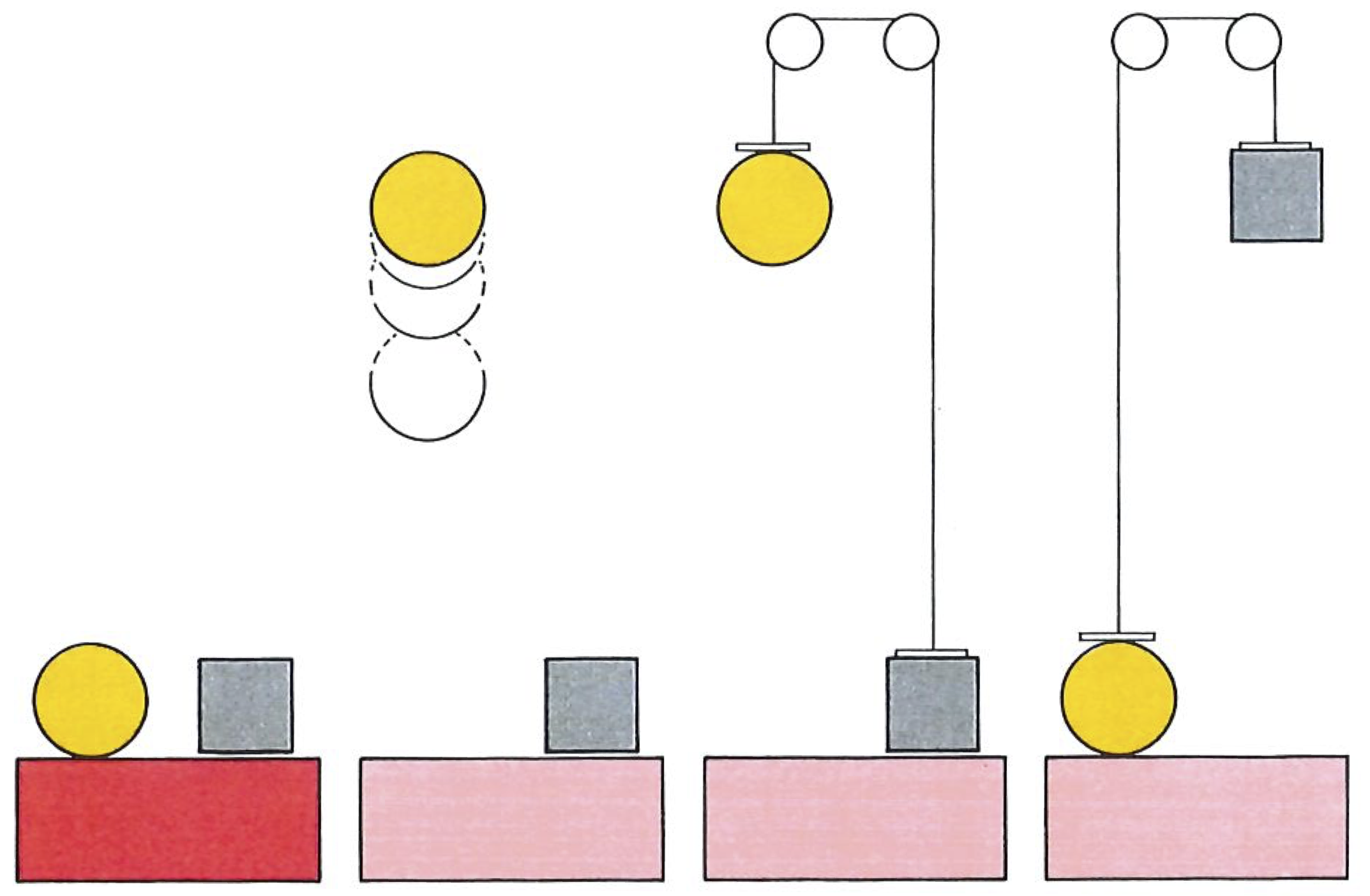

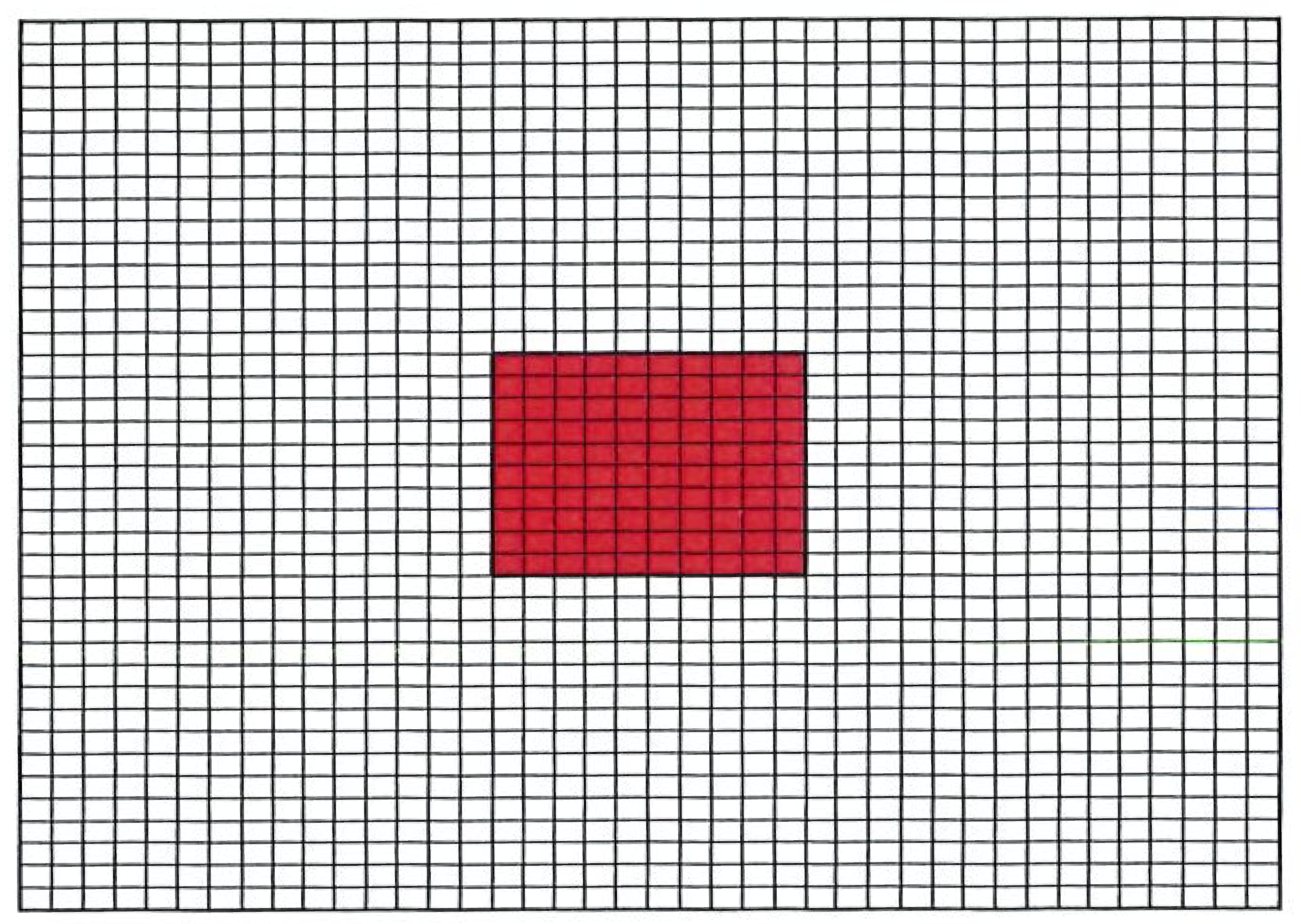

An engine is something that converts heat into work. Work is a process such as raising a weight (below). Indeed, we shall define work as any process that is equivalent to the raising of a weight. Later, as this story develops, we shall use our increased insight to build more general definitions and find the most all-embracing definition right at the end. That is one of the delights of science: the more deeply a concept is understood, the more widely it casts its net. Heat we shall come to later.

An engine should be capable of operating indefinitely, and of going on making the conversion for as long as the factory operates or for as long as the journey lasts. Single-shot conversions, such as the propulsion of a cannonball by the combustion of a charge of powder, produce work, but are not engines in this sense. An engine is a device that operates cyclically, and returns periodically (once in each revolution, or once in several revolutions, of a crankshaft, for instance) to its initial condition. Then it can go on, in principle, forever, living off the energy supplied by the hot source, which in turn is supplied with energy by the burning fuel.

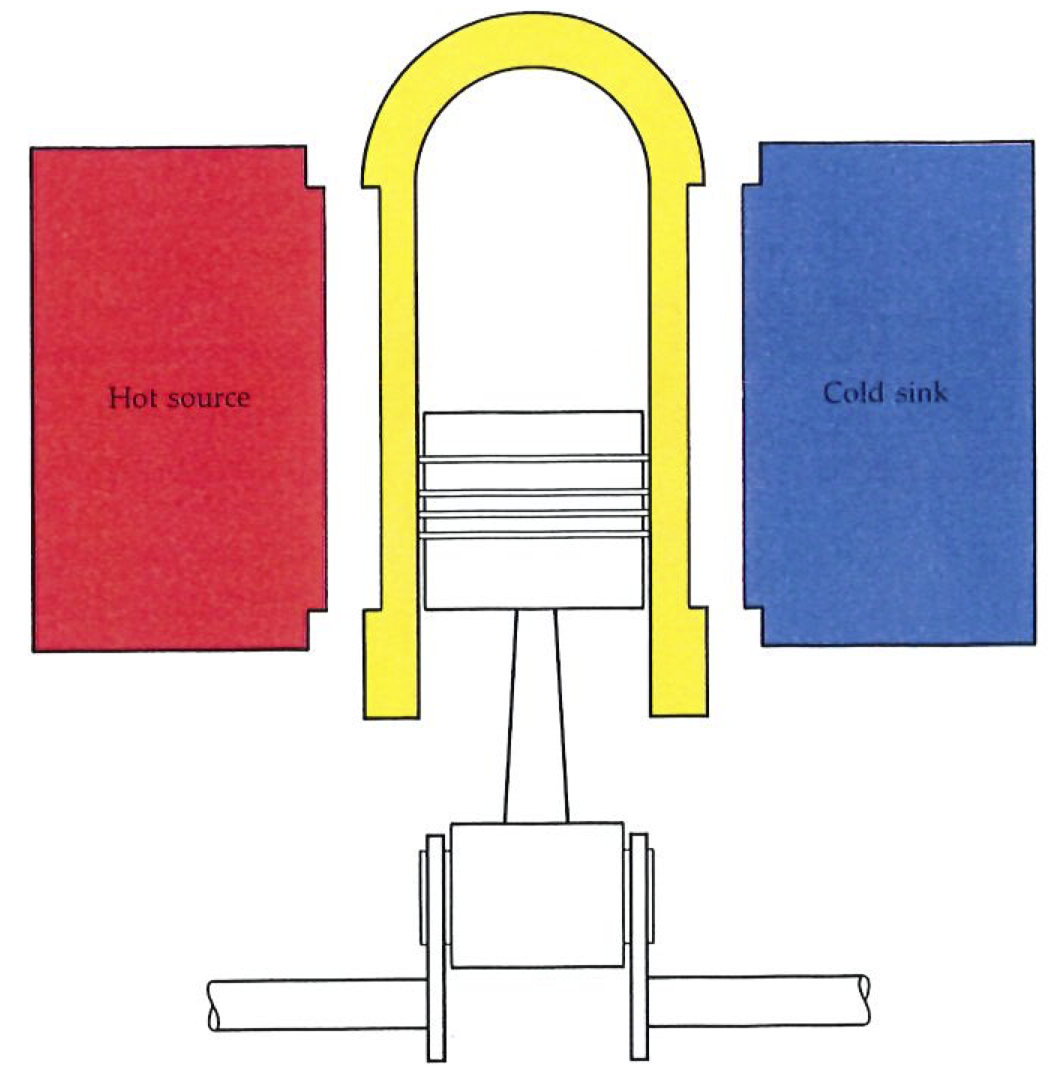

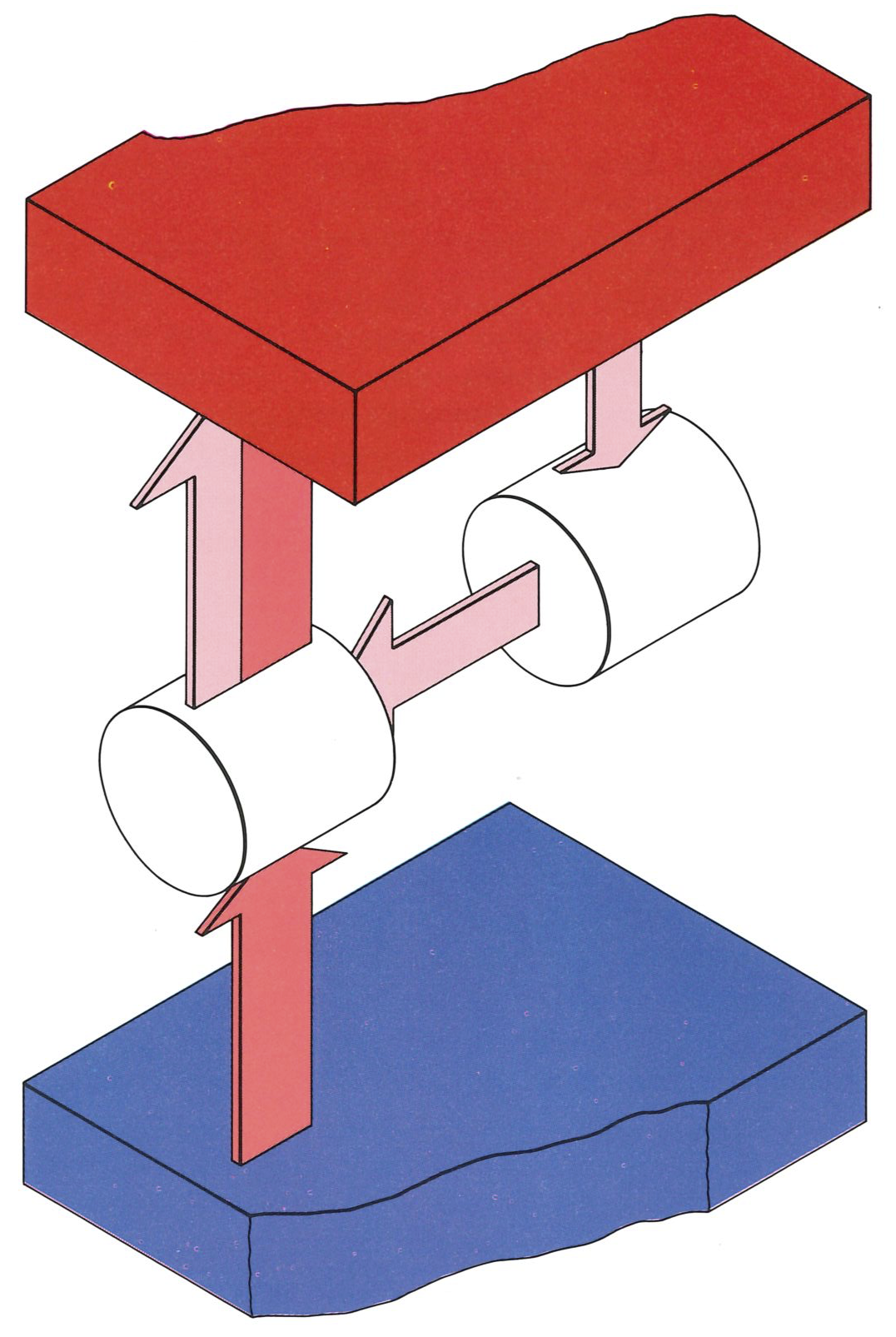

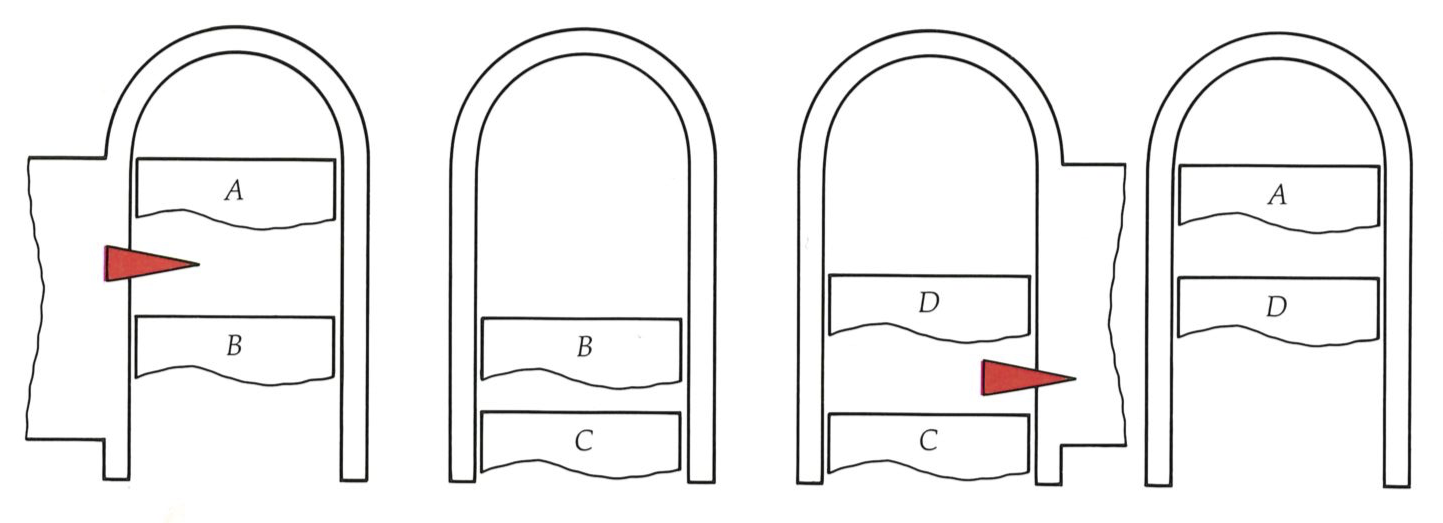

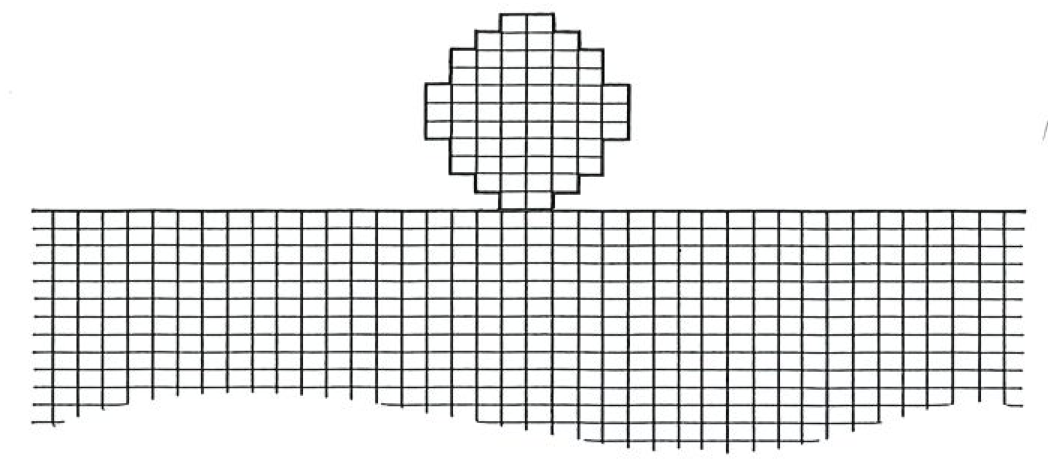

Engines and the cycles they go through in the course of their operation may be as intricate as we please. A sequence of steps known as the Carnot cycle is a convenient starting point. The cycle is an abstract idealization, and very simple. Nevertheless, it may be elaborated (as we shall see later) to reproduce the stages that real engines such as gas turbines and jet engines go through, and it captures the essential feature of all engines. The engine itself (as illustrated below) consists of a gas trapped in a cylinder fitted with a piston. The cylinder can be put in contact with a hot source (steam from a boiler) and with a cold sink (cooling water), or it may be left completely insulated. Note that the operation does not capture the actual working of a steam engine, because in the Carnot engine steam is not admitted directly into the cylinder.

We can follow the course of the engine as it goes through its cycle by following the pressure changes inside the cylinder. A diagram showing the pressure at each stage is called an indicator diagram. Indicator diagrams were used by James Watt, but he kept them a trade secret; the French scientist Emile Clapeyron introduced them into the discussion of the Carnot cycle. Carnot indeed owes a deep debt of gratitude to Clapeyron, for not only did the latter refine his cycle, make a mathematical analysis of it, and portray it in terms of an indicator diagram, but it was Clapeyron’s paper “Mémoire sur la puissance motrice de la chaleur” (yet another variation on the theme), published in 1834, that kept Carnot’s work alive and brought it to the attention of others, particularly of Kelvin.
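An indicator diagram earns its keep because the net work an engine delivers in one cycle equals the area enclosed by the loop that the pressure traces out against the volume. As a minimal sketch, assuming the diagram has been sampled as a list of (volume, pressure) points joined by straight lines, the shoelace formula for the area of a polygon gives that work; the function name and the sample points below are hypothetical.

```python
def net_work(points):
    """Net work done by the gas around a closed loop of (volume, pressure) samples.

    The samples are treated as the corners of a polygon, and the loop is closed
    by joining the last point back to the first. The result equals the integral
    of p dV around the loop: positive when the loop runs clockwise in the
    (V, p) plane, as in an engine. With m^3 and Pa, the answer is in joules.
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points to enclose an area")
    twice_signed_area = 0.0
    for i in range(n):
        v1, p1 = points[i]
        v2, p2 = points[(i + 1) % n]  # wrap around to close the loop
        twice_signed_area += v1 * p2 - v2 * p1
    # The shoelace sum is positive for counterclockwise loops, whereas the work
    # integral is positive for clockwise ones, hence the change of sign.
    return -twice_signed_area / 2.0


if __name__ == "__main__":
    # Hypothetical rectangular loop: expand at p = 2, then compress at p = 1.
    loop = [(1.0, 2.0), (2.0, 2.0), (2.0, 1.0), (1.0, 1.0)]
    print(net_work(loop))  # 1.0
```

The rectangular loop in the example encloses one unit of area and so yields one unit of work; traced the other way round it would give minus one, the signature of a device that consumes work rather than producing it. A real diagram would need many samples to follow its curved sides closely.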

The Carnot engine consists of a working gas confined to a cylinder which may be put in thermal contact with hot or cold reservoirs, or thermally insulated, at various stages of the cycle of operations. Each stage of the cycle is performed quasistatically (infinitely slowly), and in a manner which ensures that the maximum amount of work is extracted. There are no losses arising from turbulence, friction, and so on.
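Because each stage is quasistatic and free of losses, the cycle can be followed stage by stage with a handful of formulas, provided we make an assumption the text does not: that the working gas is ideal, with a constant ratio of heat capacities (here γ = 5/3, the value for a monatomic gas). The minimal sketch below labels the corners of the cycle A to D for illustration, computes the heat absorbed from the hot source and the heat rejected to the cold sink, and takes their difference as the net work. The ratio of work to heat absorbed should come out as 1 - T_cold/T_hot, the standard result for an ideal reversible engine; the function name, temperatures, and volumes are hypothetical.

```python
import math

R = 8.314  # molar gas constant, J/(mol K)


def carnot_cycle(n_mol, t_hot, t_cold, v_a, v_b, gamma=5.0 / 3.0):
    """Follow one ideal-gas Carnot cycle A -> B -> C -> D -> A.

    A -> B: isothermal expansion at t_hot (heat absorbed from the hot source)
    B -> C: adiabatic expansion, the gas cools from t_hot to t_cold
    C -> D: isothermal compression at t_cold (heat rejected to the cold sink)
    D -> A: adiabatic compression back to t_hot
    Returns (heat_in, heat_out, net_work) in joules.
    """
    if not (t_hot > t_cold > 0 and v_b > v_a > 0):
        raise ValueError("need t_hot > t_cold > 0 and v_b > v_a > 0")
    # Along a reversible adiabat of an ideal gas, T * V**(gamma - 1) is constant.
    ratio = (t_hot / t_cold) ** (1.0 / (gamma - 1.0))
    v_c = v_b * ratio
    v_d = v_a * ratio
    # Isothermal ideal-gas step: heat exchanged equals work, n R T ln(V_big / V_small).
    heat_in = n_mol * R * t_hot * math.log(v_b / v_a)
    heat_out = n_mol * R * t_cold * math.log(v_c / v_d)
    # The adiabatic steps exchange no heat and their work contributions cancel,
    # so the net work is the heat absorbed minus the heat rejected.
    net_work = heat_in - heat_out
    return heat_in, heat_out, net_work


if __name__ == "__main__":
    q_in, q_out, w = carnot_cycle(1.0, 500.0, 300.0, 0.01, 0.02)
    print(f"{w / q_in:.3f} {1 - 300.0 / 500.0:.3f}")  # both print as 0.400
```

Even in this idealized, lossless engine only part of the heat drawn from the hot source emerges as work; the rest must be handed on to the cold sink.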

Emile Clapeyron (1799-1864)#

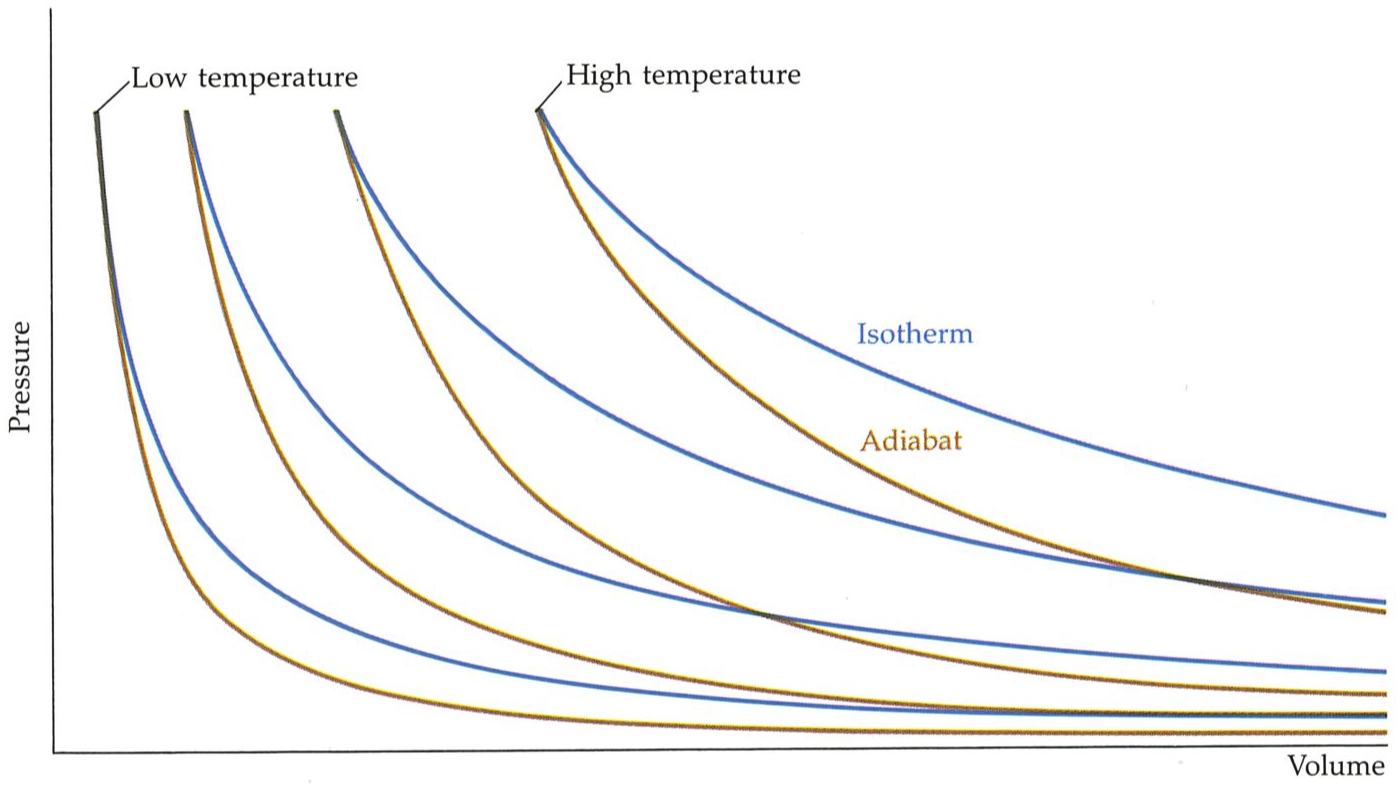

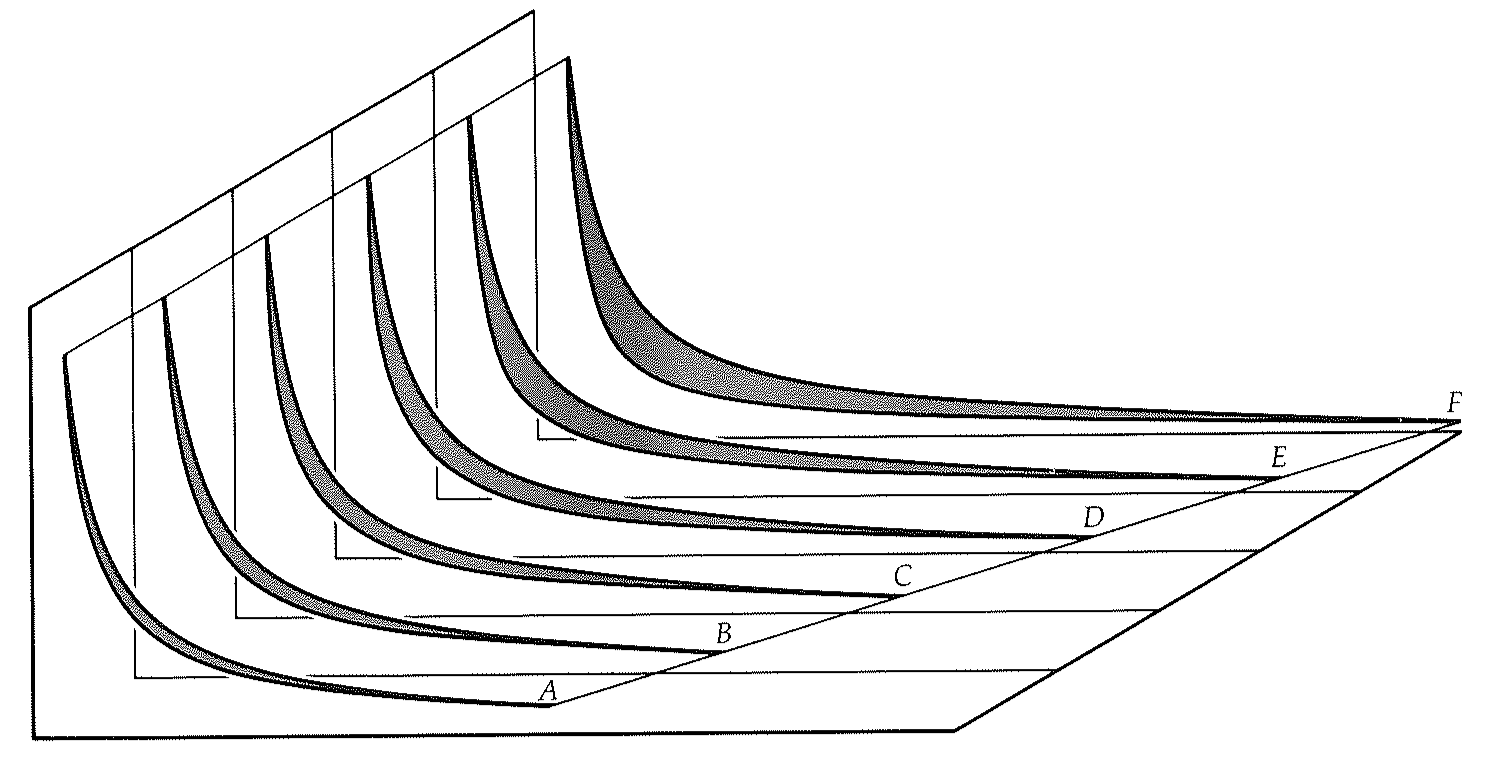

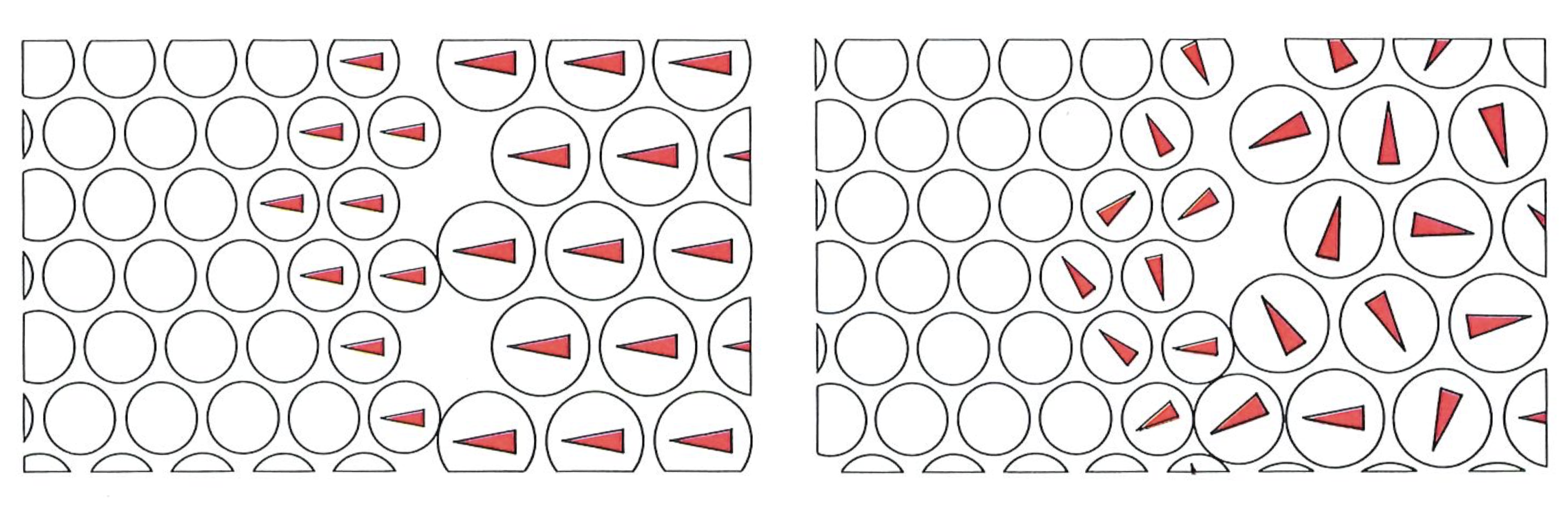

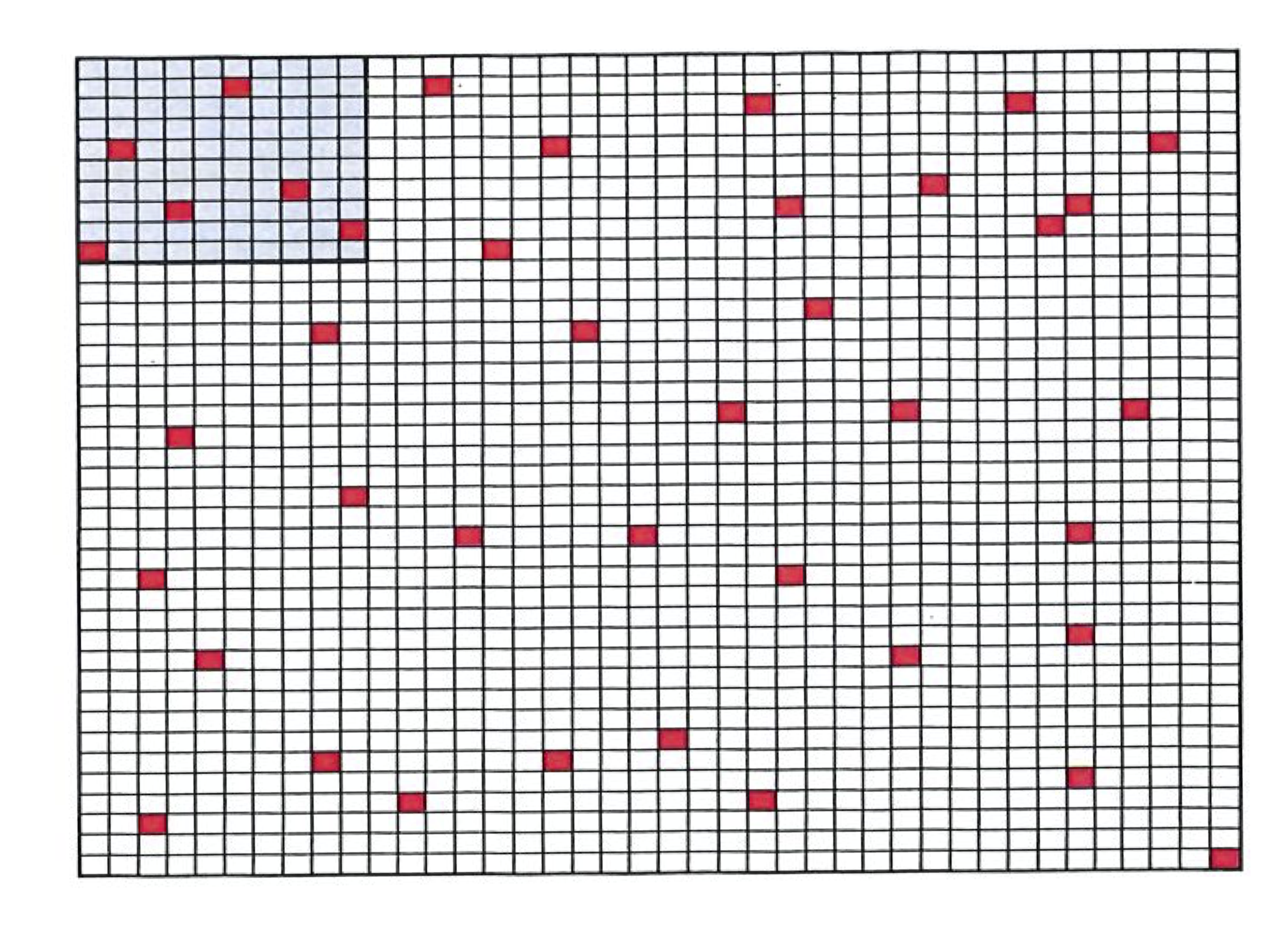

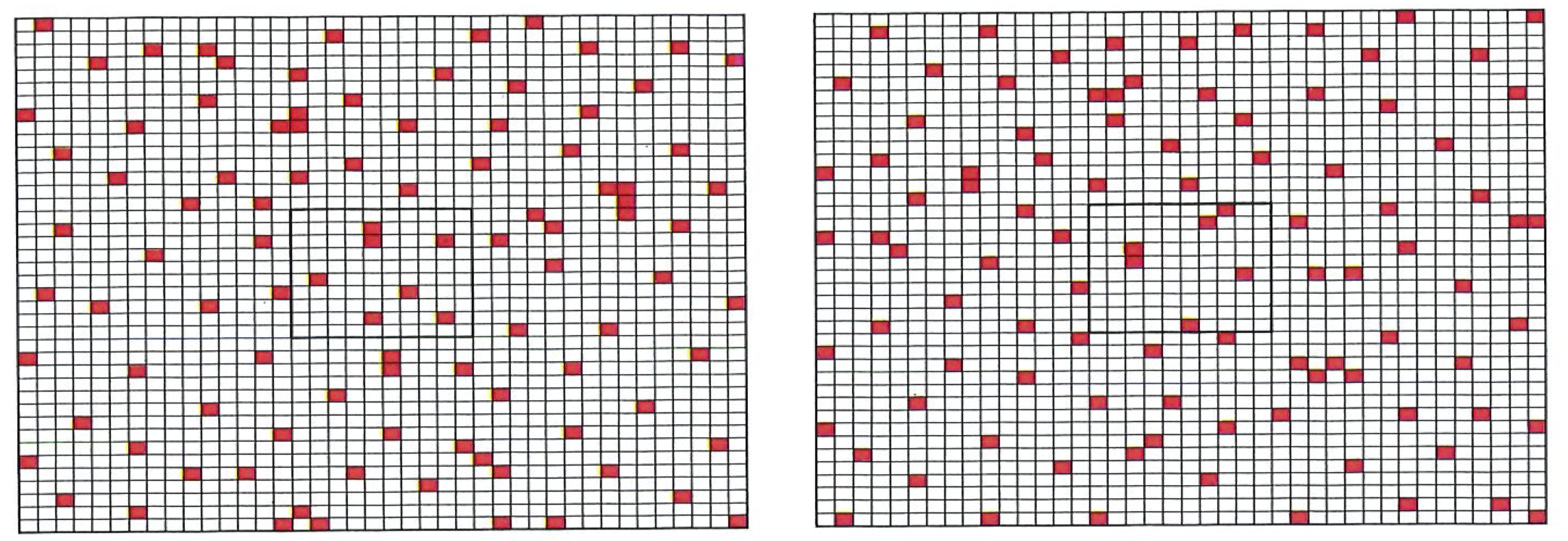

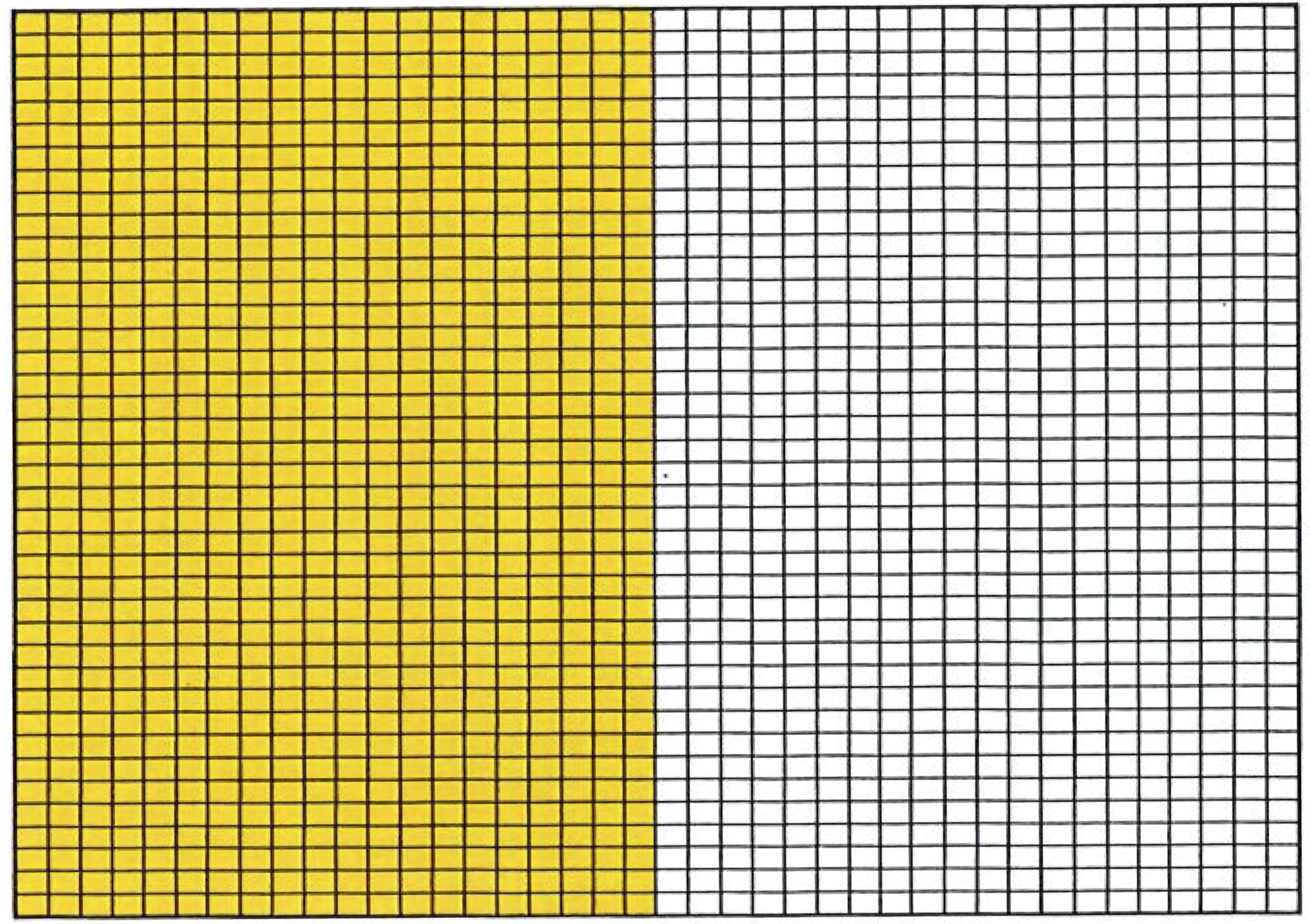

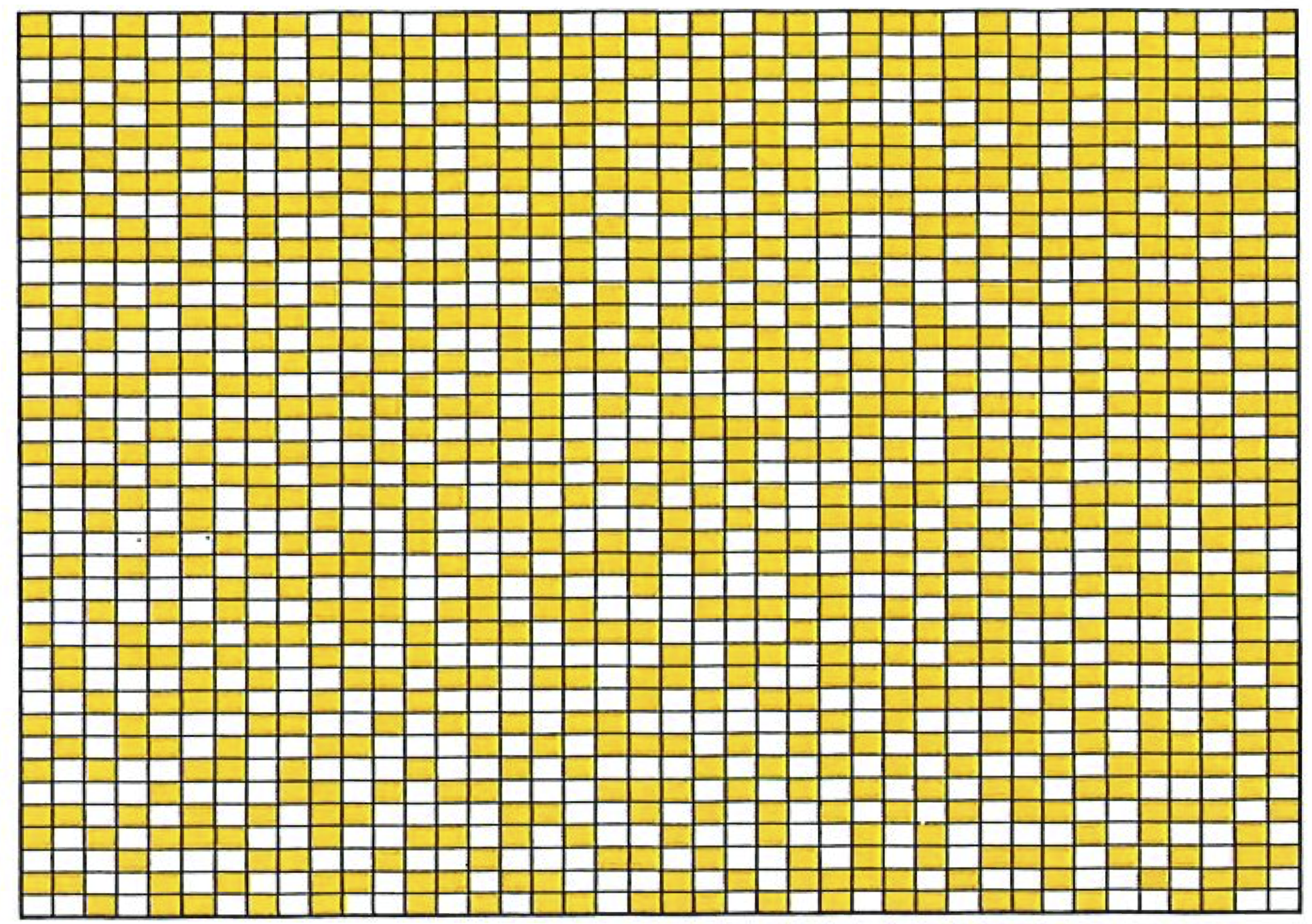

In order to follow the engine through its cycle, we need to know some elementary properties of gases. The first is that as a given amount of gas is confined to ever-smaller volumes (as a piston is driven in), its pressure increases. The magnitude of the increase depends on how the compression is carried out. If the gas is kept in contact with a heat sink (a thermal reservoir) of some kind (for instance, a water bath or a great block of iron), then its temperature remains the same, and the compression is called isothermal. Under these circumstances the rise in pressure follows one of the curves (isotherms) shown in the figure on the facing page. (These isotherms are mathematically hyperbolas, because at constant temperature the pressure is inversely proportional to the volume.) If, on the other hand, the gas is thermally insulated from its surroundings, the compression is called adiabatic.

The experimental observation is that during an adiabatic compression the temperature of a gas rises. (We shall see the atomic reason for that later; for now, and throughout this chapter, we are keeping to the world of appearances and not delving into mechanisms.) The rise in temperature of the gas amplifies the rise in pressure that results from the confinement itself (because pressure increases with temperature); so, during an adiabatic compression, the pressure of a gas rises more sharply than during an isothermal compression (as is also illustrated above).

The relation between the pressure and the volume of a gas depends on the conditions under which the expansion or compression takes place. If the temperature is held constant, the relation is expressed by Boyle’s law, that the pressure is inversely proportional to the volume: this gives rise to the isotherms in the illustration. On the other hand, if the sample is thermally isolated, its temperature rises as it is compressed (and falls as it expands), and the dependence is as shown by the adiabats.#

The increasing pressure of a gas as its volume is reduced isothermally, and the even sharper increase when the compression is adiabatic, are reversed when the gas expands. If the expansion is isothermal, then the pressure drops as the volume increases; if the expansion is adiabatic, then the pressure falls more sharply because the gas also cools. This is also shown in the figure above, which therefore summarizes most of the essential features of a gas.
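The contrast between the two kinds of curve can be made concrete with a short calculation. This is a minimal sketch, not anything from the book. It assumes an ideal gas, for which Boyle's law gives pV = constant along an isotherm and pV^γ = constant along an adiabat, with γ (the ratio of the gas's heat capacities) taken as 5/3, the value for a monatomic gas. The function names are illustrative.

```python
# Compare isothermal and adiabatic compression of an ideal gas.
# Assumptions (not from the text): ideal gas, gamma = 5/3 (monatomic).

GAMMA = 5.0 / 3.0  # ratio of heat capacities Cp/Cv, assumed monatomic


def isothermal_pressure(p0: float, v0: float, v: float) -> float:
    """Boyle's law: p * V stays constant at fixed temperature."""
    return p0 * v0 / v


def adiabatic_pressure(p0: float, v0: float, v: float, gamma: float = GAMMA) -> float:
    """Thermally isolated ideal gas: p * V**gamma stays constant."""
    return p0 * (v0 / v) ** gamma


def adiabatic_temperature(t0: float, v0: float, v: float, gamma: float = GAMMA) -> float:
    """Along an adiabat T * V**(gamma - 1) is constant, so compression warms the gas."""
    return t0 * (v0 / v) ** (gamma - 1.0)


if __name__ == "__main__":
    p0, v0, t0 = 1.0, 1.0, 300.0  # arbitrary starting state (bar, liters, kelvin)
    for v in (1.0, 0.75, 0.5, 0.25):
        print(f"V={v:4.2f}  isothermal p={isothermal_pressure(p0, v0, v):6.3f}  "
              f"adiabatic p={adiabatic_pressure(p0, v0, v):6.3f}  "
              f"adiabatic T={adiabatic_temperature(t0, v0, v):6.1f} K")
```

Halving the volume doubles the pressure along the isotherm but multiplies it by 2^(5/3), roughly 3.2, along the adiabat. Meanwhile the temperature of the insulated gas rises by a factor of 2^(2/3), roughly 1.6. On expansion the same functions give the reverse behavior: the adiabatic pressure falls below the isothermal one.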

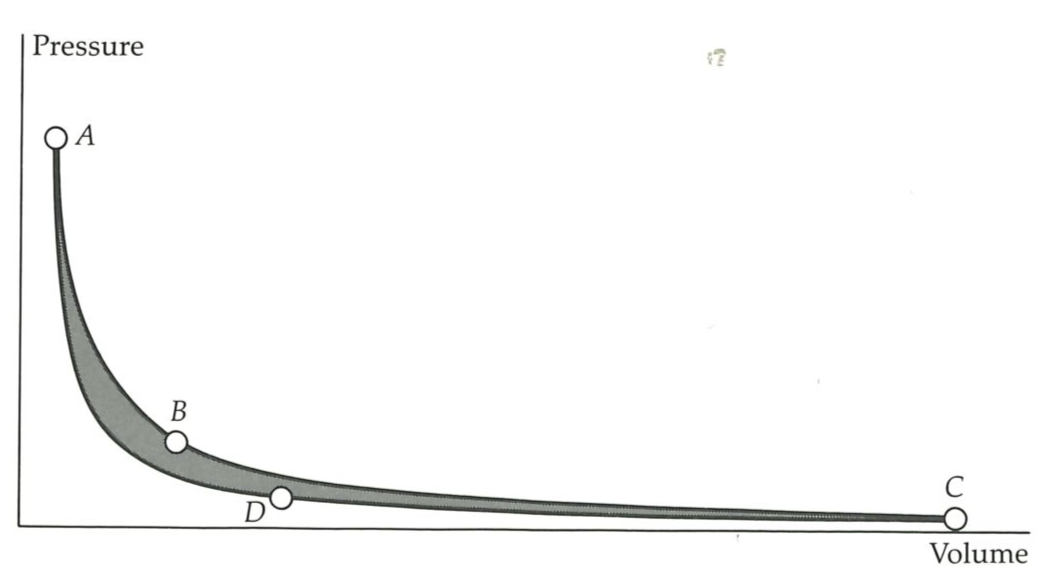

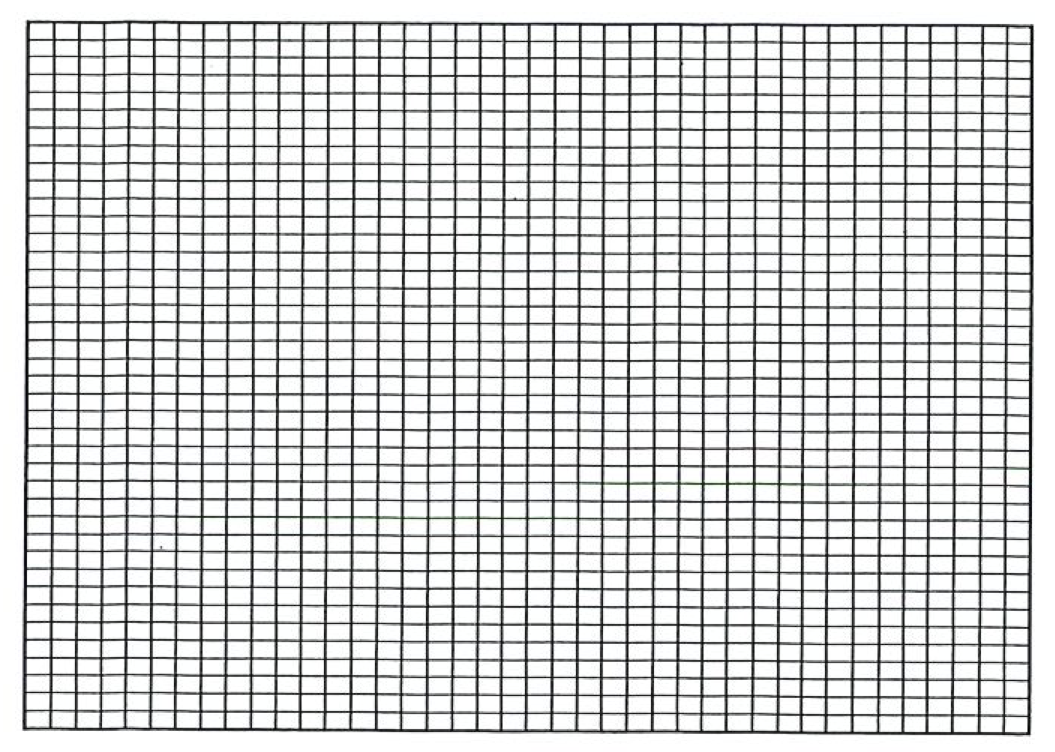

The four steps of the Carnot cycle are illustrated at the top of the next page, and the behavior of the pressure of the confined gas is illustrated in the indicator diagram at the bottom of the next page. The horizontal axis in the latter represents the location of the piston, but because the cylinder is uniform it also represents the volume available to the gas; so from now on we shall interpret it as the volume.

The initial state of the engine is represented by the point A on the indicator diagram.

The Carnot cycle consists of four stages: A to B, B to C, C to D, and D to A; two of them are isothermal and two are adiabatic.

The first stage of the cycle is the expansion of the gas while the cylinder remains in contact with the hot source. The high-pressure gas pushes back the piston, and so the crank rotates. This is a power stroke of the engine. This step is isothermal (all at the same temperature); so, in order to overcome the tendency of the gas to cool as it expands, energy must flood in from the hot source. Therefore, not only is this the power stroke of the engine, it is also the step that sucks in energy (absorbs heat) from the hot source.

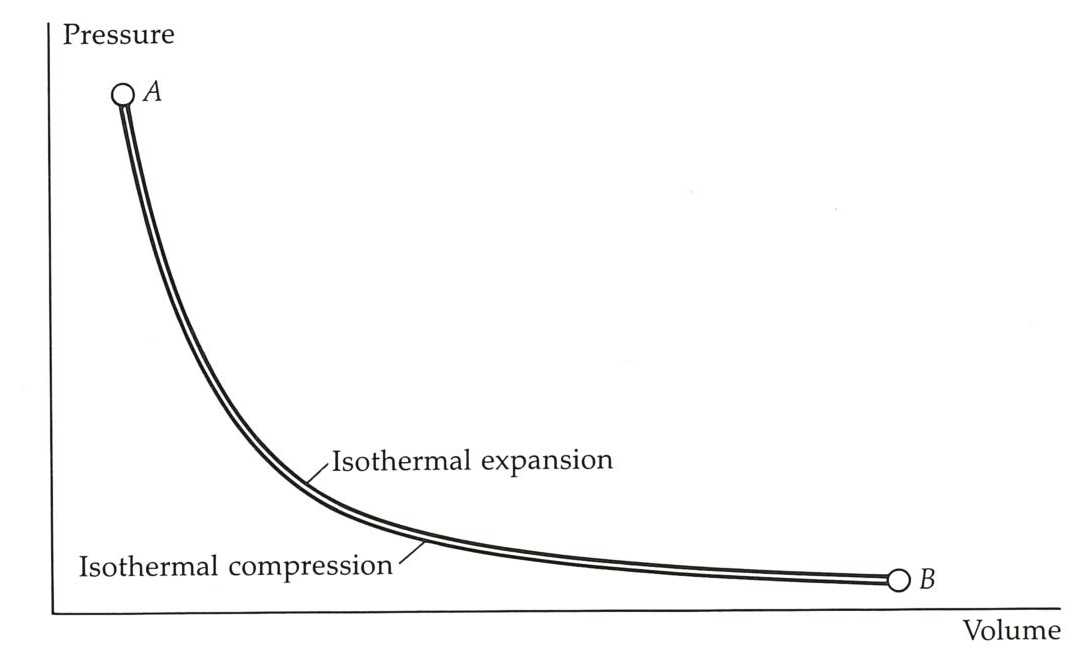

The designer of this engine could allow the crankshaft to continue to rotate, and so to return the piston to its initial position. Then, if the compression is also isothermal, the gas will be restored to its initial state. This would certainly fulfil one criterion: the cycle would be complete, the gas restored to its initial state, and the engine ready to go on again. We shall call this the Atkins cycle. Such a cycle is plainly useless, for in order to push the piston back to its starting position, exactly the same work needs to be done by the external, initially hopeful, but now disappointed user as had been obtained from the engine in the power stroke! This is illustrated in the figure below, where the rotating crankshaft takes the state of the gas from A to B and then back along the same isotherm to A.
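The uselessness of the Atkins cycle can be checked with the standard expression for the work done by an ideal gas in a quasistatic isothermal change, nRT ln(V_final/V_initial). The sketch below assumes an ideal gas (the book does not specify one) and uses hypothetical function names. Whatever volumes and temperature we choose, the return stroke costs exactly what the power stroke delivered.

```python
import math

R = 8.314  # molar gas constant, J/(K mol)


def isothermal_work_by_gas(n: float, t: float, v_start: float, v_end: float) -> float:
    """Work (J) done BY n moles of ideal gas in a quasistatic isothermal change
    at temperature t (kelvin): positive for expansion, negative for compression."""
    return n * R * t * math.log(v_end / v_start)


def atkins_cycle_net_work(n: float, t: float, v_small: float, v_large: float) -> float:
    """Power stroke from v_small to v_large, then return stroke back to v_small,
    both at the SAME temperature t. Returns the net work delivered to the user."""
    power_stroke = isothermal_work_by_gas(n, t, v_small, v_large)
    return_stroke = isothermal_work_by_gas(n, t, v_large, v_small)  # negative: user pays
    return power_stroke + return_stroke


if __name__ == "__main__":
    n, t = 1.0, 500.0  # hypothetical: one mole of gas at 500 K
    print(f"power stroke delivers {isothermal_work_by_gas(n, t, 1.0, 2.0):.1f} J")
    print(f"net work over the Atkins cycle: {atkins_cycle_net_work(n, t, 1.0, 2.0):.1e} J")
```

The two logarithms are equal and opposite, so apart from floating-point rounding the net work is zero.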

The indicator diagram for the Carnot cycle.

In order to make the cycle useful, we have to arrange matters so that not all the work of the power stroke is lost in restoring the gas to its initial pressure, temperature, and volume. We need a way to reduce the pressure of the gas inside the cylinder, so that during the compression stage less work has to be done to drive the piston back in. One way of reducing the pressure of a gas is to lower its temperature. That we can do by including in the cycle a stage of adiabatic expansion; we have seen that such an expansion lowers the temperature.

The indicator diagram for the Atkins cycle. The two steps are isothermal, and occur at the same temperature: the cycle is useless, because no work is produced overall.#

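The essential step in the Carnot cycle is therefore to break the thermal contact with the hot reservoir before the piston is fully withdrawn, at B, and to let the gas go on expanding adiabatically from B to C. The gas still pushes the piston back and does work, but because no energy can now flow in as heat, its temperature falls. The expansion is allowed to continue until the gas has cooled to the temperature of the cold sink.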

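At this point we have to begin to restore the gas to its initial condition. The first restoration step involves pushing in the piston (doing work) and reducing the volume toward its initial value. This stage (from C to D) is carried out isothermally, with the cylinder in thermal contact with the cold sink, so that the energy we put in as work does not warm the gas but is discarded as heat into the sink.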

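This compression takes us to D. Finally, the thermal contact with the cold sink is broken and the gas is compressed adiabatically from D back to A. In this last stage its temperature rises to that of the hot source, and the gas regains its initial pressure, temperature, and volume.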

The Carnot cycle can be explored by using the first computer program listed in Appendix 3. In general, a Carnot cycle is any cycle that consists of two adiabatic and two isothermal stages.

Not only is the cycle complete, and the engine back exactly in its initial condition and ready to go through another, but work has been produced. As we set out to achieve, more work was produced in the power strokes than was absorbed in the restoration stages, because the compression work has been done against a lower pressure. This is reflected in the shape of the indicator diagram at the bottom of page 18: it now encloses a nonzero area, and the net work done by the engine, which is equal to that area, is also nonzero.
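For readers who want to see the numbers, here is a minimal sketch of the four stages for an ideal gas. It is not the program in Appendix 3. It assumes an ideal monatomic gas (γ = 5/3) and labels the corners A, B, C, D in the conventional order, starting from the initial state A. It uses the ideal-gas relations W = nRT ln(V_end/V_start) for the isothermal stages and W = nC_V(T_start - T_end) for the adiabatic ones, with work done by the gas counted as positive. The function name is hypothetical.

```python
import math

R = 8.314  # molar gas constant, J/(K mol)


def carnot_cycle(n, t_hot, t_cold, v_a, v_b, gamma=5.0 / 3.0):
    """Quasistatic Carnot cycle for n moles of an ideal gas (temperatures in kelvin).

    A->B isothermal expansion at t_hot (heat absorbed from the hot source)
    B->C adiabatic expansion, gas cools from t_hot to t_cold
    C->D isothermal compression at t_cold (heat discarded into the cold sink)
    D->A adiabatic compression, gas warms back to t_hot
    """
    cv = R / (gamma - 1.0)  # molar heat capacity at constant volume
    ratio = (t_hot / t_cold) ** (1.0 / (gamma - 1.0))  # adiabat: T * V**(gamma-1) constant
    v_c, v_d = v_b * ratio, v_a * ratio

    w_ab = n * R * t_hot * math.log(v_b / v_a)    # power stroke
    w_bc = n * cv * (t_hot - t_cold)              # adiabatic expansion, still doing work
    w_cd = n * R * t_cold * math.log(v_d / v_c)   # negative: work done ON the gas
    w_da = n * cv * (t_cold - t_hot)              # negative: cancels w_bc exactly

    q_hot = w_ab    # isothermal ideal gas: heat absorbed equals work done
    q_cold = -w_cd  # heat discarded into the cold sink (reported as a positive number)
    net = w_ab + w_bc + w_cd + w_da
    return {"net_work": net, "q_hot": q_hot, "q_cold": q_cold, "efficiency": net / q_hot}


if __name__ == "__main__":
    result = carnot_cycle(1.0, 500.0, 300.0, 1.0, 2.0)  # hypothetical values
    for key, value in result.items():
        print(f"{key:10s} {value:10.3f}")
```

With the hot source at 500 K and the cold sink at 300 K, the efficiency comes out as 1 - 300/500 = 0.4, whatever volumes are chosen. The net work equals q_hot - q_cold, so energy is conserved even though only part of the heat becomes work. Setting t_cold equal to t_hot collapses the cycle into the workless Atkins cycle.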

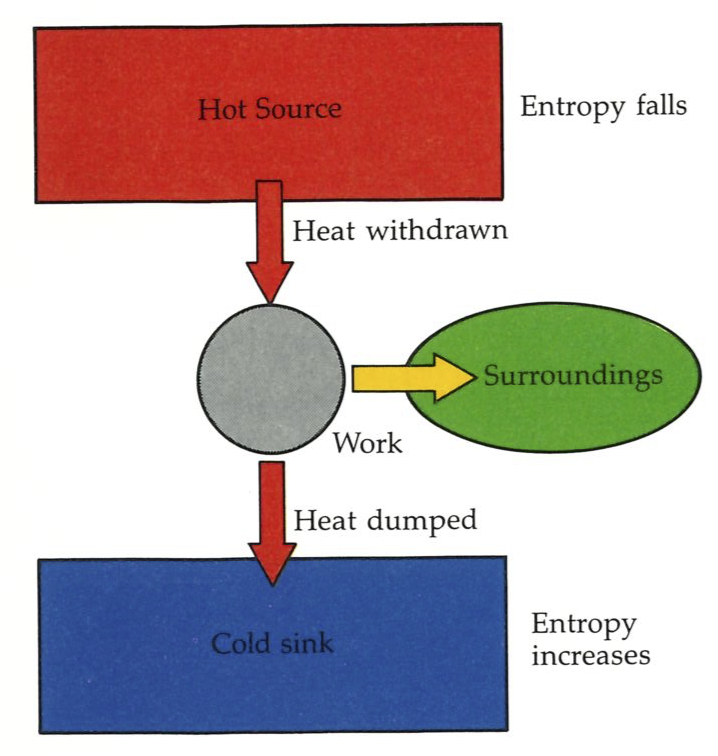

But there is an exceedingly important point. Notice the importance of the cold sink. Without the cold sink we have the primitive and useless Atkins cycle, a sequence that is cyclic but workless. As soon as we allow energy to be discarded into a cold sink, the lower line of the indicator diagram drops away from the upper, and the area that the cycle encloses becomes nonzero: the sequence is still cyclic, but now it is useful. However, the price we have to pay in order to generate work from the heat absorbed from the hot source is to throw some of that heat away. This captures the essence of Carnot’s view that a heat engine is an energy mill (although we have discarded the conservation of caloric). Energy falls from the hot source toward the cold sink and is conserved overall; but because we have set up this fall from hot to cold, we are able to draw some of the energy off as work, so not all of it arrives in the cold sink. The cold sink appears to be essential, for only if it is available can we set up the energy fall, and draw off some as work.

Now we generalize. The Carnot cycle is only one way to extract work from heat. Nevertheless, it is the experience of everyone who has studied engines that, as in the Carnot cycle, in every engine there has to be a cold sink, and that at some stage of the cycle energy must be discarded into it. That little mouse of experience is nothing other than the Second Law of thermodynamics.

Thus the Second Law moves onto the stage. I have allowed it to creep in, because that emphasizes the extraordinary nature of thermodynamics. All the law seems to be saying is that heat cannot be completely converted into work in a cyclic engine: some has to be discarded into a cold sink. That is, we appear to have identified a fundamental tax: Nature accepts the equivalence of heat and work, but demands a contribution whenever heat is converted into work.

Note the dissymmetry. Nature does not tax the conversion of work into heat: we may fritter away our hard-won work by friction, and do so completely. It is only heat that cannot be so converted. Heat is taxed; not work.

The web of events is beginning to form. Bouncing balls come to rest; hot objects cool; and now we have recognized a dissymmetry between heat and work. The domain of the Second Law must now begin to spread outward from the steam engine and to claim its own. By the end of the book, we shall see that it will have claimed life itself.

2 THE SIGNPOST OF CHANGE#

This is where we begin to define and refine corruption. So far we have seen that the immediate successors of Carnot were able to disentangle a rule about the quantity of energy from a rule about the direction of its conversion. Energy displaced heat as the eternally conserved; heat and work, hitherto regarded as equivalent, were shown to be dissymmetric. But these are bald, imprecise, and incomplete remarks: we must now sharpen them and put ourselves in a position to explore their ramifications. This we shall do in two stages. First, briefly, we shall refine the notions of heat and work, which so far we have regarded as “obvious” quantities. Then, with the precision such refinement will bring to the discussion, we shall start our main business, the refinement of the statement of the Second Law. With that refinement will come power and, as often happens, corruption too. We shall see that the domain of the Second Law is corruption and decay, and we shall see what extraordinarily wonderful things take place when quality gives way to chaos.

The Nature of Heat and Work#

Central to our discussion so far, and for the next couple of chapters, are the concepts of heat and work. Perhaps the most important contribution of nineteenth-century thermodynamics to our comprehension of their nature has been the discovery that they are names of methods, not names of things. The early nineteenth-century view was that heat was a thing, the imponderable fluid “caloric”; but now we know that there is no such “thing” as heat. You cannot isolate heat in a bottle or pour it from one block of metal to another. The same is true of work: that too is not a thing; it can be neither stored nor poured.

Both heat and work are terms relating to the transfer of energy. To heat an object means to transfer energy to it in a special way (making use of a temperature difference between the hot and the heated). To cool an object is the negative of heating it: energy is transferred out of the object under the influence of a difference in temperature between the cold and the cooled. It is most important to realize, and to remember throughout the following pages (and maybe beyond), that heat is not a form of energy: it is the name of a method for transferring energy.

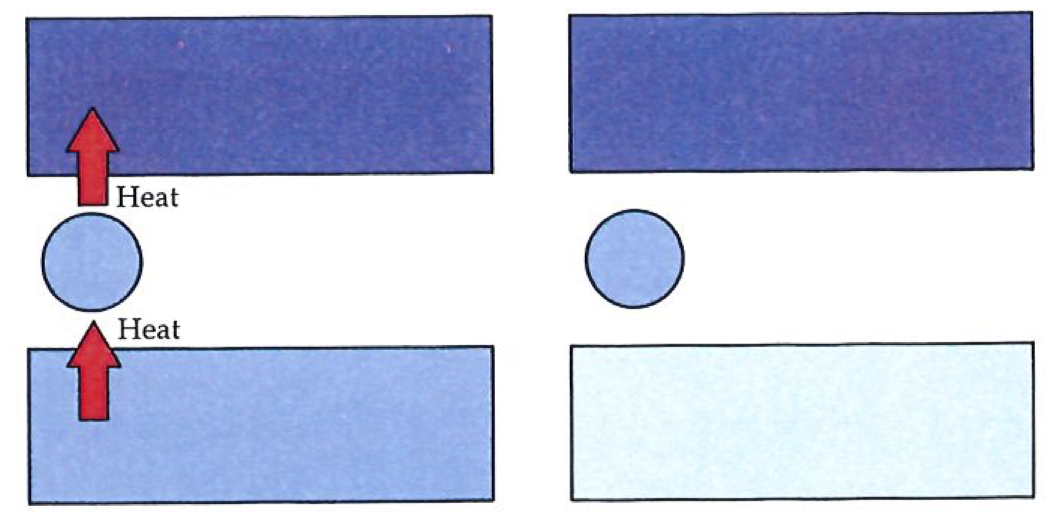

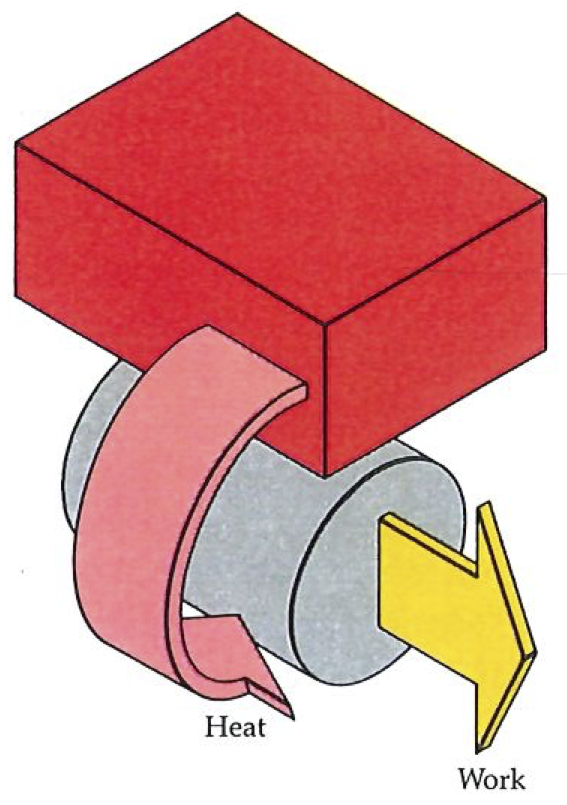

The Kelvin statement of the Second Law denies the possibility of converting a given quantity of heat completely into work without other changes occurring elsewhere.#

The same is true of work. Work is what you do when you need to change the energy of an object by a means that does not involve a temperature difference. Thus, lifting a weight from the floor and moving a truck to the top of a hill involve work. Like heat, work is not a form of energy: it is the name of a method for transferring energy.

All that having been established, we are going to return to informality again. In chapter 1 we said things like “heat was converted into work”. If we were to speak precisely, we would have to say “energy was transferred from a source by heating and then transferred by doing work.” But such precision would sink this account under a mass of verbiage; so we shall use the natural English way of talking about heat and work, and use expressions such as “heat flows into the system…” But whenever we do, we shall always add in a whisper, “but we know what we really mean”.

The Seeds of Change#

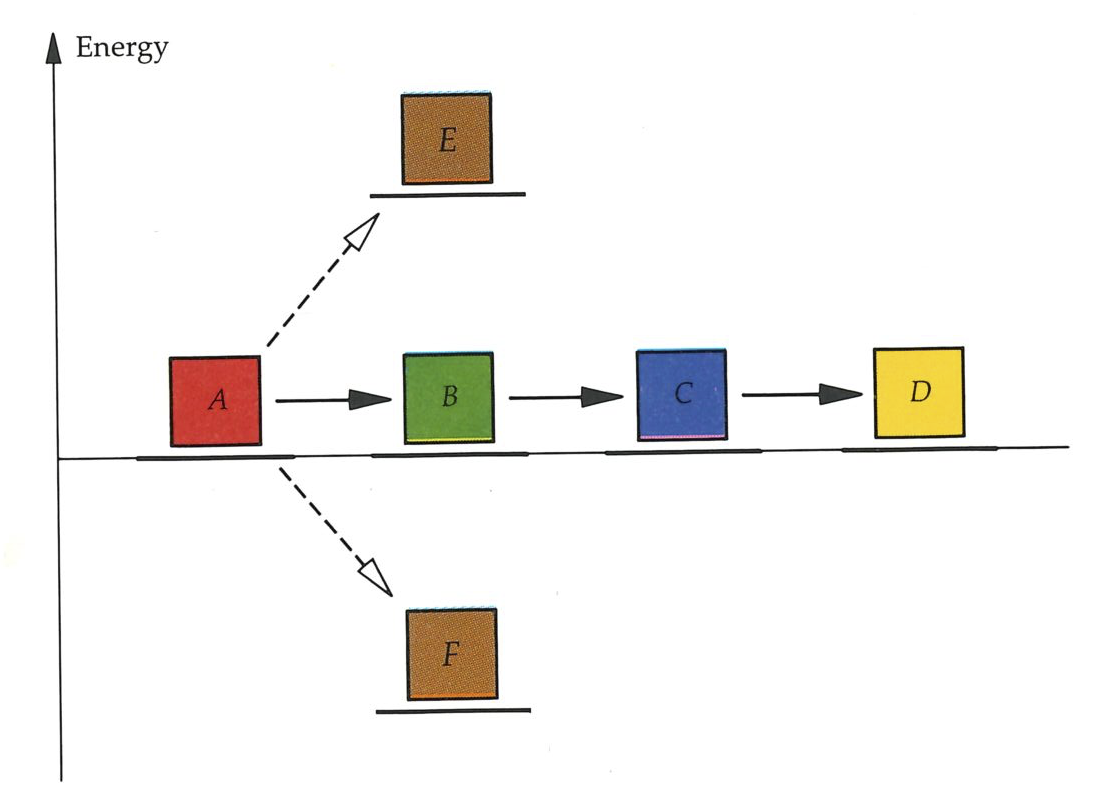

Now we refine the Second Law into a constructive tool. So far it has crept mouselike into the discussion as a not particularly impressive commentary on some not particularly interesting experience with engines. Cold sinks, we have seen, are necessary when we seek to convert heat into work. The formal restatement of this item of experience is known as the Kelvin statement of the Second Law:

Second Law: No process is possible in which the sole result is the absorption of heat from a reservoir and its complete conversion into work.

The most important point to pick out of this statement of the Second Law is the dissymmetry of Nature that we have already mentioned. It states that it is impossible to convert heat completely into work (see the figure on the left); it says nothing about the complete conversion of work into heat. Indeed, as far as we know, there is no constraint on the latter process: work may be completely converted into heat without there being any other discernible change. For example, frictional effects may dissipate the work being done by an engine, as when a brake is applied to a wheel. All the energy being transferred into the outside world by the engine may be dissipated in this way. Here, then, is Nature’s fundamental dissymmetry; for although work and heat are equivalent in the sense that each is a manner of transferring energy, they are not equivalent in the manner in which they may interchange. We shall see that the world of events is the manifestation of the dissymmetry expressed by the Second Law.

The Kelvin statement should not be construed too broadly. It denies the existence of processes in which heat is extracted from a source, and converted completely into work, there being no other change in the Universe. It does not deny that heat can be completely converted into work when other changes are allowed to take place too. Thus cannons can fire cannonballs: the heat generated by the combustion of the charge is turned completely into the work of lifting the ball; however, cannons are literally one-shot processes, and the state of the system is quite different after the conversion (for instance, the volume of the gas that propelled the ball from the cannon remains large, and is not recompressed; cannons are not cycles).

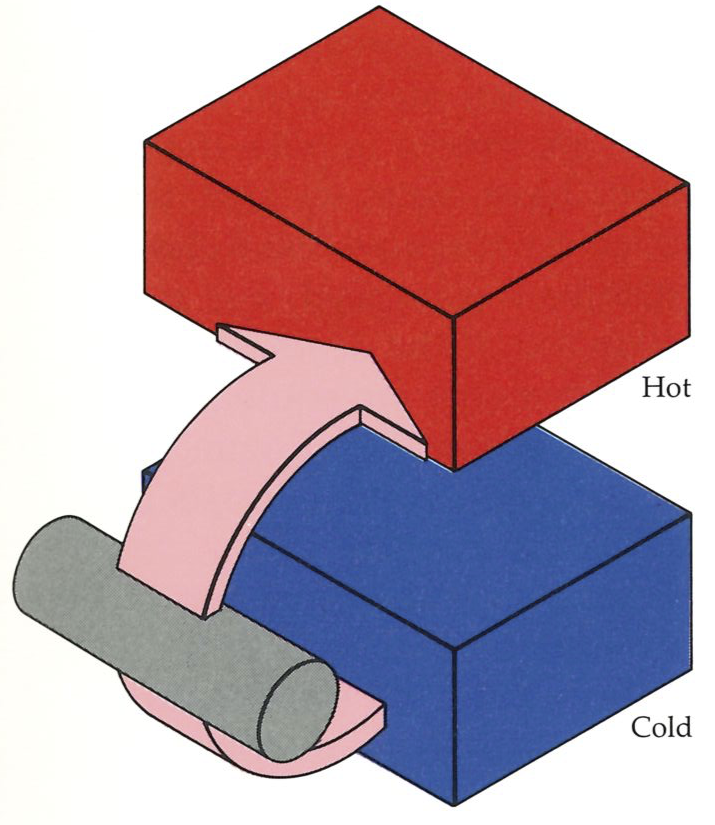

The Clausius statement of the Second Law denies the possibility of heat flowing spontaneously from a cold body to one that is hotter.#

One delight of thermodynamics is the way in which quite unrelated remarks turn out to be equivalent. This is the way the subject creeps over the landscape of events and digests them. Now the mouse can begin to grow and claim its own.

As an example of this process of incorporation, which allows the Second Law to spread away from the steam engine, we shall set in apparent opposition to the Kelvin statement of the Second Law the rival formulation devised by Clausius:

Second Law: No process is possible in which the sole result is the transfer of energy from a cooler to a hotter body.

First, note that the Clausius statement can stand on its own as a summary of experience: so far as we know, no one has ever observed energy to transfer spontaneously (that is, without external intervention) from a cool body to a hot body (see figure on left). The laws of thermodynamics ignore, of course, the sporadic reports of purported miracles, and their proven predictive power is a retrospective argument against the occurrence of such miracles. The fact that we need to construct elaborate devices to bring about refrigeration and air conditioning, and must run them by using electric power, is a practical manifestation of the validity of the Clausius statement of the Second Law: for although heat will not spontaneously flow to a hotter body, we can cause it to flow in an unnatural direction if we allow changes to take place elsewhere in the Universe. In particular, a refrigerator operates at the expense of a burning lump of coal, a stream of falling water, or an exploding nucleus elsewhere. The Second Law specifies the unnatural, but does not forbid us to bring about the unnatural by means of a natural change elsewhere.

Second, the Clausius statement, like the Kelvin statement, identifies a fundamental dissymmetry of Nature, but ostensibly a different dissymmetry. In the Kelvin statement the dissymmetry is that between work and heat; in the Clausius statement there is no overt mention of work. The Clausius statement implies a dissymmetry in the direction of natural change: energy may flow spontaneously down the slope of temperature, not up. The twin dissymmetries are the anvils on which we shall forge the description of all natural change.

But there cannot be two Second Laws of thermodynamics: if the twin dissymmetries of Nature are both to survive, they must be the outcome of a single Second Law or at least one that should be expressed more richly than either the Kelvin or the Clausius statement alone. In fact, the two statements, although apparently different, are logically equivalent: there is indeed only one Second Law, and it may be expressed as either statement alone. The twin dissymmetries, and the anvils, are really one.

In order to show that the two statements are equivalent, we use the logical device of demonstrating that the Kelvin statement implies the Clausius statement, and that the Clausius statement implies the Kelvin. Actually, in the slippery way that logicians have, what we shall do is exactly the opposite: we shall show that if we can disprove the Kelvin statement, then the falsity of the Clausius statement is implied, and if we can disprove the Clausius, then farewell Kelvin too. If the death of either one implies the death of the other, then the statements are equivalent.

For our purposes, we bring on the family Rogue: Jack Rogue, the purveyor of anti-Kelvin devices, and Jill Rogue, whose line consists of anti-Clausius devices. First Jack will present his wares.

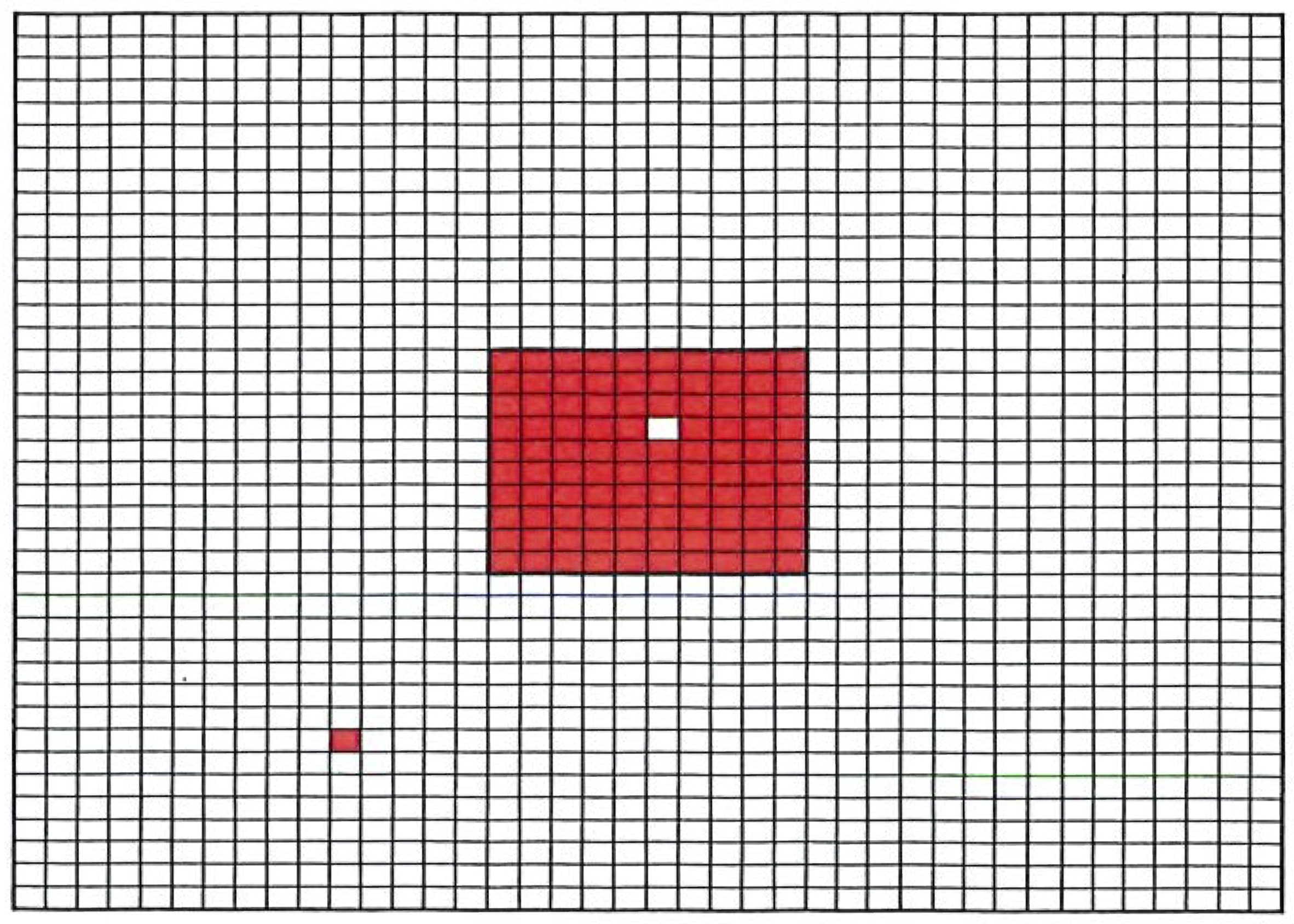

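We take Jack’s device, which he claims is an engine that contravenes Kelvin’s experience, and can convert heat entirely into work and produce no change elsewhere, and we connect it between a hot source and a cold sink (see figure on facing page). We also connect it to another (conventional) engine, which will be run as a refrigerator and used to pump energy from the same cold sink to the same hot source. According to Jack, all the heat drawn from the hot source is converted into work. Suppose, then, that we run the engine long enough to remove 100 joules of energy from the hot source. According to Jack, all 100 joules emerge as work, and we use that work to drive the refrigerator. The refrigerator draws some energy from the cold sink and delivers it, together with the 100 joules of work, to the hot source. The hot source therefore regains the 100 joules it lost plus the energy drawn from the cold sink, and no work is left over. The sole result is the transfer of energy from the cold sink to the hot source, which is exactly what Clausius denies.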

The units for expressing quantities of energy, whether they are simply stored or are being shipped as heat or as work, are explained in Appendix 1. We shall use joules.

The argument to show that a failure of the Kelvin statement implies a failure of the Clausius statement involves connecting an ordinary engine between two reservoirs and driving it with an anti-Kelvin device. The net effect of the flows of energy shown here is to transport heat spontaneously from the cold to the hot reservoir, contradicting Clausius.

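Happy Jill now shows her device, which, she claims, spontaneously pumps heat from a cold sink to a hot source and leaves no change elsewhere. As was done with Jack’s, Jill’s device is connected between a hot source and a cold sink, and another engine is also connected between the two (see figure on next page). Jill runs her device, which pumps 100 joules of energy from cold to hot, and does so without any interference from outside, thus denying Clausius’s experience of life. The other engine is arranged to run, and to dump 100 joules of energy into the cold sink, providing the balance of whatever it draws from the hot source as work. The net result is that the cold sink is unchanged (it loses 100 joules to Jill’s device and regains 100 joules from the engine). Meanwhile, all the energy drawn from the hot source beyond the 100 joules that Jill’s device returned to it has been converted completely into work, and nothing else has changed. That is exactly what Kelvin denies: if Clausius falls, Kelvin falls too.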
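The bookkeeping in the two arguments can be checked mechanically. In this sketch (hypothetical names throughout) each device is reduced to its net effect over a whole number of cycles: the energy gained by the hot source, the energy gained by the cold sink, and the work delivered to the outside world. The refrigerator's 50 joules drawn from the cold sink and the engine's 150 joules drawn from the hot source are illustrative figures, not taken from the text. Any values that let the conventional machines run (the engine must draw more than 100 joules) lead to the same verdicts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flows:
    """Net effect of a device over a whole number of cycles, in joules.
    hot: energy gained by the hot source; cold: energy gained by the cold sink;
    work: work delivered to the outside world."""
    hot: float
    cold: float
    work: float

    def __add__(self, other: "Flows") -> "Flows":
        # Running two devices together simply adds their net effects.
        return Flows(self.hot + other.hot, self.cold + other.cold, self.work + other.work)


def verdict(f: Flows) -> str:
    """Classify a net effect against the Kelvin and Clausius statements."""
    if abs(f.hot + f.cold + f.work) > 1e-9:
        raise ValueError("energy is not conserved (First Law violated)")
    if f.work == 0 and f.cold < 0 < f.hot:
        return "anti-Clausius: energy moved from cold to hot and nothing else changed"
    if f.work > 0 and f.cold == 0 and f.hot < 0:
        return "anti-Kelvin: heat from the hot source turned wholly into work"
    return "consistent with both statements"


# Jack's claimed device: 100 J leaves the hot source and all of it emerges as work.
jack = Flows(hot=-100.0, cold=0.0, work=100.0)
# Conventional refrigerator driven by that 100 J of work; the 50 J it draws
# from the cold sink is a hypothetical figure.
fridge = Flows(hot=150.0, cold=-50.0, work=-100.0)

# Jill's claimed device: 100 J pumped from cold to hot with no work supplied.
jill = Flows(hot=100.0, cold=-100.0, work=0.0)
# Conventional engine dumping 100 J into the cold sink; the 150 J it draws
# from the hot source is a hypothetical figure.
engine = Flows(hot=-150.0, cold=100.0, work=50.0)

if __name__ == "__main__":
    print("Jack + refrigerator:", verdict(jack + fridge))
    print("Jill + engine:      ", verdict(jill + engine))
    print("Engine alone:       ", verdict(engine))
```

Each rogue's device, combined with an honest machine, produces exactly the result forbidden by the other statement, while the honest machines on their own pass both tests.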

Toward Corruption#

The progress of science is marked by the transformation of the qualitative into the quantitative. In this way not only do notions become turned into theories and lay themselves open to precise investigation, but the logical development of the notion becomes, in a sense, automated. Once a notion has been assembled mathematically, then its implications can be teased out in a rational, systematic way. Now, we have promised that this account of the Second Law will be nonmathematical, but that does not mean we cannot introduce a quantitative concept. Indeed, we have already met several, temperature and energy among them. Now is the time to do the same thing for spontaneity.

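The idea behind the next move can be described as follows. The Zeroth Law of thermodynamics refers to the thermal equilibrium between objects (“objects”, the things at the center of our attention, are normally referred to as systems in thermodynamics, and we shall use that term from now on). Thermal equilibrium exists between two systems when, placed in thermal contact, they undergo no change. The Zeroth Law states that if two systems are each in thermal equilibrium with a third, then they are in thermal equilibrium with each other. This is what allows us to introduce the concept of temperature: a property that tells us at a glance whether two systems will be in thermal equilibrium when they are brought into contact.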

The First Law gives us a reason to carry out a similar procedure, but now one that leads to the idea of “energy”. We may be interested in what states a system can reach if we heat it or do work on it. We can assess whether a particular state is accessible from the starting condition by introducing the concept of energy. If the new state differs in energy from the initial state by an amount that is different from the quantity of work or heating that we are doing, then we know at once, from the First Law, that that state cannot be reached: we have to do more or less work, or more or less heating, in order to bring the energy up to the appropriate value. The energy of a system is therefore a property we can use for deciding whether a particular state is accessible.

This suggests that there may be a property of systems that could be introduced to accommodate what the Second Law is telling us. Such a property would tell us, essentially at a glance, not whether one state of the system is accessible from the other (that is the job of the energy acting through the First Law), but whether it is spontaneously accessible. That is, there ought to be a property that can act as the signpost of natural, spontaneous change, change that may occur without the need for our technology to intrude into the system in order to drive it.

There is such a property. It is the entropy of the system, perhaps the most famous and awe-inspiring thermodynamic property of all. Awe-inspiring it may be: but the awe should not be misplaced. The awe for entropy should be reserved for its power, not for its difficulty. The fact that in everyday discourse “entropy” is a word far less common than “energy” admittedly makes it less familiar, but that does not mean that it stands for a more difficult concept. In fact, I shall argue (and in the next chapter hope to demonstrate) that the entropy of a system is a simpler property to grasp than its energy! The exposure of the simplicity of entropy, however, has to await our encounter with atoms. Entropy is difficult only when we remain on the surface of appearances, as we do now.

Entropy#

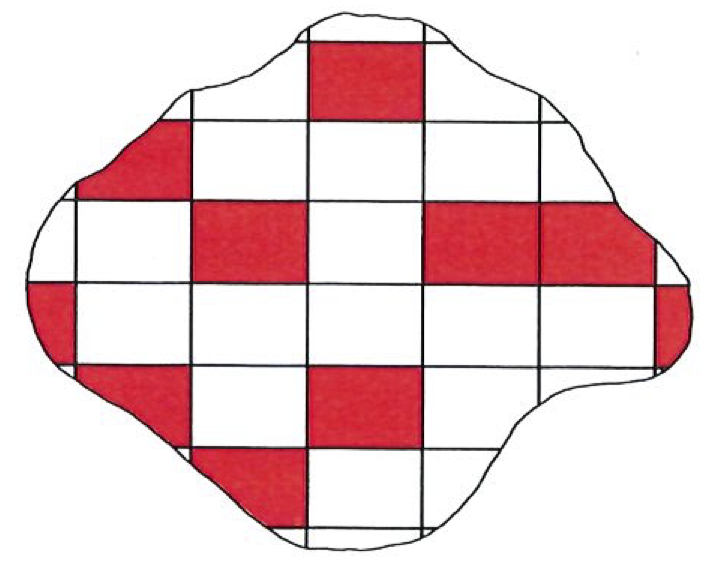

We are now going to build a working definition of entropy, using the information we already have at our disposal. The First Law instructs us to think about the energy of a system that is free from all external influences; that is, the constancy of energy refers to the energy of an isolated system, a system into which we cannot penetrate with heat or with work, and which for brevity we shall refer to as the universe (see figure on facing page). Similarly, the entropy we define will also refer to an isolated system, which we shall call the universe. Such names reflect the hubris of thermodynamics: later we shall see to what extent the “universe” is truly the Universe.

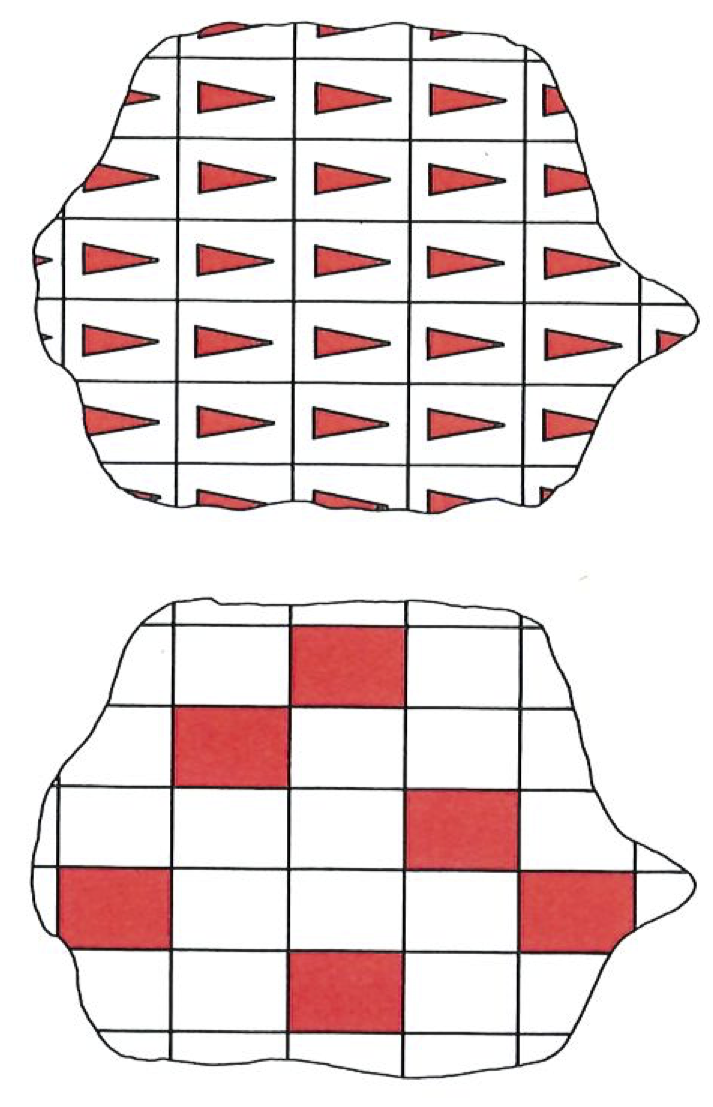

In thermodynamics we focus attention on a region called the system. Around it are the surroundings. Together the two constitute the universe. In practice, the universe may be only a tiny fragment of the Universe itself, such as the interior of a thermally insulated, closed container, or a water bath maintained at constant temperature.

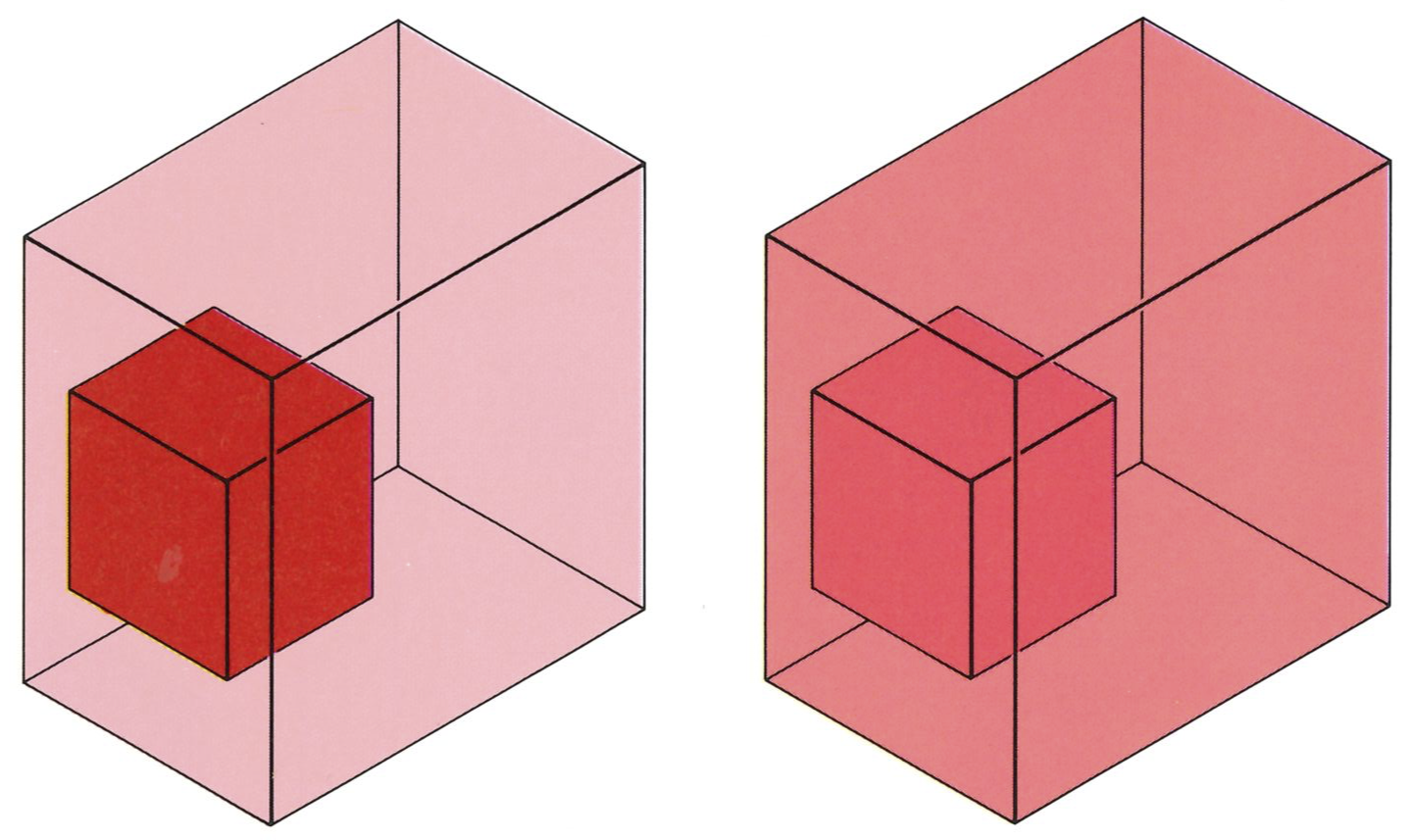

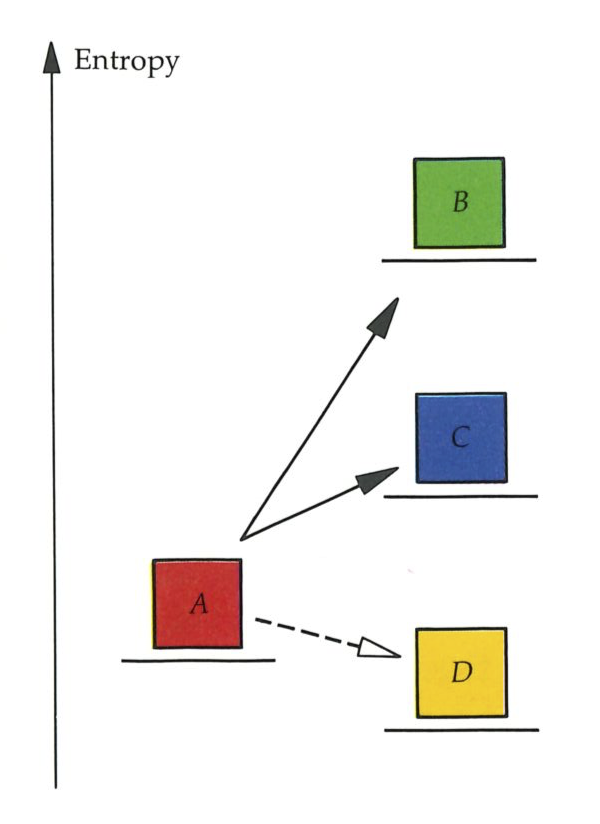

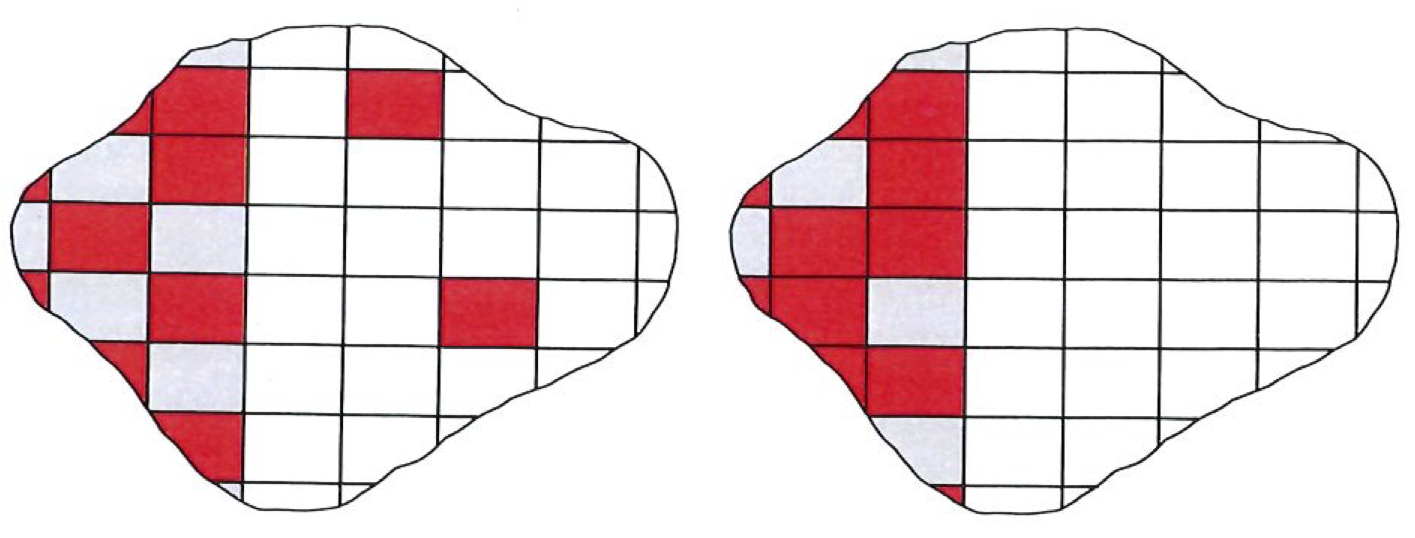

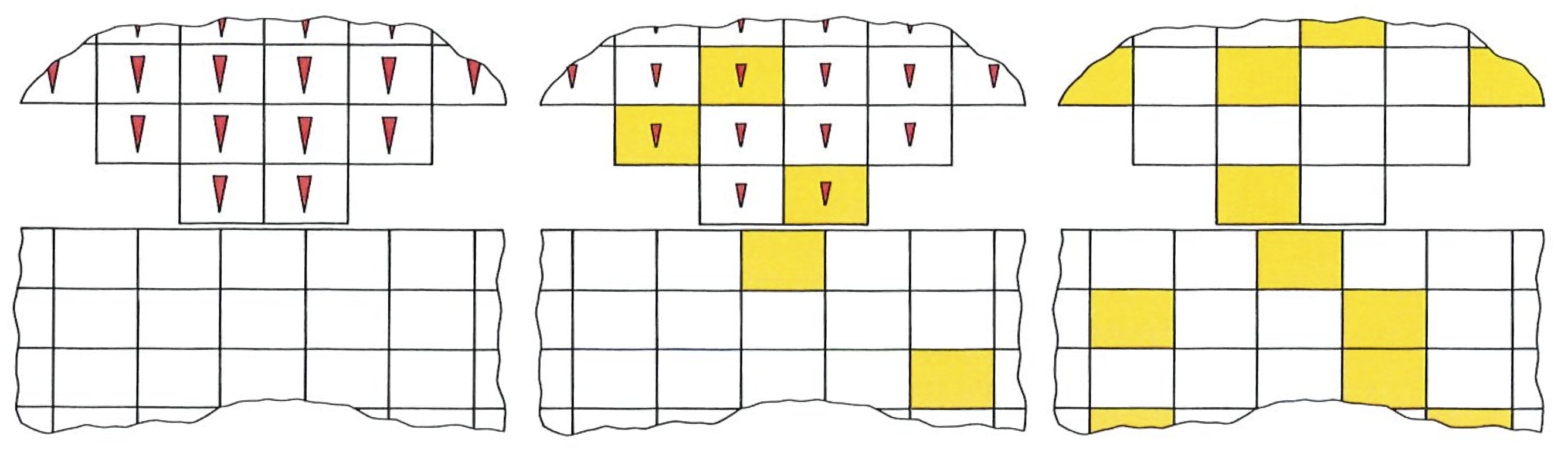

Suppose there are two states of the universe; for instance, in one a block of metal is hot, and in the other it is cold (see top figure on next page). Then the First Law tells us that the second state can be reached from the first only if the total energy of the universe is the same for each. The Second Law examines not the label specifying the energy of the universe, but another label that specifies the entropy. We shall define the entropy so that if it is greater in state B than in state A, then B can be reached from A by a natural, spontaneous change.

We have to construct a definition of entropy in such a way that in any universe entropy increases for natural changes, and decreases for changes that are unnatural and have to be contrived.

An isolated system (a universe) containing a hot block of metal is in a different state from one containing a similar but cold block, even if the total energy is the same in each. There must be a property other than total energy that determines the direction of spontaneous change.

Furthermore, we want to define it so that we capture the Clausius and Kelvin statements of the Second Law, and arrive at a way of expressing them both simultaneously in the following single statement:

Second Law: Natural processes are accompanied by an increase in the entropy of the universe.

The states

This is sometimes referred to not as the Second Law (which is properly a report on direct experience), but as the entropy principle, for it depends on a specification of the property “entropy,” which is not a part of direct experience. (Similarly, the statement “energy is conserved” is also more correctly referred to as the energy principle, for the First Law itself is also a commentary on direct experience of the changes that work can bring about, whereas the more succinct statement depends on a specification of what is meant by “energy.”)

The Kelvin statement is reproduced by the entropy principle if we define the entropy of a system in such a way that entropy increases when the system is heated, but remains the same when work is done. By implication, when a system is cooled its entropy decreases. Then Jack’s engine is discounted by the Second Law, because heat is taken from a hot source (so that its entropy declines), and work is done on the surroundings (with the result that the entropy of the surroundings remains the same), as shown in the top figure on the facing page. Overall, therefore, the entropy of the little universe that contains his engine and its surroundings decreases; hence his engine is unnatural.

In order for us to discount Jill’s device, the definition of entropy must depend on the temperature. We can capture her (and Clausius) if we suppose that the higher the temperature at which heat enters a system, the smaller the resulting change of entropy. In her anti-Clausius device, heat leaves the cold system, and the same quantity is dumped into the hot. Since the temperature of the cold reservoir is lower than that of the hot, the reduction of its entropy (see below) is greater than the increase of the entropy of the hot reservoir; so overall Jill’s device reduces the entropy of the universe, and it is therefore unnatural.

Now the net is beginning to close in on natural change. We have succeeded in capturing Jack and Jill jointly on a single hook, just as we have claimed that the entropy principle captures the two statements of the Second Law. From now on we should be able to discuss all natural change in terms of the entropy.

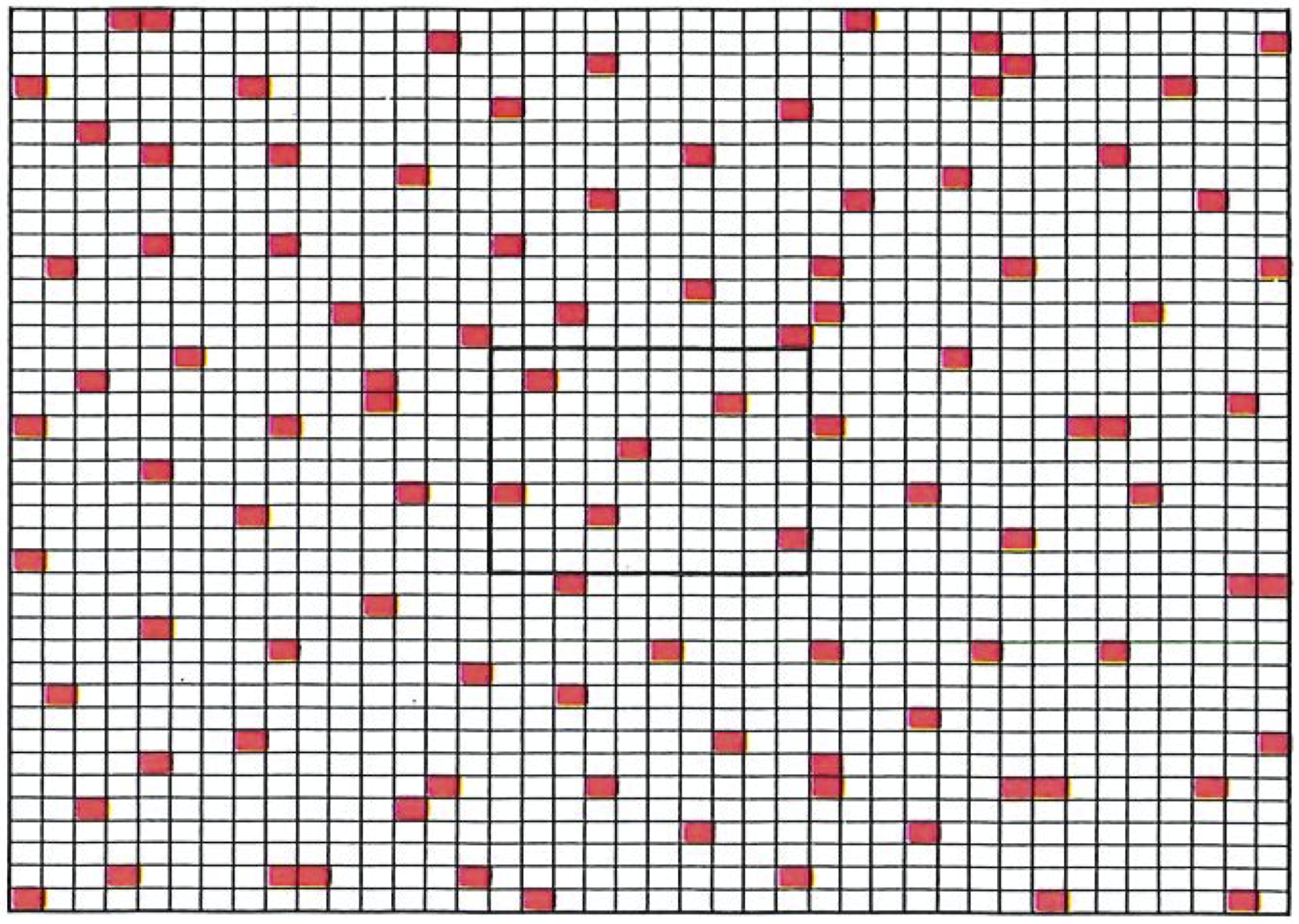

As in the illustration above, the shade of blue denotes the entropy. When heat is withdrawn from the cold reservoir, its entropy drops; when the same quantity of heat enters the hot reservoir, its entropy rises, but by a smaller amount, because the temperature there is higher. Overall, therefore, the entropy of the universe declines, which is also against experience.

Yet we are still hovering on the brink of actually defining entropy! Now is the time to take the plunge. We have seen that entropy increases when a system is heated; we have seen that the increase is greater the lower the temperature. The simplest definition would therefore appear to be:

$$\text{Change of entropy} = \frac{\text{Heat supplied}}{\text{Temperature}}$$

Happily, with care, this definition works.

First, let us make sure this definition captures what we have already done. If energy is supplied by heating a system, then Heat supplied is positive, and so the change of entropy is also positive (that is, the entropy increases). Conversely, if the energy leaks away as heat to the surroundings, Heat supplied is negative, and so the entropy decreases. If energy is supplied as work and not as heat, then Heat supplied is zero, and the entropy remains the same. If the heating takes place at high temperature, then Temperature has a large value; so for a given amount of heating, the change of entropy is small. If the heating takes place at low temperatures, then Temperature has a small value; so for the same amount of heating, the change of entropy is large. All this is exactly what we want.
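The checks in the preceding paragraph can be run as arithmetic, and so can the earlier verdicts on Jack and Jill, using the definition change of entropy = heat supplied / temperature. This sketch assumes temperatures on the absolute (kelvin) scale and uses hypothetical reservoir temperatures of 500 K and 300 K. The helper name is illustrative.

```python
def entropy_change(heat_supplied: float, temperature: float) -> float:
    """Change of entropy (J/K) of a thermal reservoir at a constant temperature.
    heat_supplied is positive when energy enters as heat and negative when it
    leaves; temperature must be on the absolute (kelvin) scale."""
    if temperature <= 0:
        raise ValueError("temperature must be a positive kelvin value")
    return heat_supplied / temperature


T_HOT, T_COLD = 500.0, 300.0  # hypothetical reservoir temperatures, kelvin
Q = 100.0                     # joules of heat in each transfer

# Work transfers no entropy, so only the heat terms count.
# Jack: heat leaves the hot source and all of it becomes work.
jack_total = entropy_change(-Q, T_HOT)
# Jill: heat leaves the cold sink and enters the hot source, nothing else.
jill_total = entropy_change(-Q, T_COLD) + entropy_change(Q, T_HOT)
# An ordinary leak: heat flows from the hot source into the cold sink.
natural_total = entropy_change(-Q, T_HOT) + entropy_change(Q, T_COLD)

if __name__ == "__main__":
    for name, ds in (("Jack", jack_total), ("Jill", jill_total), ("hot -> cold leak", natural_total)):
        label = "natural" if ds > 0 else "unnatural"
        print(f"{name:18s} entropy change of universe = {ds:+.4f} J/K ({label})")
```

Jack's and Jill's universes lose entropy (-0.2 J/K and about -0.13 J/K), whereas the ordinary flow from hot to cold gains about +0.13 J/K. This matches the verdicts reached above.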

Now for the care in the use of the definition. The temperature must be constant throughout the transfer of the energy as heat (otherwise it would be unclear which temperature to use in the formula). Generally a system gets hotter (that is, its temperature will rise) as heating proceeds. However, if the system is extremely large (for example, if it is connected to all the rest of the actual Universe), then however much heat flows in, its temperature remains the same. Such a component of the universe is called a thermal reservoir. Therefore we can safely use the definition of the change of entropy only for a reservoir. That is the first limitation (it may seem extreme, but we shall see how to relax it in a moment).

A second point concerns the manner in which energy is transferred. Suppose we allow an engine to do some work on its surroundings. Unless we are exceptionally careful, the raising of the weight, the turning of the crank, or whatever, will give rise to turbulence and vibration, which will fritter energy away by friction and in effect heat the surroundings. In that case some of the energy would reach the surroundings as heat, and that heating would contribute to the change in entropy. In order to eliminate this from the definition (but once again only in order to clarify the definition, not to eliminate dissipative processes from the discussion), we must specify how the energy is to be transferred. The energy must be transferred without generating turbulence, vortices, and eddies. That is, it has to be done infinitely carefully: pistons must be allowed to emerge infinitely slowly, and energy must be allowed to seep down a temperature gradient infinitely slowly. Such processes are then called quasistatic: they are the limits of processes carried out with ever-increasing care.

Measuring the Entropy#

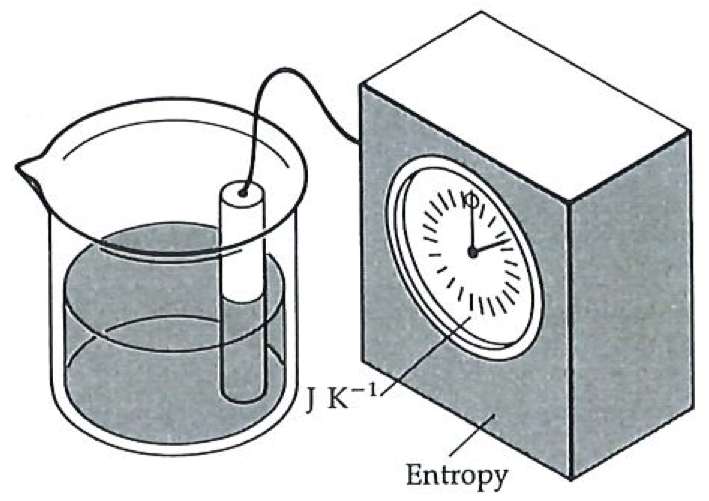

We have a definition of entropy, but the definition does not seem to give the concept much body. Although we regard properties such as temperature and energy as “tangible” (but we do so merely because they are familiar), the idea of entropy as heat supplied divided by temperature seems remote and abstract.

But is temperature really so familiar, and entropy so remote? We think of a liter of hot water and a liter of cold water as having different temperatures. In fact, they also have different entropies, and the “hot” water has both a higher entropy and a higher temperature than the cold water. The fact that hot water added to cold results in tepid water is a consequence of the change of entropy. Should we think then of “hotness” as denoting high temperature or as denoting high entropy? With which concept are we really familiar?

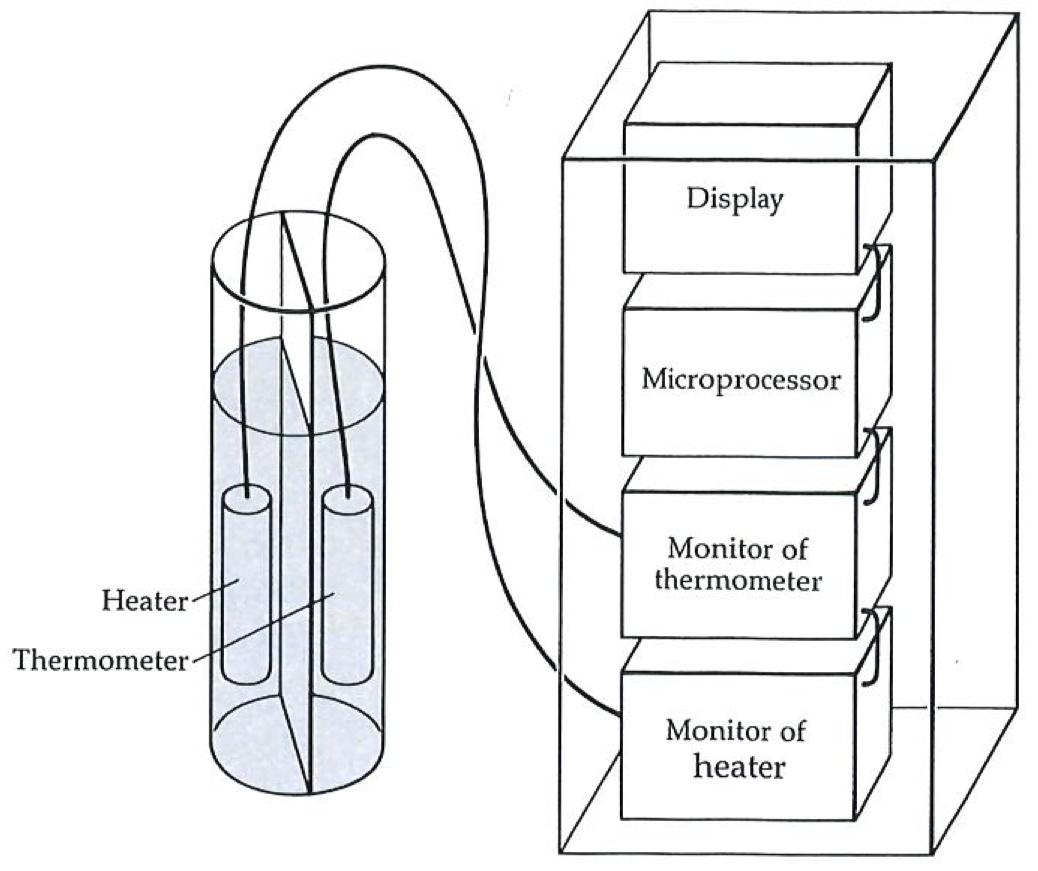

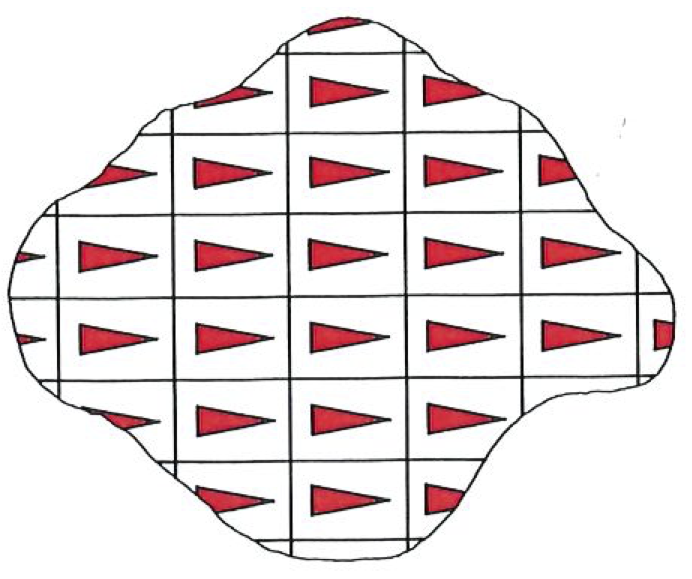

An entropy meter consists of a probe in the sample and a pointer giving a reading on a dial, exactly like a thermometer.#

Temperature seems familiar because we can measure it: we feel at home with pointer readings, and often mistake the reading for the concept. Take time, for instance: the pointer readings are an everyday commonplace, but the essence of time is much deeper. So it is with temperature; although it seems familiar, the nature of temperature is a far more subtle concept. The difficulty with accepting entropy is that we are not familiar with instruments that measure it, and consequently we are not familiar with their pointer readings. The essence of entropy, when we get to it, is certainly no more difficult, and may be simpler, than the essence of temperature. What we need, therefore, in order to break down the barrier between us and entropy, is an entropy meter.

The figure to the left shows an entropy meter; the figure on the next page indicates the sort of mechanism that we might find inside it: it is basically a thermometer attached to a microprocessor. The readings can be taken from the digital display.

Suppose we want to measure the entropy change when a lump of iron is heated. All we need do is attach the entropy meter to the lump, and start heating: the microprocessor monitors the temperature indicated by the thermometer, and converts it directly into an entropy change. What calculations it does we shall come to in a moment. The care we have to exercise is to do the heating extremely slowly, so that we do not create hot spots and get a distorted reading: the heating must be quasistatic.

The interior of the entropy meter is more complicated than that of a simple mercury thermometer. The probe consists of a heater (whose output is monitored by the rest of the meter) and a thermometer (which is also monitored). The microprocessor is programmed to do a calculation based on how the temperature of the sample depends on the heat supplied by the heater. The output shown on the dial is the entropy change of the sample between the starting and finishing temperatures.

The microprocessor is programmed as follows. First, it has to work out, from the rise in temperature caused by the heating, the quantity of energy that has been transferred to the lump from the heater. That is a fairly straightforward calculation once we know the heat capacity (the specific heat) of the sample, because the temperature rise is directly proportional to the heat supplied:

$$\text{Temperature rise} = \text{constant} \times \text{Heat supplied}$$

the coefficient being related to the heat capacity (for a heat capacity that does not vary with temperature, it is simply the reciprocal of the heat capacity). (We could always measure the heat capacity in a separate experiment, with the same apparatus, but with a different program in the microprocessor.) The heater supplies only a trickle of energy to the sample, and the microprocessor evaluates

$$\frac{\text{Heat supplied}}{\text{Temperature}}$$

for that trickle, adding the result to a running total.

The procedure continues: the thermometer records, the microprocessor goes on dividing and adding, and the heating continues until at long last (in a perfect experiment, at the other end of eternity) the temperature has risen to the final value. The microprocessor then displays the accumulated sum of all the little values of

$$\frac{\text{Heat supplied}}{\text{Temperature}}$$

which is the entropy change of the sample between the starting and final temperatures.

The entropy meter works by squirting tiny quantities of heat into the sample, and monitoring the temperature. It then evaluates $\text{Heat supplied}/\text{Temperature}$ for each squirt and adds up the results.
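The entropy meter's program can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a description of any real instrument: the heat capacity $C$ is taken to be constant over the whole temperature range (for real iron it is not), the function name `entropy_meter` and the numbers in the usage lines are hypothetical, and each trickle's ratio is evaluated at the temperature halfway through that small rise. For a constant heat capacity the accumulated sum should approach $C\ln(T_{\text{final}}/T_{\text{start}})$, which gives a check on the dividing and adding.

```python
import math


def entropy_meter(heat_capacity, t_start, t_final, steps):
    """Accumulate (heat supplied) / (temperature) over many small trickles of heat.

    heat_capacity -- J/K, assumed constant between t_start and t_final
    t_start, t_final -- temperatures in kelvin
    steps -- number of trickles; more steps means gentler, more nearly quasistatic heating
    """
    rise_per_step = (t_final - t_start) / steps
    heat_per_step = heat_capacity * rise_per_step  # heat needed for each small rise
    total = 0.0
    for i in range(steps):
        # Temperature at the middle of this small rise
        temperature = t_start + (i + 0.5) * rise_per_step
        total += heat_per_step / temperature  # divide, then add to the running total
    return total  # the entropy change, in J/K


# Hypothetical lump with C = 450 J/K (roughly a kilogram of iron), warmed from 300 K to 400 K
measured = entropy_meter(450.0, 300.0, 400.0, steps=10_000)
exact = 450.0 * math.log(400.0 / 300.0)
print(f"{measured:.4f} J/K (meter)  vs  {exact:.4f} J/K (C ln(T2/T1))")
```

Making `steps` larger corresponds to heating more gently; the sum then settles onto the logarithmic value, which is the numerical counterpart of the quasistatic requirement.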

That is as far as we need go for now. What I want to establish here is not so much the details of how the entropy change is measured in any particular process, but the fact that it is a measurable quantity, exactly like the temperature, and, indeed, that it can be measured with a thermometer too!

The Dissipation of Quality#

We can edge closer to complete understanding by reflecting on the implications of what this external view of entropy already reveals about the nature of the world. As a first step, we shall see how the introduction of entropy leads to a particularly important interpretation of the role of energy in events.

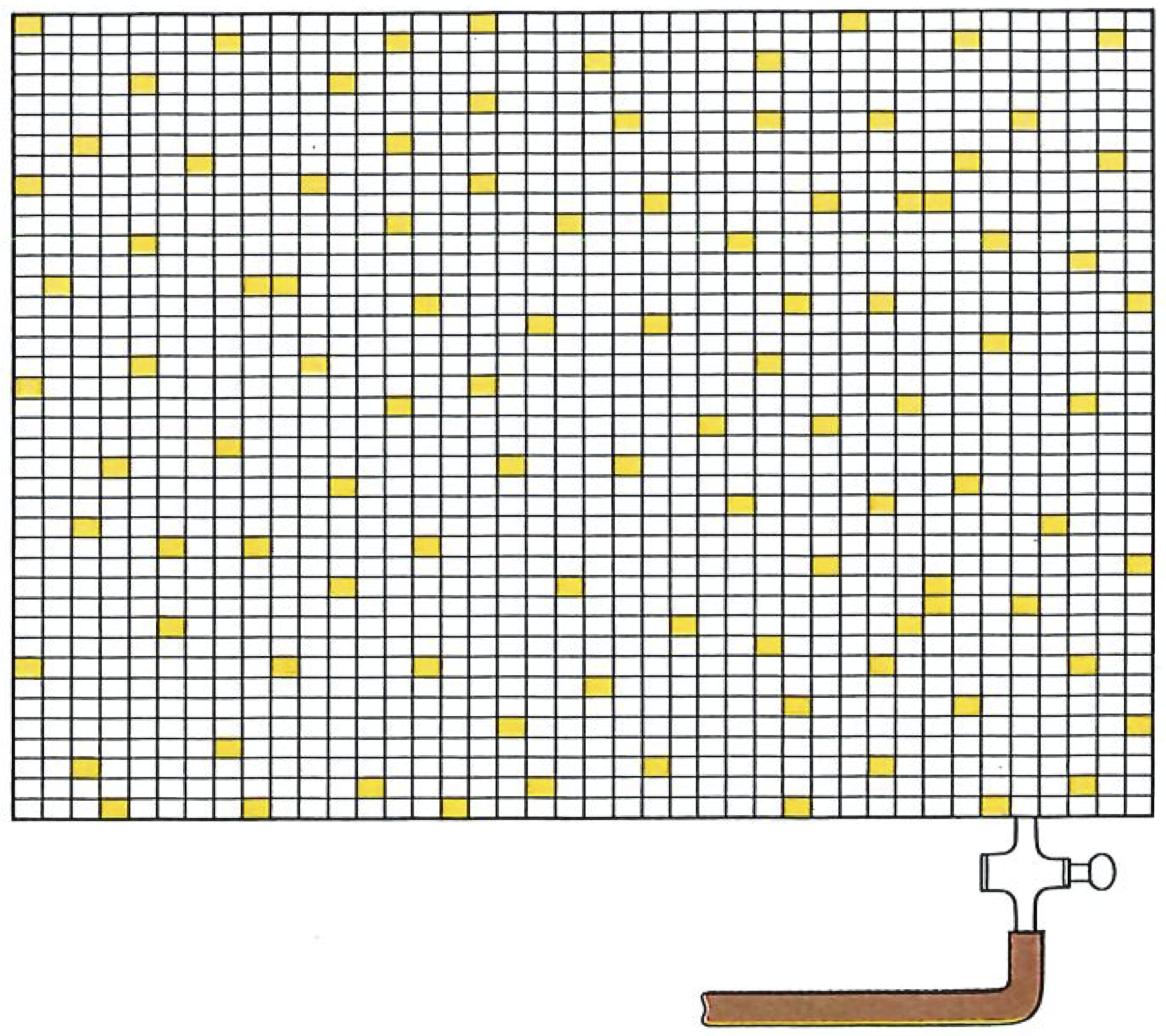

Suppose we have a certain amount of energy that we can draw from a hot source, and an engine to convert it into work. We know that the Second Law demands that we have a cold sink too; so we arrange for the engine to operate in the usual way. We can extract the appropriate quantity of work, and pay our tax to Nature by dumping a contribution of energy as heat into the cold sink. The energy we have dumped into the cold sink is then no longer available for doing work (unless we happen to have an even colder reservoir available). Therefore, in some sense, energy stored at a high temperature has better “quality”: high-quality energy is available for doing work; low-quality energy, corrupted energy, is less available for doing work.

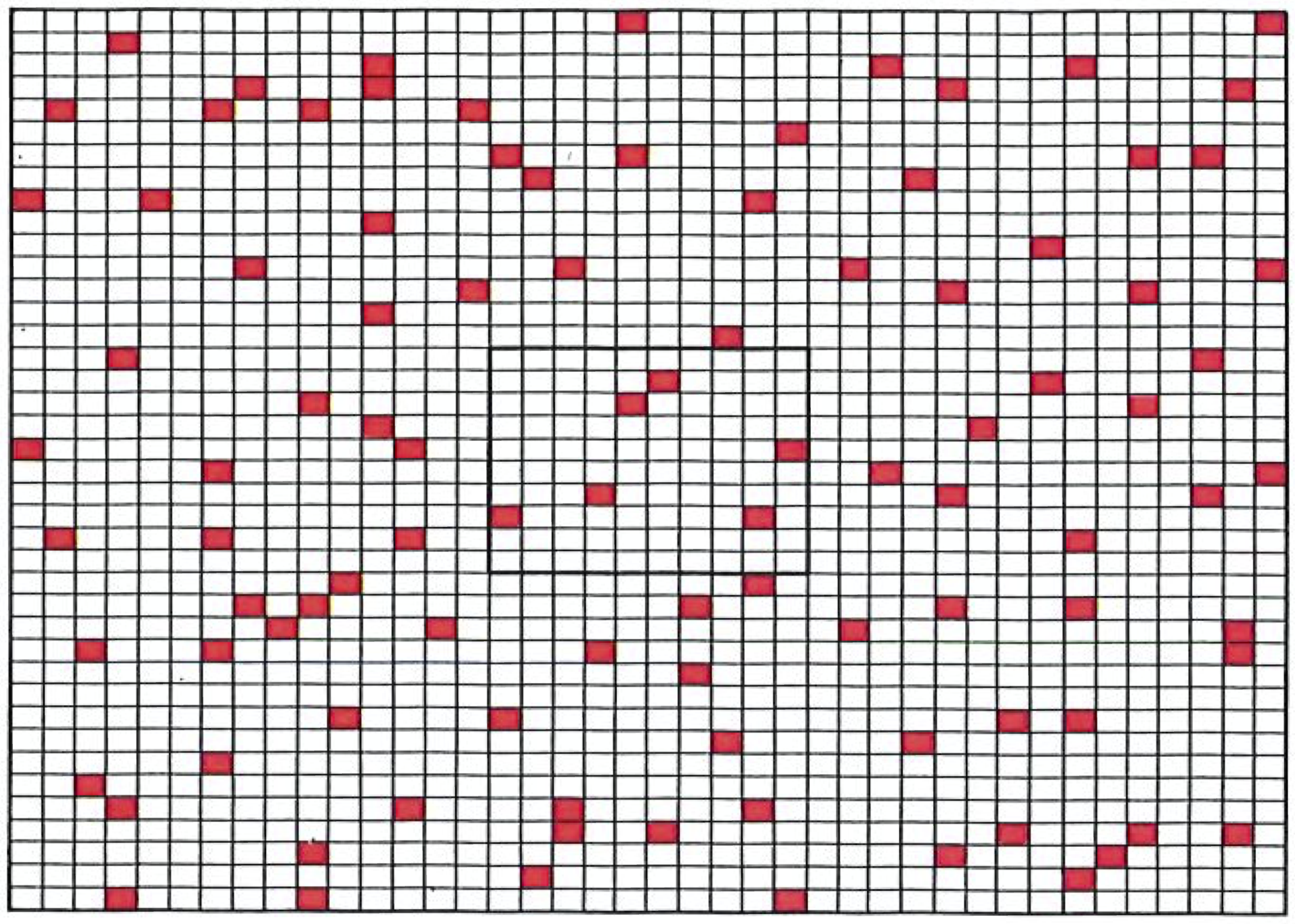

Some heat must be discarded into a cold sink in order for us to generate enough entropy to overcome the decline taking place in the hot reservoir.#

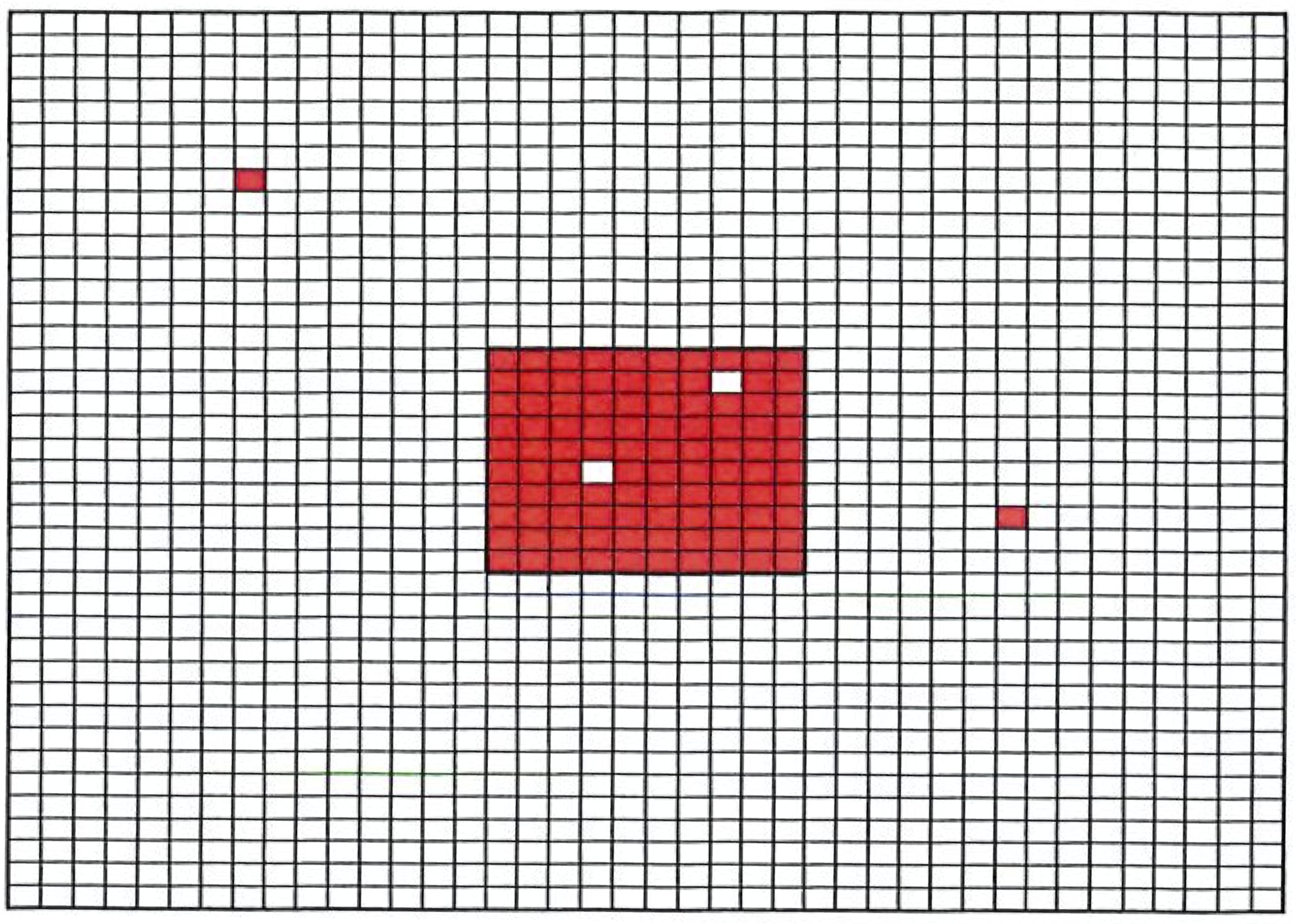

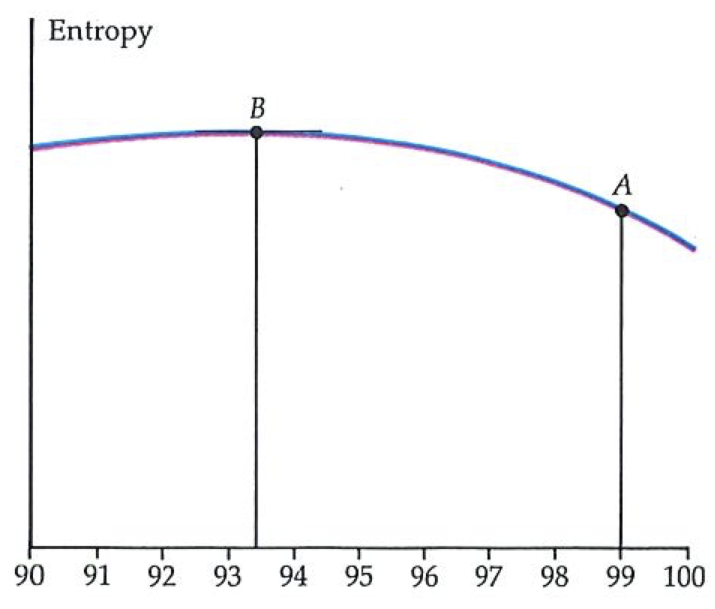

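A slightly different way of looking at the quality of energy is to think in terms of entropy. Suppose we withdraw a quantity of energy as heat from the hot source, and allow it to go directly to the cold sink (see the figure to the left). The entropy of the universe decreases by an amount

$$\frac{\text{Heat}}{\text{Temperature of hot source}}$$

as the heat leaves the hot source, and increases by

$$\frac{\text{Heat}}{\text{Temperature of cold sink}}$$

as the same heat enters the cold sink. Because the cold sink is at the lower temperature, the increase outweighs the decrease, and overall the entropy of the universe rises. The same energy, once it is stored at the lower temperature, corresponds to a higher entropy and is of lower quality.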
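The entropy bookkeeping for heat that flows straight from the hot source to the cold sink, doing no work on the way, can be checked with a short Python function. This is an illustrative sketch; the function name and the numbers are hypothetical. Each reservoir's entropy change is the heat divided by its temperature, counted as a loss for the reservoir that gives up the heat and a gain for the one that receives it.

```python
def direct_flow_entropy_change(heat, t_hot, t_cold):
    """Net entropy change (J/K) when `heat` joules pass directly from a reservoir
    at t_hot to one at t_cold (temperatures in kelvin), with no work done."""
    loss_in_hot = heat / t_hot    # entropy removed from the hot source
    gain_in_cold = heat / t_cold  # entropy added to the cold sink
    return gain_in_cold - loss_in_hot


# 1000 J moving from 500 K to 250 K: the hot source loses 2 J/K, the cold sink gains 4 J/K
print(direct_flow_entropy_change(1000.0, 500.0, 250.0))  # 2.0
```

The result is positive whenever the sink is colder than the source, which is why such a flow is spontaneous; when the two temperatures are equal it is zero, and nothing drives the flow.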

Just as the increasing entropy of the universe is the signpost of natural change and corresponds to energy being stored at ever-lower temperatures, so we can say that the natural direction of change is the one that causes the quality of energy to decline: the natural processes of the world are manifestations of this corruption of quality.

This attitude toward energy and entropy, that entropy represents the manner in which energy is stored, is of great practical significance. The First Law establishes that the energy of a universe (and maybe of the Universe itself) is constant (perhaps constant at zero). Therefore, when we burn fuels, such as coal, oil, and nuclei, we are not diminishing the supply of energy. In that sense, there can never be an energy crisis, for the energy of the world is forever the same. However, every time we burn a lump of coal or a drop of oil, and whenever a nucleus falls apart, we are increasing the entropy of the world (for all these are spontaneous processes). Put another way, every action diminishes the quality of the energy of the universe.

As technological society ever more vigorously burns its resources, so the entropy of the universe inexorably increases, and the quality of the energy it stores concomitantly declines. We are not in the midst of an energy crisis: we are on the threshold of an entropy crisis. Modern civilization is living off the corruption of the stores of energy in the Universe. What we need to do is not to conserve energy, for Nature does that automatically, but to husband its quality. In other words, we have to find ways of furthering and maintaining our civilization with a lower production of entropy: the conservation of quality is the essence of the problem and our duty toward the future.

Thermodynamics, particularly the Second Law (we shall see the less-than-benign role of the Third in a moment), indicates the problems in this program of conservation, and also points to solutions. In order to see how this is so, we shall go back to the Carnot cycle, and apply what we have developed here to its operation.

Ceilings to Efficiency#

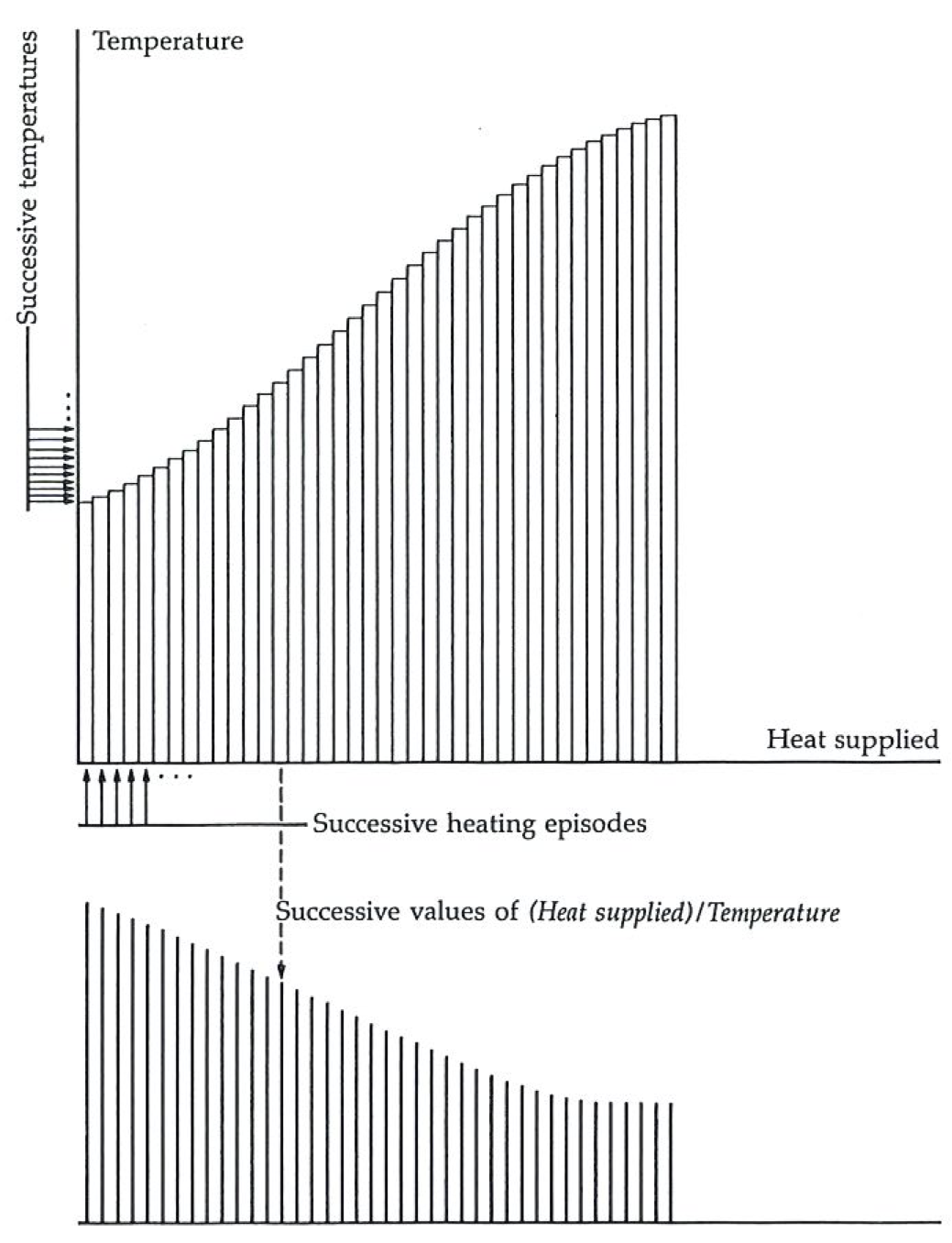

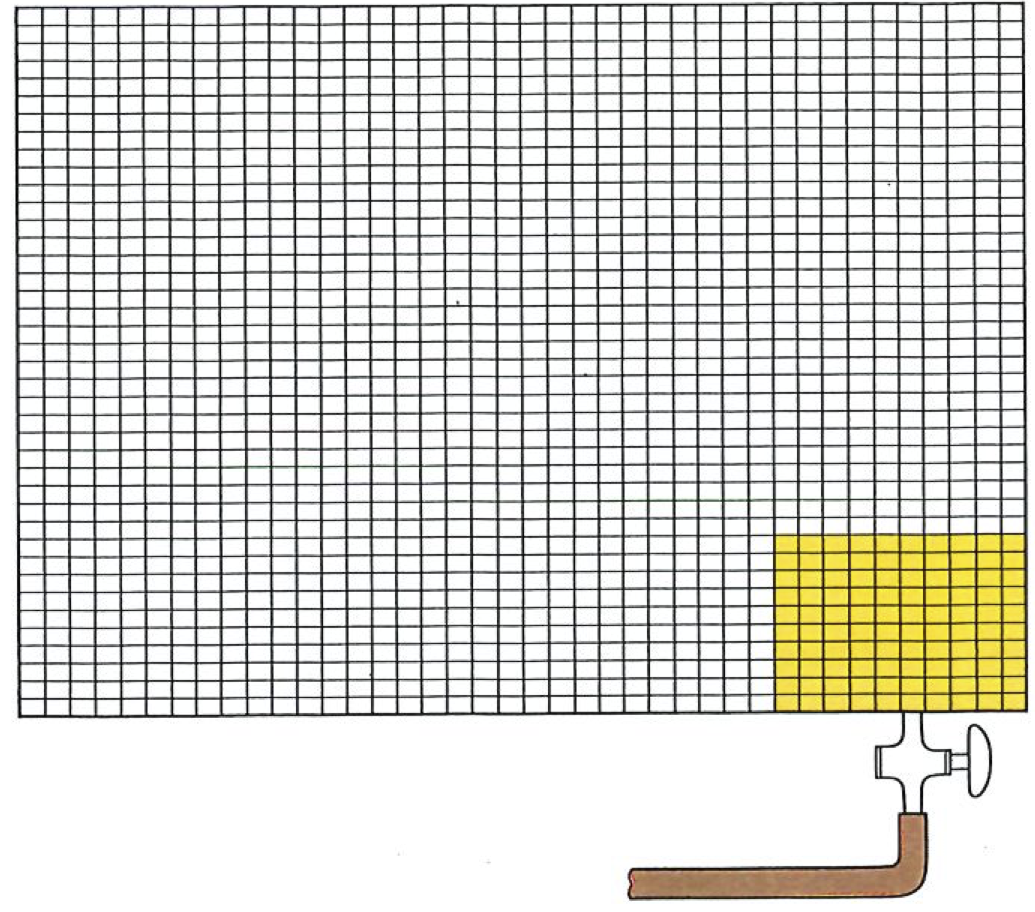

In the first place, if the Carnot engine goes through its cycle, then the entropy change of its little world cannot be negative, for that would signify a nonspontaneous process, and useful engines do not have to be driven. Now, however, we are equipped to calculate the change in entropy, using the formula

$$\text{Entropy change} = \frac{\text{Heat supplied}}{\text{Temperature}}$$

The engine itself returns to its initial condition (it is cyclic); so at the end of a cycle it has the same entropy as it had at the beginning. The work it does in the surroundings does not increase their entropy, because everything happens so carefully and slowly in the quasistatic operating regime. The only changes of entropy are in the hot source, the entropy of which decreases by an amount of magnitude

$$\frac{\text{Heat supplied}}{\text{Temperature of hot source}}$$

and in the cold sink, the entropy of which increases by an amount of magnitude

$$\frac{\text{Heat discarded}}{\text{Temperature of cold sink}}$$

And, under quasistatic conditions, that is all. However, overall the change of entropy must not be negative. Therefore the smallest value of the heat discarded into the cold sink must be large enough to increase the entropy there just enough to overcome the decrease in entropy in the hot source. It is straightforward algebra to show that this minimum discarded energy is

$$\text{Heat discarded} = \text{Heat supplied} \times \frac{\text{Temperature of cold sink}}{\text{Temperature of hot source}}$$
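The “straightforward algebra” can be written out in the same word-equation form. The only inputs are the entropy decrease of the hot source, the entropy increase of the cold sink, and the requirement that their sum not be negative:

$$-\frac{\text{Heat supplied}}{\text{Temperature of hot source}} + \frac{\text{Heat discarded}}{\text{Temperature of cold sink}} \ge 0$$

Multiplying through by the (positive) temperature of the cold sink and rearranging gives

$$\text{Heat discarded} \ge \text{Heat supplied} \times \frac{\text{Temperature of cold sink}}{\text{Temperature of hot source}}$$

The smallest permitted value, where the equality holds, corresponds to a total entropy change of exactly zero: the perfectly operating, quasistatic engine.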

Here is our first major result of thermodynamics. We now know how to minimize the heat we throw away: we keep the cold sink as cold as possible, and the hot source as hot as possible. That is why modern power stations use superheated steam: cold sinks are hard to come by; so the most economical procedure is to use as hot a source as possible. That is, the designer aims to use the highest-quality energy.

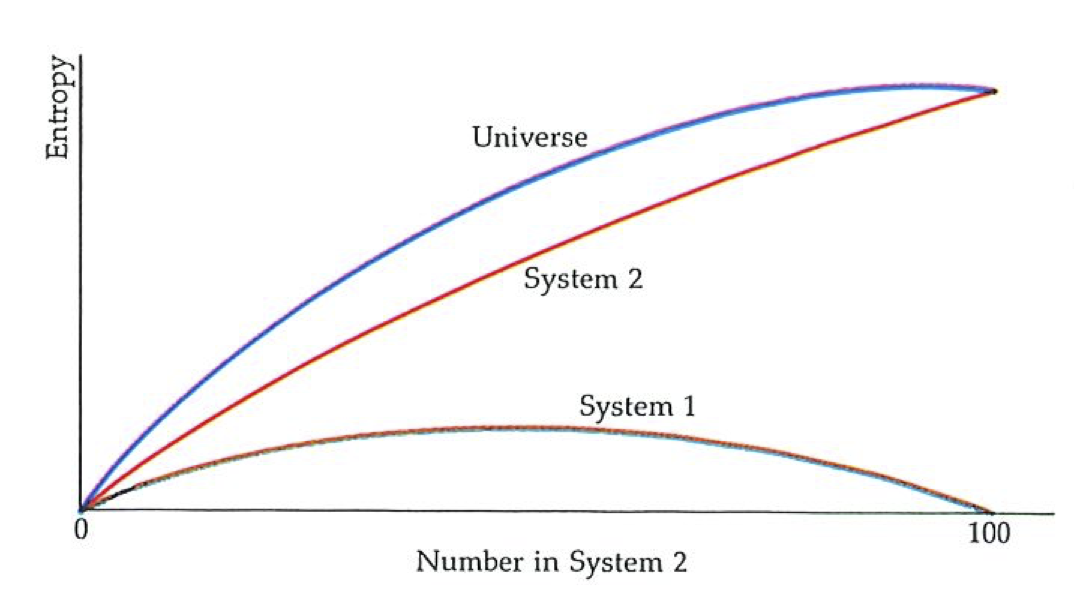

But we can go on, and summon up our second major result. The work generated by the Carnot engine as it goes through its cycle must be equal to the difference between the heats supplied and discarded (this is a consequence of the First Law). The work is therefore equal to Heat supplied minus Heat discarded (see the preceding figure). We are now, however, in a position to express this difference in terms of the Heat supplied multiplied by a factor involving the two temperatures:

$$\text{Work} = \text{Heat supplied} \times \left(1 - \frac{\text{Temperature of cold sink}}{\text{Temperature of hot source}}\right)$$

The efficiency of the engine is the work it produces divided by the heat supplied; so we arrive at the result that the efficiency of a Carnot engine, working perfectly between a hot source and a cold sink, is

$$\text{Efficiency} = 1 - \frac{\text{Temperature of cold sink}}{\text{Temperature of hot source}}$$
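The chain of reasoning for the perfect Carnot engine (discard just enough heat to balance the entropy, take the rest as work by the First Law, divide by the heat supplied) can be sketched in Python. The function name and the temperatures are hypothetical and chosen only to give round numbers; they are not figures for any actual power plant. The check `0 < t_cold` reflects the fact that a cold sink at absolute zero is not available.

```python
def carnot_cycle(heat_supplied, t_hot, t_cold):
    """Return (minimum heat discarded, maximum work, efficiency) for a perfect
    Carnot engine; temperatures in kelvin, heats in joules."""
    if not 0 < t_cold < t_hot:
        raise ValueError("need 0 < t_cold < t_hot (kelvin)")
    heat_discarded = heat_supplied * t_cold / t_hot  # just enough to balance the entropy
    work = heat_supplied - heat_discarded            # First Law
    efficiency = work / heat_supplied                # equals 1 - t_cold / t_hot
    return heat_discarded, work, efficiency


# Illustrative numbers only: 1000 J drawn from a 600 K source, 300 K sink
print(carnot_cycle(1000.0, 600.0, 300.0))  # (500.0, 500.0, 0.5)

# Lowering the cold-sink temperature raises the ceiling on efficiency
for t_cold in (300.0, 150.0, 30.0, 3.0):
    print(t_cold, carnot_cycle(1000.0, 600.0, t_cold)[2])
```

Nothing about the working material enters the function: only the two temperatures and the heat supplied appear, which is the point made in the text that follows.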

That is, the efficiency depends only on the temperatures and is independent of the working material in the engine, which could be air, mercury, steam, or whatever. Most modern power plants for electricity generation use steam at around

Scales of temperature are described in Appendix 1. K denotes the kelvin, the unit of the Kelvin scale of temperature (the scale of fundamental significance, in contrast to the contrived scales of Celsius and Fahrenheit). In brief, a temperature in kelvins is obtained by adding 273 to the temperature in degrees Celsius.

The profound importance of the preceding result is that it puts an upper limit on the efficiency of engines: whatever clever mechanism is contrived, so long as the engineer is stuck with fixed temperatures for the source and the sink, the efficiency of the engine cannot exceed the Carnot value. The reason why should by now be clear (to the external observer). In order for heat to be converted to work spontaneously, there must be an overall increase in the entropy of the universe. When energy is withdrawn as heat from the hot source, there is a reduction in its entropy. Therefore, since the perfectly operating engine does not itself generate entropy, there must be entropy generated elsewhere. Hence, in order for the engine to operate, there must be a dump for at least a little heat: there must be a sink. Moreover, that sink must be a cold one, so that even a small quantity of heat supplied to it results in a large increase in entropy.

The temperature of the cold sink amplifies the effect of dumping the heat: the lower the temperature, the higher the magnification of the entropy. Consequently, the lower the temperature, the less heat we need to discard into it in order to achieve an overall positive entropy change in the universe during the cycle. Hence the efficiency of the conversion increases as the temperature of the cold sink is lowered.

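There appears to be a limit to the lowness of temperature. The conversion efficiency of heat to work cannot exceed unity, for otherwise the First Law would be contravened. Therefore the value of

$$\frac{\text{Temperature of cold sink}}{\text{Temperature of hot source}}$$

cannot be negative: the temperature of the cold sink cannot fall below zero on the Kelvin scale. That lowest conceivable temperature is absolute zero, and only a cold sink at absolute zero would allow heat to be converted completely into work.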

A clue to the attainability of absolute zero can be obtained by considering the Carnot cycle with an ever-decreasing temperature of its cold sink. For a given quantity of heat to be absorbed from the hot source, the piston needs to travel out a definite distance from

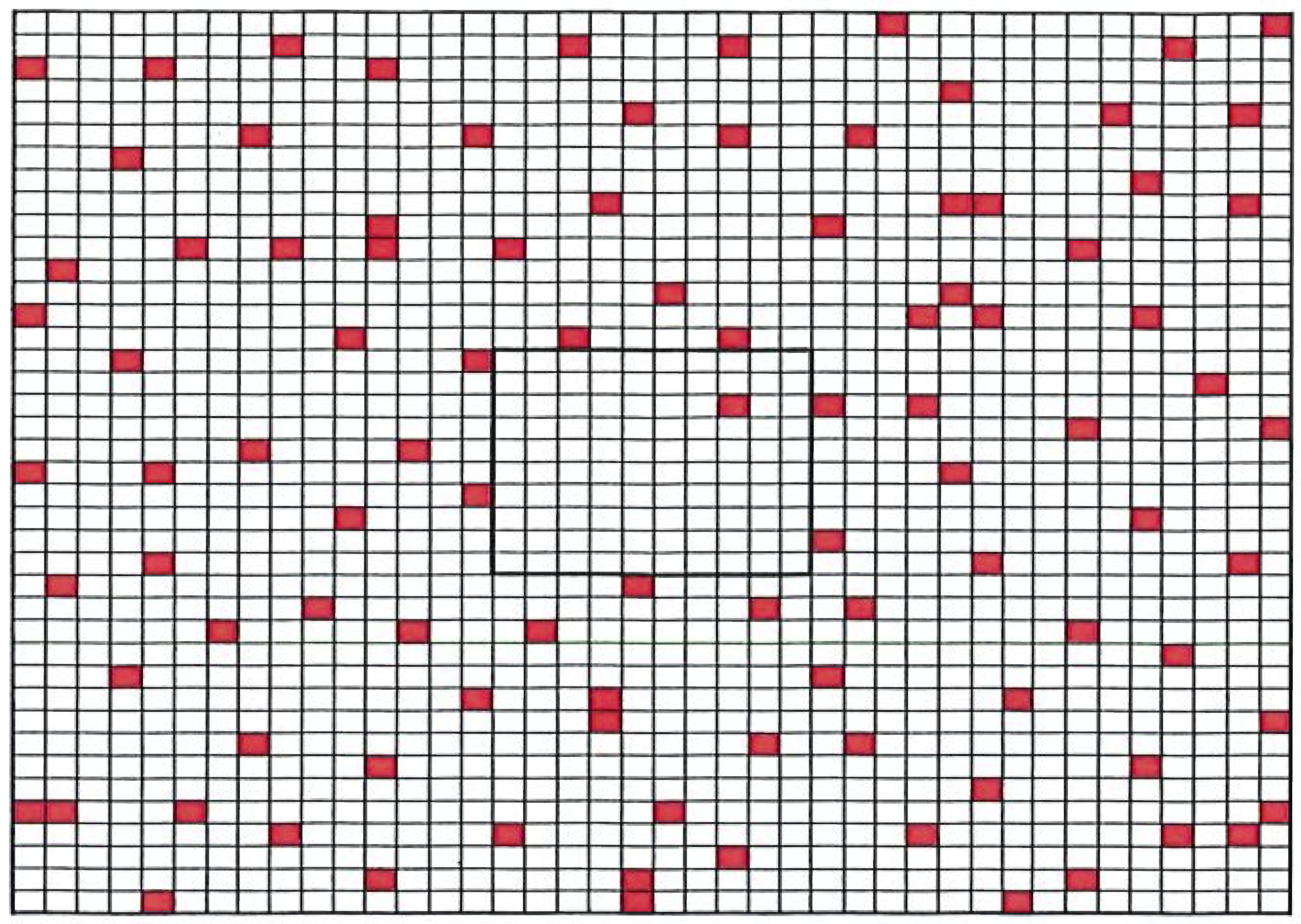

Carnot indicator diagrams for cycles with decreasing cold-sink temperatures (

The Third Law of thermodynamics generalizes this result. In a dejected kind of way it summarizes experience by the following remark:

Third Law: Absolute zero is unattainable in a finite number of steps.

This gives rise to the following sardonic summary of thermodynamics:

First Law: Heat can be converted into work.

Second Law: But completely only at absolute zero.

Third Law: And absolute zero is unattainable!

The End of the External#

We have traveled a long way in this chapter. First, we drew together the skeins of experience summarized by the Kelvin and the Clausius statements of the Second Law, saw that they were equivalent, and exposed two faces of Nature’s dissymmetry. We also saw that we could draw the two statements together by introducing a property of the system not readily discernible to the untutored eye, the entropy. We have seen that the entropy may be measured, and that it may be deployed to draw far-reaching conclusions about the nature of change. We have seen that the Universe is rolling uphill in entropy, and that it is thriving off the corruption of the quality of its energy.

Yet all this is superficial. We have been standing outside the world of events, but we have not yet discerned the deeper nature of change. Now is the time to descend into matter.

3 COLLAPSE INTO CHAOS#

Matter consists of atoms. That is the first step away from the superficialities of experience. Of course, we could burrow even further beneath superficiality, and regard matter as consisting of more (but perhaps not limitlessly more) fundamental entities. Perhaps Kelvin was right in his suspicion that the most fundamental aspect of the world is its eternal, elusive, and perhaps zero energy. But although the onion of matter can be peeled beyond the atom, that is where we stop; for in thermodynamics we are concerned with the changes that occur under the gentle persuasion of heat, and under most of the conditions we encounter, the energy supplied as we heat a system is not great enough to break open its atoms. The gentleness of the domain of thermodynamics is why it was among the first of scientists’ targets: only as increasingly energetic methods of exploration and destruction became available were other targets opened to inspection, and in turn, as the vigor of wars increased, so the internal structure of the atom, the nucleus, and the nucleons became a part of science. Heat, although it may burn and sear, is largely gentle to atoms.

The concept of the atom, although it originated with the Greeks, began to be convincing during the early nineteenth century, and came to full fruition in the early twentieth. As it grew, there developed the realization that although thermodynamics was an increasingly elegant, logical, and self-sufficient subject, it would remain incomplete until its relation to the atomic model of matter had been established. There was some opposition to this view; but support for it came from (among others) Clausius, who identified the nature of heat and work in atomic terms, and set alight the flame that Boltzmann soon was to shine on the world.

Although we have been speaking of atoms, in many of the applications of thermodynamics molecules also play an important role, as do ions, which are atoms or molecules that carry an electric charge. In order to cover all these species, we shall in general speak of particles.

Inside Energy#

As a first step into matter we must refine our understanding of energy by recalling some elementary physics. In particular, we should recall that a particle may possess energy by virtue of its location and its motion. The former is called its potential energy; the latter is its kinetic energy.

A particle in the Earth’s gravitational field has a potential energy that depends on its height: the higher it is, the greater its potential energy. Likewise, a spring has a potential energy that depends on its degree of extension or compression. Charged particles near each other have a potential energy by virtue of their electrostatic interaction. Atoms near each other have a potential energy by virtue of their interaction (largely the electrostatic interactions between their nuclei and their electrons).

A moving particle possesses kinetic energy: the faster it goes, the greater its kinetic energy. A stationary particle possesses no kinetic energy. A heavy particle moving quickly, like a cannonball (or, in more modern terms, a proton in an accelerator), possesses a great store of energy in its motion.

The most important property of the total energy of a particle (the sum of its potential and kinetic energies) is that it is constant in the absence of externally applied forces. This is the law of the conservation of energy, which moved to the center of the stage as the importance of energy as a unifying concept was recognized during the nineteenth century. It accounts for the motion of everyday particles like baseballs and boulders, and applies to particles the size of atoms (subject to some subtle restrictions of that great clarifier, the quantum theory). For instance, the law readily accounts for the motion of a pendulum: there is a periodic conversion from potential to kinetic energy as the bob swings from its high, stationary turning point, moves quickly (with high kinetic energy) through the region of lowest potential energy (at the lowest point of its swing), and then climbs more and more slowly to its next turning point. Potential and kinetic energy are equivalent, in the sense that one may readily be changed into the other; their sum, in an isolated object, remains the same.
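The pendulum's periodic exchange of potential and kinetic energy can be watched numerically. This is a minimal sketch, not a model taken from the text: a frictionless simple pendulum with hypothetical length, mass, and release angle, stepped forward in time with the velocity Verlet method (chosen because it keeps the computed total energy from drifting over many swings). The function name `swing_pendulum` is illustrative.

```python
import math


def swing_pendulum(theta0, length=1.0, g=9.81, mass=1.0, dt=1e-3, steps=5000):
    """Integrate a simple pendulum released from rest at angle theta0 (radians)
    with the velocity Verlet method; return a list of (kinetic, potential) energies."""
    def acceleration(theta):
        return -(g / length) * math.sin(theta)

    theta, omega = theta0, 0.0
    energies = []
    for _ in range(steps):
        kinetic = 0.5 * mass * (length * omega) ** 2
        potential = mass * g * length * (1.0 - math.cos(theta))  # zero at the lowest point
        energies.append((kinetic, potential))
        a = acceleration(theta)
        theta += omega * dt + 0.5 * a * dt ** 2
        omega += 0.5 * (a + acceleration(theta)) * dt
    return energies


energies = swing_pendulum(theta0=0.5)
totals = [k + p for k, p in energies]
print(f"largest kinetic energy:   {max(k for k, _ in energies):.4f} J")
print(f"largest potential energy: {max(p for _, p in energies):.4f} J")
print(f"total energy drifts by at most {max(totals) - min(totals):.2e} J")
```

The largest kinetic energy (at the bottom of the swing) matches the largest potential energy (at the turning points), while their sum stays essentially fixed, which is the conservation law at work.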

Intrinsic to the soul of thermodynamics is the fact that it deals with vast numbers of particles. A typical yardstick to keep in mind is Avogadro’s number. Its value is about $6 \times 10^{23}$.